Guide to configuring and using ForgeRock® Directory Services features.

ForgeRock Identity Platform™ serves as the basis for our simple and comprehensive Identity and Access Management solution. We help our customers deepen their relationships with their customers, and improve the productivity and connectivity of their employees and partners. For more information about ForgeRock and about the platform, see https://www.forgerock.com.

The ForgeRock Common REST API works across the platform to provide common ways to access web resources and collections of resources.

This guide shows you how to configure, maintain, and troubleshoot Directory Services software. ForgeRock Directory Services allow applications to access directory data:

Over Lightweight Directory Access Protocol (LDAP)

Using Directory Services Markup Language (DSML)

Over Hypertext Transfer Protocol (HTTP) by using HTTP methods in the Representational State Transfer (REST) style

In reading and following the instructions in this guide, you will learn how to:

Use Directory Services administration tools

Manage Directory Services processes

Import, export, back up, and restore directory data

Configure Directory Services connection handlers for all supported protocols

Configure administrative privileges and fine-grained access control

Index directory data, manage schemas for directory data, and enforce uniqueness of directory data attribute values

Configure data replication

Implement password policies, pass-through authentication to another directory, password synchronization with Samba, account lockout, and account status notification

Set resource limits to prevent unfair use of server resources

Monitor servers through logs and alerts and over JMX

Tune servers for best performance

Secure server deployments

Change server key pairs and public key certificates

Move a server to a different system

Troubleshoot server issues

This chapter introduces directory concepts and server features. In this chapter you will learn:

Why directory services exist and what they do well

How data is arranged in directories that support Lightweight Directory Access Protocol (LDAP)

How clients and servers communicate in LDAP

What operations are standard according to LDAP and how standard extensions to the protocol work

Why directory servers index directory data

What LDAP schemas are for

What LDAP directories provide to control access to directory data

Why LDAP directory data is replicated and what replication does

What Directory Services Markup Language (DSML) is for

How HTTP applications can access directory data in the Representational State Transfer (REST) style

A directory resembles a dictionary or a phone book. If you know a word, you can look up its entry in the dictionary to learn its definition or its pronunciation. If you know a name, you can look up its entry in the phone book to find the telephone number and street address associated with the name. If you are bored, curious, or have lots of time, you can also read through the dictionary, phone book, or directory, entry after entry.

Where a directory differs from a paper dictionary or phone book is in how entries are indexed. Dictionaries typically have one index: words in alphabetical order. Phone books, too, have one index: names in alphabetical order. Directory entries, on the other hand, are often indexed on multiple attributes, such as names, user identifiers, email addresses, and telephone numbers. This means you can look up a directory entry by the name of the user the entry belongs to, but also by their user identifier, their email address, or their telephone number, for example.

ForgeRock Directory Services are based on the Lightweight Directory Access Protocol (LDAP). Much of this chapter therefore serves as an introduction to LDAP. ForgeRock Directory Services also provide RESTful access to directory data. As directory administrator, however, you will find it useful to understand the underlying LDAP model even if most users access the directory over HTTP rather than LDAP.

Phone companies have been managing directories for many decades. The Internet itself has relied on distributed directory services like DNS since the mid-1980s.

It was not until the late 1980s, however, that experts from what is now the International Telecommunication Union published the X.500 set of international standards, including the Directory Access Protocol. The X.500 standards specify Open Systems Interconnection (OSI) protocols and data definitions for general-purpose directory services. The X.500 standards were designed to meet the needs of systems built according to the X.400 standards, which cover electronic mail services.

Lightweight Directory Access Protocol has been around since the early 1990s. LDAP was originally developed as an alternative protocol that would allow directory access over Internet protocols rather than OSI protocols, and be lightweight enough for desktop implementations. By the mid-1990s, LDAP directory servers became generally available and widely used.

Until the late 1990s, LDAP directory servers were designed primarily with quick lookups and high availability for lookups in mind. LDAP directory servers replicate data, so when an update is made, that update is applied to other peer directory servers. Thus, if one directory server goes down, lookups can continue on other servers. Furthermore, if a directory service needs to support more lookups, the administrator can simply add another directory server to replicate with its peers.

As organizations rolled out larger and larger directories serving more and more applications, they discovered that they needed high availability not only for lookups, but also for updates. Around the year 2000, directories began to support multi-master replication; that is, replication with multiple read-write servers. Soon thereafter, the organizations with the very largest directories started to need higher update performance as well as availability.

The DS code base began in the mid-2000s, when engineers solving the update performance issue decided that the cost of adapting the existing C-based directory technology for high-performance updates would be higher than the cost of building a new, high-performance directory using Java technology.

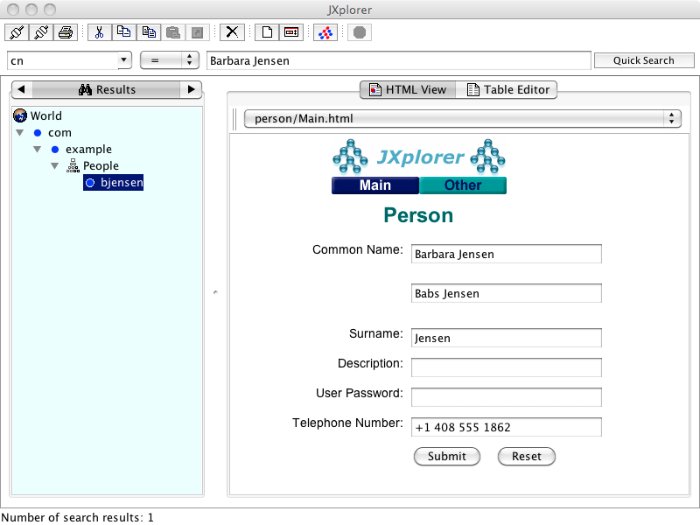

LDAP directory data is organized into entries, similar to the entries for words in the dictionary, or for subscriber names in the phone book. A sample entry follows:

dn: uid=bjensen,ou=People,dc=example,dc=com
uid: bjensen
cn: Babs Jensen
cn: Barbara Jensen
facsimileTelephoneNumber: +1 408 555 1992
gidNumber: 1000
givenName: Barbara
homeDirectory: /home/bjensen
l: San Francisco
mail: bjensen@example.com
objectClass: inetOrgPerson
objectClass: organizationalPerson
objectClass: person
objectClass: posixAccount
objectClass: top
ou: People
ou: Product Development
roomNumber: 0209
sn: Jensen
telephoneNumber: +1 408 555 1862
uidNumber: 1076

Barbara Jensen's entry has a number of attributes, such as uid: bjensen, telephoneNumber: +1 408 555 1862, and objectClass: posixAccount. (The objectClass attribute type indicates which types of attributes are required and allowed for the entry. As the entry's object classes can be updated online, and even the definitions of object classes and attributes are expressed as entries that can be updated online, directory data is extensible on the fly.) When you look up her entry in the directory, you specify one or more attributes and values to match. The directory server then returns entries with attribute values that match what you specified.

The attributes you search for are typically indexed in the directory, so the directory server can retrieve matching entries more quickly. Attribute values do not have to be strings. Some attribute values, like certificates and photos, are binary.

The entry also has a unique identifier, shown at the top of the entry: dn: uid=bjensen,ou=People,dc=example,dc=com. DN is an acronym for distinguished name. No two entries in the directory have the same distinguished name. DNs are, however, typically composed of case-insensitive attribute values, so DNs that differ only in letter case usually identify the same entry.

Sometimes distinguished names include characters that you must escape. The following example shows an entry that includes escaped characters in the DN:

$ ldapsearch --port 1389 --baseDN dc=example,dc=com "(uid=escape)"
dn: cn=DN Escape Characters \" # \+ \, \; \< = \> \\,dc=example,dc=com
objectClass: person
objectClass: inetOrgPerson
objectClass: organizationalPerson
objectClass: top
givenName: DN Escape Characters
uid: escape
cn: DN Escape Characters " # + , ; < = > \
sn: " # + , ; < = > \
mail: escape@example.com
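The escaping in that DN follows the string representation rules for DNs in RFC 4514. As a minimal sketch, assuming only the RFC 4514 rules for attribute values (this is not the DS server's own code, and the helper name `escape_dn_value` is hypothetical), the following Python function reproduces the escaped cn value shown in the ldapsearch output above:

```python
# Characters that RFC 4514 requires to be escaped anywhere in a DN attribute value.
SPECIAL_CHARACTERS = set('"+,;<>\\')


def escape_dn_value(value: str) -> str:
    """Escape a string for use as an attribute value in a DN (RFC 4514, section 2.4)."""
    escaped = []
    last = len(value) - 1
    for i, ch in enumerate(value):
        if ch in SPECIAL_CHARACTERS:
            escaped.append("\\" + ch)
        elif ch == "\x00":
            escaped.append("\\00")  # NUL is written as a hex pair
        elif ch == "#" and i == 0:
            escaped.append("\\#")  # '#' is special only at the start of a value
        elif ch == " " and i in (0, last):
            escaped.append("\\ ")  # leading and trailing spaces must be escaped
        else:
            escaped.append(ch)  # includes '=' and a non-leading '#', which need no escape
    return "".join(escaped)


cn = 'DN Escape Characters " # + , ; < = > \\'
print("cn=" + escape_dn_value(cn) + ",dc=example,dc=com")
```

Note that = and the # in the middle of the value stay unescaped, just as in the DN returned by the server.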

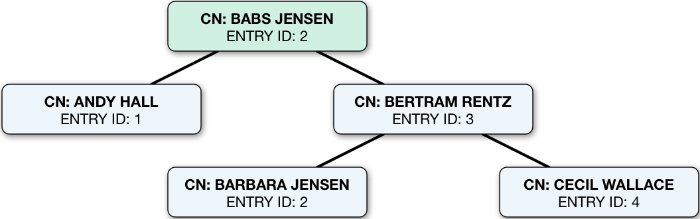

LDAP entries are arranged hierarchically in the directory. The hierarchical organization resembles a file system on a PC or a web server, often imagined as an upside-down tree structure, or a pyramid. The distinguished name consists of components separated by commas, as in uid=bjensen,ou=People,dc=example,dc=com. The names are little-endian: the leftmost component names the entry itself, and each component to its right names an entry higher in the hierarchy. In this way, the components reflect the hierarchy of directory entries.

"Directory Data" shows the hierarchy.

Barbara Jensen's entry is located under an entry with DN ou=People,dc=example,dc=com, an organizational unit and parent entry for the people at Example.com. The ou=People entry is located under the entry with DN dc=example,dc=com, the base entry for Example.com. DC is an acronym for domain component. The directory has other base entries, such as cn=config, under which the configuration is accessible through LDAP. A directory can serve multiple organizations, too. You might find dc=example,dc=com, dc=mycompany,dc=com, and o=myOrganization in the same LDAP directory. Therefore, when you look up entries, you specify the base DN to look under in the same way you need to know whether to look in the New York, Paris, or Tokyo phone book to find a telephone number. The root entry for the directory, technically the entry with DN "" (the empty string), is called the root DSE. It contains information about what the server supports, including the other base DNs it serves.
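Because DN components are little-endian, you can walk up the hierarchy by dropping components from the left. The following Python sketch illustrates this with two hypothetical helpers, `split_rdns` and `ancestors`. It assumes a simplified parser that honors backslash escapes only and leaves multi-valued RDNs and other RFC 4514 details aside:

```python
def split_rdns(dn: str) -> list[str]:
    """Split a string DN into RDNs at unescaped commas, leftmost (most specific) first."""
    rdns, current, escaped = [], [], False
    for ch in dn:
        if escaped:
            current.append(ch)  # the character after a backslash is literal
            escaped = False
        elif ch == "\\":
            current.append(ch)  # keep the escape so the RDN can be rejoined unchanged
            escaped = True
        elif ch == ",":
            rdns.append("".join(current))
            current = []
        else:
            current.append(ch)
    rdns.append("".join(current))
    return rdns


def ancestors(dn: str) -> list[str]:
    """Return the DNs above an entry in the naming hierarchy, nearest first."""
    rdns = split_rdns(dn)
    return [",".join(rdns[i:]) for i in range(1, len(rdns))]


for parent in ancestors("uid=bjensen,ou=People,dc=example,dc=com"):
    print(parent)
```

For Barbara Jensen's entry this yields ou=People,dc=example,dc=com, then dc=example,dc=com, then dc=com. The last name is part of the naming hierarchy, but whether an entry with that DN exists depends on the directory; in this example the base entry is dc=example,dc=com.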

A directory server stores two kinds of attributes in a directory entry: user attributes and operational attributes. User attributes hold the information for users of the directory. All of the attributes shown in the entry at the outset of this section are user attributes. Operational attributes hold information used by the directory itself. Examples of operational attributes include entryUUID, modifyTimestamp, and subschemaSubentry. When an LDAP search operation finds an entry in the directory, the directory server returns all the visible user attributes unless the search request restricts the list of attributes by specifying those attributes explicitly. The directory server does not, however, return any operational attributes unless the search request specifically asks for them. Generally speaking, applications should change only user attributes, and leave updates of operational attributes to the server, relying on public directory server interfaces to change server behavior. An exception is access control instruction (aci) attributes, which are operational attributes used to control access to directory data.

In some client-server communication, like web browsing, a connection is set up and then torn down for each client request to the server. LDAP has a different model. In LDAP, the client application connects to the server and authenticates, then requests any number of operations, perhaps processing results in between requests, and finally disconnects when done.

The standard operations are as follows:

Bind (authenticate). The first operation in an LDAP session usually involves the client binding to the LDAP server, with the server authenticating the client. Authentication establishes the client's identity in LDAP terms, the identity that the server later uses to authorize (or not) access to directory data that the client wants to look up or change.

If the client does not bind explicitly, the server treats the client as an anonymous client. An anonymous client is allowed to do anything that can be done anonymously. What can be done anonymously depends on access control and configuration settings. The client can also bind again on the same connection.

Search (lookup). After binding, the client can request that the server return entries based on an LDAP filter, which is an expression that the server uses to find entries that match the request, and a base DN under which to search. For example, to look up all entries for people with the email address bjensen@example.com in data for Example.com, you would specify a base DN such as ou=People,dc=example,dc=com and the filter (mail=bjensen@example.com).

Compare. After binding, the client can request that the server compare an attribute value the client specifies with the value stored on an entry in the directory.

Modify. After binding, the client can request that the server change one or more attribute values on an entry. Administrators often do not allow clients to change directory data, so grant appropriate access to client applications that have the right to update data.

Add. After binding, the client can request that the server add a new LDAP entry.

Delete. After binding, the client can request that the server delete an entry. To delete an entry with other entries underneath, first delete the children, then the parent.

Modify DN. After binding, the client can request that the server change the distinguished name of the entry. In other words, this renames the entry or moves it to another location. For example, if Barbara changes her unique identifier from bjensen to something else, her DN would have to change. For another example, if you decide to consolidate ou=Customers and ou=Employees under ou=People instead, all the entries underneath must change distinguished names. Renaming entire branches of entries can be a major operation for the directory, so avoid moving entire branches if you can.

Unbind. When done making requests, the client can request an unbind operation to end the LDAP session.

Abandon. When a request seems to be taking too long to complete, or when a search request returns many more matches than desired, the client can send an abandon request to the server to drop the operation in progress.
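To make the Search and Modify DN operations more concrete, here is a toy in-memory model in Python. It is not how a DS server stores or searches data. The `directory` dictionary and the helpers `in_subtree`, `search`, and `move_subtree` are hypothetical, DN matching is simplified to case-insensitive string comparison, and the filter is limited to a single equality assertion such as (mail=bjensen@example.com):

```python
# Hypothetical in-memory "directory": DN -> {attribute name: [values]}.
directory = {
    "ou=People,dc=example,dc=com": {"ou": ["People"]},
    "uid=bjensen,ou=People,dc=example,dc=com": {"uid": ["bjensen"], "mail": ["bjensen@example.com"]},
    "ou=Customers,dc=example,dc=com": {"ou": ["Customers"]},
    "uid=kvaughan,ou=Customers,dc=example,dc=com": {"uid": ["kvaughan"], "mail": ["kvaughan@example.com"]},
}


def in_subtree(dn: str, base_dn: str) -> bool:
    """True if dn is base_dn itself or any entry beneath it (subtree scope)."""
    dn, base_dn = dn.lower(), base_dn.lower()
    return dn == base_dn or dn.endswith("," + base_dn)


def search(base_dn: str, attr: str, value: str) -> list[str]:
    """Return DNs under base_dn having an attr value equal to value, ignoring case."""
    matches = []
    for dn, entry in directory.items():
        if not in_subtree(dn, base_dn):
            continue  # outside the base DN: never considered
        values = [
            v.lower()
            for name, vals in entry.items()
            if name.lower() == attr.lower()
            for v in vals
        ]
        if value.lower() in values:
            matches.append(dn)
    return matches


def move_subtree(old_base: str, new_base: str) -> int:
    """Rename old_base and every entry beneath it; return how many DNs changed."""
    moved = [dn for dn in directory if in_subtree(dn, old_base)]
    for dn in moved:
        # Keep the part of the DN below old_base and append the new location.
        directory[dn[: len(dn) - len(old_base)] + new_base] = directory.pop(dn)
    return len(moved)
```

For example, search("ou=People,dc=example,dc=com", "mail", "bjensen@example.com") returns Barbara's DN only, because the other entry lies outside the base DN. Moving ou=Customers under ou=People with move_subtree changes two DNs, the branch entry and its child, which is why renaming large branches is expensive.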

For practical examples showing how to perform the key operations using the command-line tools delivered with DS servers, read "Performing LDAP Operations" in the Developer's Guide.

LDAP has standardized two mechanisms for extending the operations directory servers can perform beyond the basic operations listed above. One mechanism involves using LDAP controls. The other mechanism involves using LDAP extended operations.

LDAP controls are information added to an LDAP message to further specify how an LDAP operation should be processed. For example, the Server-Side Sort request control modifies a search to request that the directory server return entries to the client in sorted order. The Subtree Delete request control modifies a delete to request that the server also remove child entries of the entry targeted for deletion.

One special search operation that DS servers support is Persistent Search. The client application sets up a Persistent Search to continue receiving new results whenever changes are made to data that is in the scope of the search, thus using the search as a form of change notification. Persistent Searches are intended to remain connected permanently, though they can be idle for long periods of time.

The directory server can also send response controls in some cases to indicate that the response contains special information. Examples include responses for entry change notification, password policy, and paged results.

For the list of supported LDAP controls, see "LDAP Controls" in the Reference.

LDAP extended operations are additional LDAP operations not included in the original standard list. For example, the Cancel Extended Operation works like an abandon operation, but finishes with a response from the server after the cancel is complete. The StartTLS Extended Operation allows a client to connect to a server on an unsecured port and then start Transport Layer Security (TLS) negotiations to protect communications.

For the list of supported LDAP extended operations, see "LDAP Extended Operations" in the Reference.

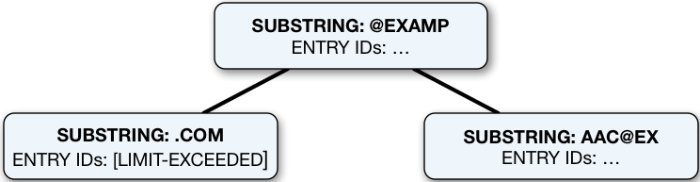

As mentioned earlier in this chapter, directories have indexes for multiple attributes. By default, DS does not let normal users perform searches that are not indexed, because such searches mean DS servers have to scan an entire directory database when looking for matches.
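Conceptually, an equality index maps each normalized attribute value to the entries that hold it, so a lookup goes straight to the candidate entries instead of visiting every entry. The Python sketch below illustrates only that idea, assuming a simple case-insensitive match. DS indexes are stored in the backend database and are more elaborate, and the entry IDs and function names here are hypothetical:

```python
from collections import defaultdict

# Hypothetical entries keyed by entry ID: {attribute: [values]}.
entries = {
    1: {"uid": ["bjensen"], "mail": ["bjensen@example.com"]},
    2: {"uid": ["kvaughan"], "mail": ["kvaughan@example.com"]},
    3: {"uid": ["scarter"], "mail": ["scarter@example.com"]},
}


def unindexed_search(attr, value):
    """Scan every entry: the cost grows with the size of the whole database."""
    return sorted(
        entry_id
        for entry_id, entry in entries.items()
        if value.lower() in (v.lower() for v in entry.get(attr, []))
    )


def build_equality_index(attr):
    """Map each normalized value of attr to the IDs of the entries holding it."""
    index = defaultdict(set)
    for entry_id, entry in entries.items():
        for v in entry.get(attr, []):
            index[v.lower()].add(entry_id)
    return index


def indexed_search(index, value):
    """A single index lookup returns the candidate entries directly."""
    return sorted(index.get(value.lower(), set()))


mail_index = build_equality_index("mail")
```

Both searches return the same entries; the difference is that unindexed_search touches every entry, while indexed_search touches only the matching index key. The index must also be kept up to date on every write, which is part of why index choices matter.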

As directory administrator, part of your responsibility is making sure directory data is properly indexed. DS software provides tools for building and rebuilding indexes, for verifying indexes, and for evaluating how well indexes are working.

For help better understanding and managing indexes, read "Indexing Attribute Values".

Some databases are designed to hold huge amounts of data for a particular application. Although such databases might support multiple applications, how their data is organized depends a lot on the particular applications served.

In contrast, directories are designed for shared, centralized services. Although the first guides to deploying directory services suggested taking inventory of all the applications that would access the directory, many current directory administrators do not even know how many applications use their services. The shared, centralized nature of directory services fosters interoperability in practice, and has helped directory services be successful in the long term.

Part of what makes this possible is the shared model of directory user information, and in particular the LDAP schema. LDAP schema defines what the directory can contain. This means that directory entries are not arbitrary data, but instead tightly codified objects whose attributes are completely predictable from publicly readable definitions. Many schema definitions are in fact standard. They are the same not just across a directory service but across different directory services.
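To illustrate how object classes make entry contents predictable, the following Python sketch checks an entry against the required (MUST) and allowed (MAY) attributes of the standard top and person object classes as defined in RFC 4519. It is a simplification: it assumes only these two classes, ignores attribute name aliases (for example, surname for sn), and the helper names are hypothetical:

```python
# Subset of RFC 4519 object classes: name -> (superior class, MUST attributes, MAY attributes).
OBJECT_CLASSES = {
    "top": (None, {"objectclass"}, set()),
    "person": (
        "top",
        {"sn", "cn"},
        {"userpassword", "telephonenumber", "seealso", "description"},
    ),
}


def required_and_allowed(class_names):
    """Collect MUST and MAY attributes from the classes and all their superiors.

    Raises KeyError for object classes outside this small subset.
    """
    must, may = set(), set()
    for name in class_names:
        current = name.lower()
        while current is not None:
            superior, class_must, class_may = OBJECT_CLASSES[current]
            must |= class_must
            may |= class_may
            current = superior
    return must, may


def check_entry(entry):
    """Return (missing required attributes, attributes the object classes do not allow)."""
    present = {name.lower() for name in entry}
    must, may = required_and_allowed(entry["objectClass"])
    return sorted(must - present), sorted(present - must - may)
```

An entry with only top and person that has cn and mail but no sn is reported as missing sn and holding a disallowed mail attribute. Barbara Jensen's real entry passes because inetOrgPerson and its superiors allow mail and the other attributes she has.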

At the same time, unlike some databases, LDAP schema and the data it defines can be extended on the fly while the service is running. LDAP schema is also accessible over LDAP. One attribute of every entry is its set of objectClass values. This gives you as administrator great flexibility in adapting your directory service to store new data without losing or changing the structure of existing data, and also without ever stopping your directory service.

For a closer look, see "Managing Schema".

In addition to directory schema, another feature of directory services that enables sharing is fine-grained access control.

As directory administrator, you can control who has access to what data when, how, where and under what conditions by using access control instructions (ACI). You can allow some directory operations and not others. You can scope access control from the whole directory service down to individual attributes on directory entries. You can specify when, from what host or IP address, and what strength of encryption is needed in order to perform a particular operation.

As ACIs are stored on entries in the directory, you can furthermore update access controls while the service is running, and even delegate that control to client applications. DS software combines the strengths of ACIs with separate administrative privileges to help you secure access to directory data.

For more information, read "Configuring Privileges and Access Control".

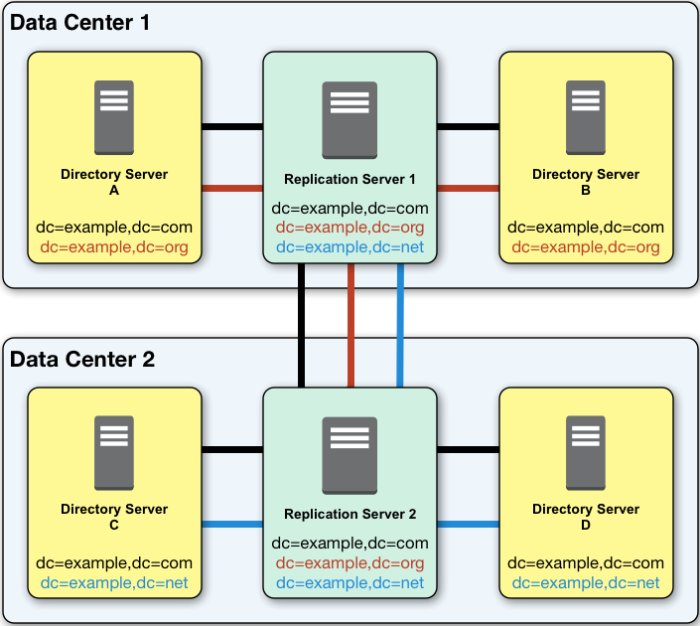

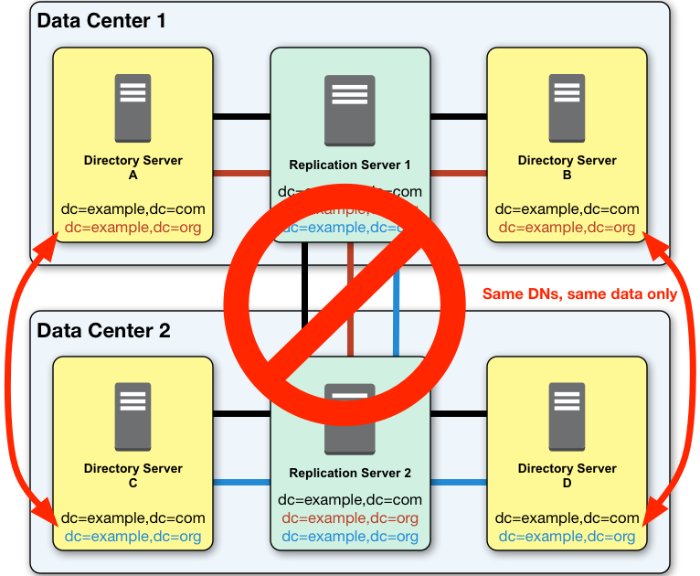

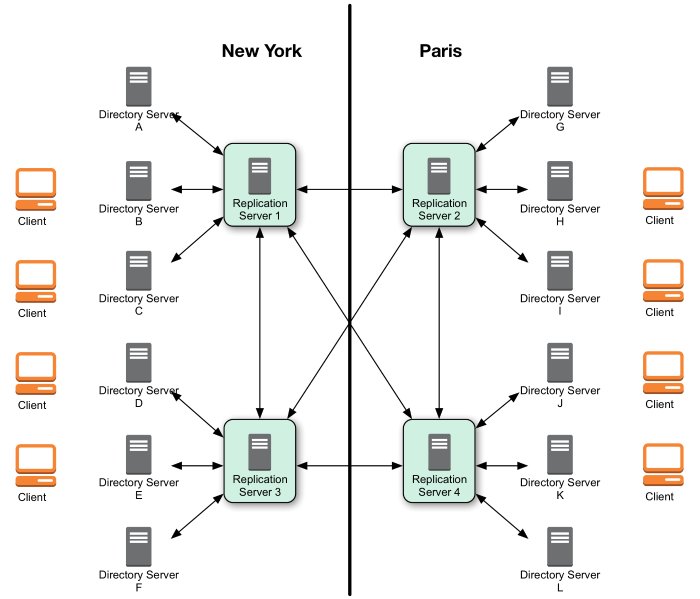

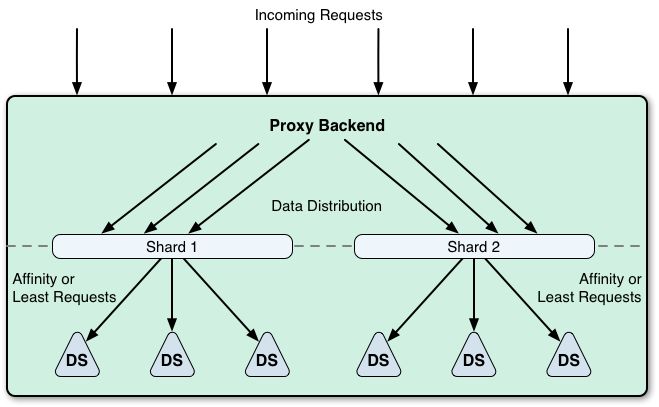

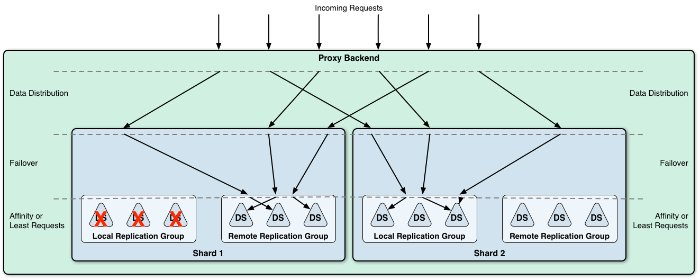

DS replication consists of copying each update to the directory service to multiple directory servers. This brings both redundancy, in the case of network partitions or of crashes, and scalability for read operations. Most directory deployments involve multiple servers replicating together.

When you have replicated servers, all of which are writable, you can have replication conflicts. What if, for example, there is a network outage between two replicas, and meanwhile two different values are written to the same attribute on the same entry on the two replicas? In nearly all cases, DS replication can resolve these situations automatically without involving you, the directory administrator. This makes your directory service resilient and safe even in the unpredictable real world.

One perhaps counterintuitive aspect of replication is that although you do add directory read capacity by adding replicas to your deployment, you do not add directory write capacity by adding replicas. As each write operation must be replayed everywhere, if you have N servers, each client write results in N write operations, one on every server.
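A back-of-the-envelope model makes the point. Assume, purely for illustration, that load balancing spreads reads evenly across servers, that reads and writes cost the same, and that every server applies every write, either from a client or as a replicated change. Each server then handles reads/N + writes operations per second, so adding servers shrinks only the read share. The function name is hypothetical:

```python
def per_server_ops(reads_per_sec: float, writes_per_sec: float, servers: int) -> float:
    """Operations each replica handles per second under a simplified model.

    Reads are shared across replicas; every replica applies every write,
    whether it received the write from a client or through replication.
    """
    return reads_per_sec / servers + writes_per_sec


for n in (1, 2, 4, 8):
    print(n, per_server_ops(8000, 1000, n))
```

With 8,000 reads and 1,000 writes per second, going from one server to eight cuts each server's load from 9,000 to 2,000 operations per second, but the 1,000 writes per second remain on every server no matter how many you add.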

Another aspect of replication to keep in mind is that it is "loosely consistent." Loosely consistent means that directory data will eventually converge to be the same everywhere, but it will not necessarily be the same everywhere right away. Client applications sometimes get this wrong when they write to a pool of load-balanced directory servers, immediately read back what they wrote, and are surprised that it is not the same. If your users are complaining about this, either make sure their application always gets sent to the same server, or ask them to adapt their application to tolerate short replication delays.

To get started with replication, see "Managing Data Replication".

Directory Services Markup Language (DSML) v2.0 became a standard in 2001. DSMLv2 describes directory data and basic directory operations in XML format, so they can be carried in Simple Object Access Protocol (SOAP) messages. DSMLv2 also allows clients to batch multiple operations together in a single request, to be processed either in sequential order or in parallel.

DS software provides support for DSMLv2 as a DSML gateway, which is a Servlet that connects to any standard LDAPv3 directory. DSMLv2 opens basic directory services to SOAP-based web services and service oriented architectures.
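For illustration, the following Python sketch uses the standard library's xml.etree.ElementTree to build a DSMLv2 batch request holding one search, equivalent to the filter (mail=bjensen@example.com) under ou=People,dc=example,dc=com. It assumes the element and attribute names of the DSMLv2 core schema; the helper names are hypothetical, and the sketch does not show the SOAP envelope or the URL where your DSML gateway is deployed:

```python
import xml.etree.ElementTree as ET

DSML_NS = "urn:oasis:names:tc:DSML:2:0:core"
ET.register_namespace("", DSML_NS)  # serialize DSML elements without a prefix


def dsml(tag: str) -> str:
    """Qualify a DSML element name with the DSMLv2 namespace."""
    return f"{{{DSML_NS}}}{tag}"


def batch_search_request(base_dn: str, attr: str, value: str, processing: str = "sequential") -> str:
    """Build a DSMLv2 batchRequest holding a single subtree equality search."""
    batch = ET.Element(dsml("batchRequest"), processing=processing)
    search = ET.SubElement(
        batch,
        dsml("searchRequest"),
        dn=base_dn,
        scope="wholeSubtree",
        derefAliases="neverDerefAliases",
    )
    search_filter = ET.SubElement(search, dsml("filter"))
    equality = ET.SubElement(search_filter, dsml("equalityMatch"), name=attr)
    ET.SubElement(equality, dsml("value")).text = value
    return ET.tostring(batch, encoding="unicode")


print(batch_search_request("ou=People,dc=example,dc=com", "mail", "bjensen@example.com"))
```

Adding more request elements under the same batchRequest, and setting processing to "parallel", is how a client would ask the gateway to handle several operations in one round trip.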

To set up DSMLv2 access, see "DSML Client Access".

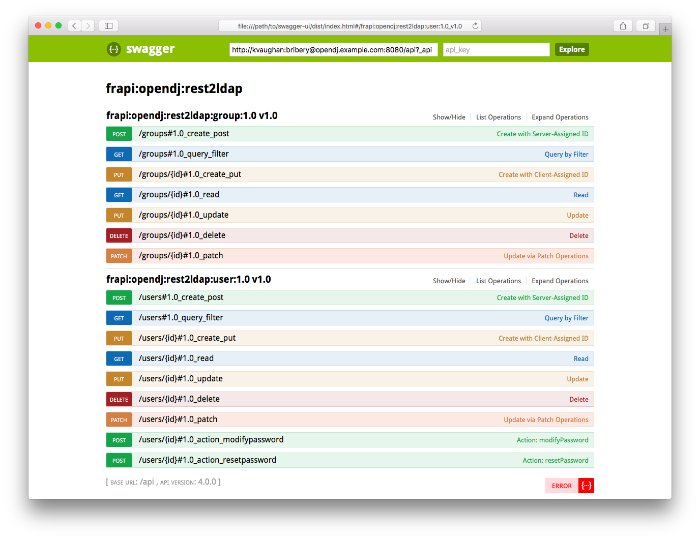

DS software can expose directory data as JSON resources over HTTP to REST clients, providing easy access to directory data for developers who are not familiar with LDAP. RESTful access depends on a configuration that describes how the JSON representation maps to LDAP entries.

Although client applications have no need to understand LDAP, the underlying implementation still uses the LDAP model for its operations. The mapping adds some overhead. Furthermore, depending on the configuration, individual JSON resources can require multiple LDAP operations. For example, an LDAP user entry represents the manager as the DN of the manager's entry. The same manager might be represented in JSON as an object holding the manager's user ID and full name, in which case the software must look up the manager's entry to resolve the mapping for the manager portion of the JSON resource, in addition to looking up the user's entry. As another example, suppose a large group is represented in LDAP as a set of 100,000 DNs. If the JSON resource is configured so that a member is represented by its name, then listing that resource would involve 100,000 LDAP searches to translate DNs to names.

A primary distinction between LDAP entries and JSON resources is that LDAP entries hold sets of attributes and their values, whereas JSON resources are documents containing arbitrarily nested objects. As LDAP data is governed by schema, almost no LDAP objects are arbitrary collections of data. (LDAP has the object class extensibleObject, but its use should be the exception rather than the rule.) Furthermore, JSON resources can hold arrays, ordered collections that can contain duplicates, whereas LDAP attributes are sets, unordered collections without duplicates. For most directory and identity data, these distinctions do not matter. You are likely to run into them, however, if you try to turn your directory into a document store for arbitrary JSON resources.

Despite some extra cost in terms of system resources, exposing directory data over HTTP can unlock directory services for a new generation of applications. The configuration provides flexible mapping, so that you can configure views that correspond to how client applications need to see directory data.

DS software also gives you a deployment choice for HTTP access. You can deploy the REST to LDAP gateway, which is a Servlet that connects to any standard LDAPv3 directory, or you can activate the HTTP connection handler on a server to allow direct and more efficient HTTP and HTTPS access.

For examples showing how to use RESTful access, see "Performing RESTful Operations" in the Developer's Guide.

This chapter is meant to serve as an introduction, and so does not even cover everything in this guide, let alone everything you might want to know about directory services.

When you have understood enough of the concepts to build the directory services that you want to deploy, you must still build a prototype and test it before you roll out shared, centralized services for your organization. Read "Tuning Servers For Performance" for a look at how to meet the service levels that directory clients expect.

This chapter covers server administration tools. In this chapter you will learn to:

Find and run command-line tools

Understand how command-line tools trust server certificates

Use expressions in the server configuration file

At a protocol level, administration tools and interfaces connect to servers through a different network port than that used to listen for traffic from other client applications.

This chapter takes a quick look at the tools for managing directory services.

This section covers the tools installed with server software.

Before you try the examples in this guide, set your PATH to include the DS server tools. The location of the tools depends on the operating environment and on the packages used to install server software. "Paths To Administration Tools" indicates where to find the tools.

| DS running on... | DS installed from... | Default path to tools... |
|---|---|---|
| Linux distributions | .zip | /path/to/opendj/bin |
| Linux distributions | .deb, .rpm | /opt/opendj/bin |
| Microsoft Windows | .zip | C:\path\to\opendj\bat |

The installation and upgrade tools, setup and upgrade, are in the parent directory of the other tools, because they are not used for everyday administration. For example, if the path to most tools is /path/to/opendj/bin, you can find these tools in /path/to/opendj. For instructions on how to use the installation and upgrade tools, see the Installation Guide.

All DS command-line tools take the --help option.

All commands call Java programs and therefore involve starting a JVM.

"Tools and Server Constraints" indicates the constraints, if any, that apply when using a command-line tool with a server.

| Commands | Constraints |
|---|---|
| | These commands must be used with the local DS server in the same installation as the tools. These commands are not useful with non-DS servers. |
| | These commands must be used with DS servers having the same version as the command. These commands are not useful with non-DS servers. |
| | This command depends on template files. The template files can make use of configuration files installed with DS servers. The LDIF output can be used with any directory server. |
| | These commands can be used independently of DS servers, and so are not tied to a specific version. |

The following list uses the UNIX names for the commands. On Windows, all command-line tools have the extension .bat:

- addrate

Measure add and delete throughput and response time.

For details, see "addrate — measure add and delete throughput and response time" in the Reference.

- authrate

Measure bind throughput and response time.

For details, see "authrate — measure bind throughput and response time" in the Reference.

- backendstat

Debug databases for pluggable backends.

For details, see "backendstat — gather OpenDJ backend debugging information" in the Reference.

- backup

Back up or schedule backup of directory data.

For details, see "backup — back up directory data" in the Reference.

- base64

Encode and decode data in base64 format.

Base64 encoding represents binary data as ASCII text, and can be used, for example, to encode character strings in LDIF.

For details, see "base64 — encode and decode base64 strings" in the Reference.

- changelogstat

Debug file-based changelog databases.

For details, see "changelogstat — debug changelog and changenumber files" in the Reference.

- create-rc-script (UNIX)

Generate a script you can use to start, stop, and restart the server either directly or at system boot and shutdown. Use create-rc-script -f script-file.

This allows you to register and manage DS servers as services on UNIX and Linux systems.

For details, see "create-rc-script — script to manage OpenDJ as a service on UNIX" in the Reference.

- dsconfig

The dsconfig command is the primary command-line tool for viewing and editing DS server configurations. When started without arguments, dsconfig prompts you for administration connection information. Once connected to a running server, it presents you with a menu-driven interface to the server configuration.

To edit the configuration when the server is not running, use the --offline option.

Some advanced properties are not visible by default when you run the dsconfig command interactively. Use the --advanced option to access advanced properties.

When you pass connection information, subcommands, and additional options to dsconfig, the command runs in script mode and so is not interactive.

You can prepare dsconfig batch scripts by running the command with the --commandFilePath option in interactive mode, then reading from the batch file with the --batchFilePath option in script mode. Batch files can be useful when you have many dsconfig commands to run and want to avoid starting the JVM for each command.

Alternatively, you can read commands from standard input by using the --batch option.

For details, see "dsconfig — manage OpenDJ server configuration" in the Reference.

- dsreplication

Configure data replication between directory servers to keep their contents in sync.

For details, see "dsreplication — manage directory data replication" in the Reference.

- encode-password

Encode a cleartext password according to one of the available storage schemes.

For details, see "encode-password — encode a password with a storage scheme" in the Reference.

- export-ldif

Export directory data to LDIF, the standard, portable, text-based representation of directory content.

For details, see "export-ldif — export directory data in LDIF" in the Reference.

- import-ldif

Load LDIF content into the directory, overwriting existing data. It cannot be used to append data to the backend database.

For details, see "import-ldif — import directory data from LDIF" in the Reference.

- ldapcompare

Compare the attribute values you specify with those stored on entries in the directory.

For details, see "ldapcompare — perform LDAP compare operations" in the Reference.

- ldapdelete

Delete one entry or an entire branch of subordinate entries in the directory.

For details, see "ldapdelete — perform LDAP delete operations" in the Reference.

- ldapmodify

Modify the specified attribute values for the specified entries.

For details, see "ldapmodify — perform LDAP modify, add, delete, mod DN operations" in the Reference.

- ldappasswordmodify

Modify user passwords.

For details, see "ldappasswordmodify — perform LDAP password modifications" in the Reference.

- ldapsearch

Search a branch of directory data for entries that match the LDAP filter you specify.

For details, see "ldapsearch — perform LDAP search operations" in the Reference.

- ldifdiff

Display differences between two LDIF files, with the resulting output having LDIF format.

For details, see "ldifdiff — compare small LDIF files" in the Reference.

- ldifmodify

Similar to the ldapmodify command, modify specified attribute values for specified entries in an LDIF file.

For details, see "ldifmodify — apply LDIF changes to LDIF" in the Reference.

- ldifsearch

Similar to the ldapsearch command, search a branch of data in LDIF for entries matching the LDAP filter you specify.

For details, see "ldifsearch — search LDIF with LDAP filters" in the Reference.

- makeldif

Generate directory data in LDIF based on templates that define how the data should appear.

The makeldif command is designed to help generate test data that mimics data expected in production, but without compromising real, potentially private information.

For details, see "makeldif — generate test LDIF" in the Reference.

- manage-account

Lock and unlock user accounts, and view and manipulate password policy state information.

For details, see "manage-account — manage state of OpenDJ server accounts" in the Reference.

- manage-tasks

View information about tasks scheduled to run in the server, and cancel specified tasks.

For details, see "manage-tasks — manage server administration tasks" in the Reference.

- modrate

Measure modification throughput and response time.

For details, see "modrate — measure modification throughput and response time" in the Reference.

- rebuild-index

Rebuild an index stored in an indexed backend.

For details, see "rebuild-index — rebuild index after configuration change" in the Reference.

- restore

Restore data from backup.

For details, see "restore — restore directory data backups" in the Reference.

- searchrate

Measure search throughput and response time.

For details, see "searchrate — measure search throughput and response time" in the Reference.

- start-ds

Start one DS server.

For details, see "start-ds — start OpenDJ server" in the Reference.

- status

Display information about the server.

For details, see "status — display basic OpenDJ server information" in the Reference.

- stop-ds

Stop one DS server.

For details, see "stop-ds — stop OpenDJ server" in the Reference.

- supportextract

Collect troubleshooting information for technical support purposes.

For details, see "supportextract — extract support data" in the Reference.

- verify-index

Verify that an index stored in an indexed backend is not corrupt.

For details, see "verify-index — check index for consistency or errors" in the Reference.

- windows-service (Windows)

Register and manage one DS server as a Windows Service.

For details, see "windows-service — register DS as a Windows Service" in the Reference.

This section describes how DS command-line tools determine whether to trust server certificates.

When DS command-line tools connect securely to a server, the server presents its digital certificate. The tool must then determine whether to trust the server certificate and continue negotiating the secure connection, or not to trust the server certificate and drop the connection.

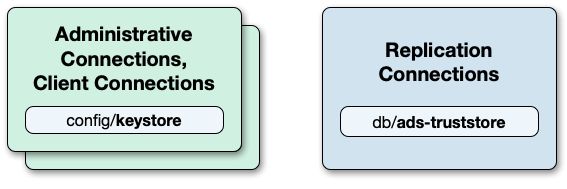

An important part of trusting a server certificate is trusting the signing certificate of the party who signed the server certificate. The process is described in more detail in "About Certificates, Private Keys, and Secret Keys" in the Security Guide.

Put simply, a tool can automatically trust the server certificate if the tool's truststore contains the signing certificate. "Command-Line Tools and Truststores" indicates where to find the truststore. The signing certificate could be a CA certificate, or the server certificate itself if the certificate was self-signed.

When run in interactive mode, DS command-line tools can prompt you to decide whether to trust a server certificate not found in the truststore. If you have not specified a truststore and you choose to trust the certificate permanently, the tools store the certificate in a file. The file is user.home/.opendj/keystore, where user.home is the value of the Java system property of the same name: $HOME on Linux and UNIX, and %USERPROFILE% on Windows. The keystore password is OpenDJ. Neither the file name nor the password can be changed.

When run in non-interactive mode, the tools either rely on the specified truststore, or use this default truststore if none is specified.

| Truststore Option | Truststore Used |
|---|---|
| None | The default truststore, user.home/.opendj/keystore. When you choose interactively to permanently trust a server certificate, the certificate is stored in this truststore. |
| Truststore specified | Only the specified truststore is used. In this case, the tool does not allow you to choose interactively to permanently trust an unrecognized server certificate. |
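As a rough illustration, the following POSIX shell sketch prints the default truststore location described above and, if the file exists and the JDK keytool command is on the PATH, lists the certificates stored in it. It assumes that user.home equals $HOME, which holds on Linux and UNIX; on Windows the location is under %USERPROFILE% instead. The function name default_truststore is hypothetical.

```shell
#!/bin/sh
# Print the default truststore path used by DS command-line tools when no
# truststore is specified: user.home/.opendj/keystore.
# Assumption: user.home is $HOME (Linux and UNIX).
default_truststore() {
  printf '%s/.opendj/keystore\n' "$HOME"
}

ts=$(default_truststore)
echo "Default truststore: $ts"

# List the certificates you chose to trust permanently, if any.
# The keystore password is fixed to OpenDJ and cannot be changed.
if [ -f "$ts" ] && command -v keytool >/dev/null 2>&1; then
  keytool -list -keystore "$ts" -storepass OpenDJ
fi
```

Because the file name and password cannot be changed, a script like this does not need any configuration of its own.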

Property value substitution enables you to achieve the following:

Define a configuration that is specific to a single instance, for example, setting the location of the keystore on a particular host.

Define a configuration whose parameters vary between different environments, for example, the hostnames and passwords for test, development, and production environments.

Disable certain capabilities on specific servers. For example, you might want to disable a database backend and its replication agreement for one set of replicas while enabling it on another set of replicas. This makes it possible to use the same configuration for environments using different data sets.

Property value substitution uses configuration expressions to introduce variables into the server configuration. You set configuration expressions as the values of configuration properties. The effective property values can be evaluated in a number of ways. For more information about property evaluation, see "Expression Evaluation and Order of Precedence".

Note

DS servers only resolve configuration expressions in the config/config.ldif file on LDAP attributes whose names start with ds-cfg-*. These correspond to configuration properties listed in "Properties" in the Configuration Reference.

DS servers do not resolve configuration expressions anywhere else.

DS servers resolve expressions at startup to determine the configuration. DS commands that read the configuration in offline mode also resolve expressions at startup. When you use expressions in the configuration, you must make their values available as described below before starting the server and also when running such commands.

Configuration expressions share their syntax and underlying implementation with other ForgeRock Identity Platform software. Configuration expressions have the following characteristics:

To distinguish them from static values, expression tokens are preceded by an ampersand and enclosed in braces. For example: &{listen.port}. The expression token in the example is listen.port. The . serves as the separator character.

You can use a default value in an expression by including it after a vertical bar following the token. For example, the following expression sets the default listen port value to 1389: &{listen.port|1389}.

A configuration property can include a mix of static values and expressions. For example, suppose hostname is set to directory. Then &{hostname}.example.com evaluates to directory.example.com.

You can define nested properties (that is, a property definition within another property definition). For example, suppose listen.port is set to &{port.prefix}389, and port.prefix is set to 2. Then &{listen.port} evaluates to 2389.

You can read the value of an expression token from a file. For example, if the cleartext password is stored in /path/to/password.txt, the following expression resolves to the cleartext password: &{file:/path/to/password.txt}.

You specify the file either by its absolute path, or by a path relative to the DS instance directory. In other words, if the DS instance directory is /path/to/opendj, then /path/to/opendj/config/keystore.pin and config/keystore.pin reference the same file.
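To make these rules concrete, here is a toy resolver written as a POSIX shell sketch. It is not how DS implements expressions: it consults only environment variables (using the LISTEN_PORT to listen.port name mapping described later in this section), and INSTANCE_DIR is a hypothetical stand-in for the DS instance directory when resolving relative file: paths. The function names lookup_env and resolve_expr are also hypothetical. It does cover default values, static text mixed with expressions, nested values, and file: references.

```shell
#!/bin/sh
# Toy resolver for DS-style configuration expressions (illustration only,
# not the DS implementation). Only environment variables are consulted.
# INSTANCE_DIR is a hypothetical stand-in for the DS instance directory.

# Look up a token in the environment: listen.port -> LISTEN_PORT.
# Fails if the variable is unset or the name is not a valid variable name.
lookup_env() {
  var=$(printf '%s' "$1" | tr 'a-z.' 'A-Z_')
  case $var in ''|[0-9]*|*[!A-Z0-9_]*) return 1 ;; esac
  eval "[ \"\${$var+set}\" = set ]" || return 1
  eval "printf '%s' \"\$$var\""
}

# Resolve every &{...} expression in $1 and print the result.
resolve_expr() {
  s=$1
  i=0
  while [ "$i" -lt 20 ]; do
    # Pick an expression that contains no other expression.
    found=$(printf '%s' "$s" | sed -n 's/.*\(&{[^&{}]*}\).*/\1/p')
    if [ -z "$found" ]; then
      printf '%s\n' "$s"
      return 0
    fi
    body=${found#??}   # strip the leading "&{"
    body=${body%?}     # strip the trailing "}"
    token=${body%%"|"*}
    case $token in
      file:*)
        # Value read from a file; relative paths are taken from INSTANCE_DIR.
        path=${token#file:}
        case $path in /*) ;; *) path=${INSTANCE_DIR:-.}/$path ;; esac
        value=$(cat "$path") || return 1
        ;;
      *)
        if value=$(lookup_env "$token"); then
          :   # found in the environment
        else
          case $body in
            *"|"*) value=${body#*"|"} ;;   # fall back to the default value
            *) echo "cannot resolve &{$token}" >&2; return 1 ;;
          esac
        fi
        ;;
    esac
    # Substitute the value for the first occurrence; any expression inside
    # the value itself (a nested property) is resolved on the next pass.
    s=${s%%"$found"*}$value${s#*"$found"}
    i=$((i + 1))
  done
  echo "too many nested expressions" >&2
  return 1
}
```

For example, with PORT_PREFIX=2 and LISTEN_PORT='&{port.prefix}389' set, resolve_expr '&{listen.port|1389}' prints 2389; with neither set, it prints 1389. The iteration limit guards against a value that refers back to itself.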

DS servers define the following expression tokens by default. You can use these in expressions without explicitly setting their values beforehand:

- ds.instance.dir

The file system directory holding the instance files required to run an instance of a server.

By default, the files are co-located with the product tools, libraries, and configuration files. You can change the location by using the setup --instancePath option.

This evaluates to a directory such as /path/to/my-instance.

- ds.install.dir

The file system directory where the server files are installed.

This evaluates to a directory such as /path/to/opendj.

You must define expression values before starting the DS server that uses them. When evaluated, an expression must return the appropriate type for the configuration property. For example, the listen-port property takes an integer. If you set it using an expression, the result of the evaluated expression must be an integer. If the type is wrong, the server fails to start due to a syntax error.

If the expression cannot be resolved, and there is no default value in the configuration expression, DS also fails to start.
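Because a type mismatch or an unresolvable expression stops the server from starting, it can help to check values before running start-ds. The following sketch checks that a value intended for an integer property such as listen-port is a whole number in the TCP port range 1 to 65535. That range is a general TCP fact, not a DS-specific rule, and check_port is a hypothetical helper name.

```shell
#!/bin/sh
# Pre-flight check before start-ds: is $1 a whole number usable as a port?
check_port() {
  case $1 in
    ''|*[!0-9]*) echo "not an integer: '$1'" >&2; return 1 ;;
  esac
  if [ "$1" -lt 1 ] || [ "$1" -gt 65535 ]; then
    echo "out of range: $1" >&2
    return 1
  fi
}

# Example: validate LISTEN_PORT, which resolves &{listen.port}, if it is set.
if [ -n "${LISTEN_PORT+set}" ]; then
  check_port "$LISTEN_PORT" && echo "LISTEN_PORT=$LISTEN_PORT looks valid"
fi
```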

Expression resolvers evaluate expression tokens to literal values.

Expression resolvers get values from the following sources:

Environment variables

You set an environment variable to hold the value.

For example:

export LISTEN_PORT=1389

The environment variable name must be composed of uppercase characters and underscores. The name maps to the expression token as follows:

Uppercase characters are lower cased.

Underscores, _, are replaced with . characters.

In other words, the value of LISTEN_PORT replaces &{listen.port} in the server configuration.

Java system properties

You set a Java system property to hold the value.

Java system property names must match expression tokens exactly. In other words, the value of the listen.port system property replaces &{listen.port} in the server configuration.

Java system properties can be set in a number of ways. One way of setting system properties for DS servers is to pass them through the OPENDJ_JAVA_ARGS environment variable.

For example:

export OPENDJ_JAVA_ARGS="-Dlisten.port=1389"

Expression files (optional)

You set a key in a .json or .properties file to hold the value. This optional mechanism is set using the DS_ENVCONFIG_DIRS environment variable, or the ds.envconfig.dirs Java system property, as described below.

Keys in .properties files must match expression tokens exactly. In other words, the value of the listen.port key replaces &{listen.port} in the server configuration.

The following example expressions properties file sets the listen port:

listen.port=1389

JSON expression files can contain nested objects.

JSON field names map to expression tokens as follows:

The JSON path name matches the expression token.

The . character serves as the JSON path separator character.

The following example JSON expressions file sets the listen address and listen port:

{
  "listen": {
    "address": "192.168.0.10",
    "port": "1389"
  }
}

In other words, the value of the listen/port field replaces &{listen.port} in the server configuration.

In order to use expression files, set the environment variable DS_ENVCONFIG_DIRS, or the Java system property ds.envconfig.dirs, to a comma-separated list of the directories containing the expression files.

Note the following constraints when using expression files:

Although DS browses the directories in the specified order, within a directory DS scans the files in a non-deterministic order.

DS reads all files with .json and .properties extensions.

DS does not scan subdirectories.

Do not define the same configuration token more than once in a file, as you cannot know in advance which value will be used.

You cannot define the same configuration token in more than one file in a single directory.

If the same token occurs in more than one file in a single directory, an error occurs.

If the same token occurs once in several files which are located in different directories, the first value that DS reads is used.
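A pre-flight lint can catch the duplicate-token case before DS reports an error. The following sketch, a hypothetical helper named check_expression_dirs, walks a comma-separated directory list such as the value of DS_ENVCONFIG_DIRS and reports keys defined more than once within one directory, whether in one file or across files. It assumes key=value lines and checks only .properties files; it does not parse .json files.

```shell
#!/bin/sh
# Report .properties keys defined more than once within one expression
# directory. $1 is a comma-separated list of directories, for example the
# value of DS_ENVCONFIG_DIRS. Assumes key=value lines; .json is not checked.
check_expression_dirs() {
  status=0
  old_ifs=$IFS
  IFS=,
  for dir in $1; do
    IFS=$old_ifs
    dups=$(
      for f in "$dir"/*.properties; do
        [ -f "$f" ] || continue
        # Print the key of each key=value line, skipping # and ! comments.
        sed -n -e '/^[[:space:]]*[#!]/d' \
          -e 's/^[[:space:]]*\([^=[:space:]][^=[:space:]]*\)[[:space:]]*=.*/\1/p' "$f"
      done | sort | uniq -d
    )
    if [ -n "$dups" ]; then
      printf '%s: token defined more than once:\n%s\n' "$dir" "$dups" >&2
      status=1
    fi
  done
  IFS=$old_ifs
  return "$status"
}

# Example: check_expression_dirs "$DS_ENVCONFIG_DIRS"
```

The same key in different directories is not reported, because DS accepts that case and uses the first value it reads.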

The order in which the value sources are listed above reflects their order of precedence:

Environment variables override system properties, default token settings, and settings in any expression files.

System properties override default token settings, and any settings in expression files.

If DS_ENVCONFIG_DIRS or ds.envconfig.dirs is set, then the server uses settings found in expression files.

Default token settings (ds.config.dir, ds.instance.dir, ds.install.dir).
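The following sketch models this order of precedence for a single token. It is an illustration under stated assumptions, not the DS resolver: system properties are approximated by scanning OPENDJ_JAVA_ARGS for -Dtoken=value words, only .properties expression files are read, the first match across directories wins, and default tokens are not modeled. resolve_token is a hypothetical name.

```shell
#!/bin/sh
# Sketch of the documented order of precedence for one token:
#   1. environment variable (listen.port -> LISTEN_PORT)
#   2. Java system property, approximated as a -Dtoken=value word in
#      OPENDJ_JAVA_ARGS (naive whitespace splitting)
#   3. key=value in a .properties file under DS_ENVCONFIG_DIRS
# Default tokens and .json files are not modeled.
resolve_token() {
  token=$1

  # 1. Environment variable
  var=$(printf '%s' "$token" | tr 'a-z.' 'A-Z_')
  case $var in
    ''|[0-9]*|*[!A-Z0-9_]*) ;;   # not a valid variable name: skip
    *)
      if eval "[ \"\${$var+set}\" = set ]"; then
        eval "printf '%s\n' \"\$$var\""
        return 0
      fi
      ;;
  esac

  # 2. System property passed through OPENDJ_JAVA_ARGS
  for arg in ${OPENDJ_JAVA_ARGS-}; do
    case $arg in
      -D"$token"=*) printf '%s\n' "${arg#*=}"; return 0 ;;
    esac
  done

  # 3. Expression files, directory by directory; first match wins
  old_ifs=$IFS
  IFS=,
  for dir in ${DS_ENVCONFIG_DIRS-}; do
    IFS=$old_ifs
    for f in "$dir"/*.properties; do
      [ -f "$f" ] || continue
      value=$(awk -v k="$token" \
        'index($0, k "=") == 1 { print substr($0, length(k) + 2); exit }' "$f")
      if [ -n "$value" ]; then
        printf '%s\n' "$value"
        return 0
      fi
    done
  done
  IFS=$old_ifs
  return 1
}
```

For example, if a properties file sets listen.port=3389 and OPENDJ_JAVA_ARGS contains -Dlisten.port=2389, resolve_token listen.port prints 2389; exporting LISTEN_PORT=1389 as well changes the result to 1389.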

For an embedded DS server, you can change the expression resolvers in the server configuration. For details, see the DS server Java API.

A single expression token can evaluate to multiple property values. Such expressions are useful with multivalued properties.

For example, suppose you choose to set a connection handler's ssl-cipher-suite property. Instead of listing cipher suites individually, you use an ssl.cipher.suites token that takes multiple values. The following example commands set the token value in the environment, stop the server, use the expression in the LDAP connection handler configuration while the server is offline, and then start the server again:

$ export SSL_CIPHER_SUITES=\
TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384,\
TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384,\
TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256,\
TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,\
TLS_EMPTY_RENEGOTIATION_INFO_SCSV
$ stop-ds --quiet
$ dsconfig \
 set-connection-handler-prop \
 --offline \
 --handler-name LDAP \
 --add ssl-protocol:TLSv1.2 \
 --add ssl-cipher-suite:'&{ssl.cipher.suites}' \
 --no-prompt
$ start-ds --quiet

Multiple values are separated by commas in environment variables, system properties, and properties files. They are formatted as arrays in JSON files.

You can set the value of the ssl.cipher.suites token in the following ways:

- Environment Variable

export SSL_CIPHER_SUITES=\
TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384,\
TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384,\
TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256,\
TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,\
TLS_EMPTY_RENEGOTIATION_INFO_SCSV

- System Property

export OPENDJ_JAVA_ARGS="-Dssl.cipher.suites=\
TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384,\
TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384,\
TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256,\
TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,\
TLS_EMPTY_RENEGOTIATION_INFO_SCSV"

- Properties File

ssl.cipher.suites=\
TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384,\
TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384,\
TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256,\
TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,\
TLS_EMPTY_RENEGOTIATION_INFO_SCSV

- JSON Expressions File

{
  "ssl.cipher.suites": [
    "TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384",
    "TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384",
    "TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256",
    "TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256",
    "TLS_EMPTY_RENEGOTIATION_INFO_SCSV"
  ]
}

Alternative JSON expressions file that sets ssl.protocol as well:

{
  "ssl": {
    "protocol": "TLSv1.2",
    "cipher.suites": [
      "TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384",
      "TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384",
      "TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256",
      "TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256",
      "TLS_EMPTY_RENEGOTIATION_INFO_SCSV"
    ]
  }
}

In order to fully use the settings in this file, you would have to change the example command to use the additional expression, --add ssl-protocol:'&{ssl.protocol}', in place of --add ssl-protocol:TLSv1.2.

When the server evaluates &{ssl.cipher.suites}, the result is the following property values:

ssl-cipher-suite: TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384
ssl-cipher-suite: TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384
ssl-cipher-suite: TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256
ssl-cipher-suite: TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256
ssl-cipher-suite: TLS_EMPTY_RENEGOTIATION_INFO_SCSV
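The mapping from one comma-separated value to several property values can be mimicked in a few lines of shell. This sketch only reproduces the documented result for the ssl-cipher-suite example; it does not call DS, and skipping empty elements is an assumption of the sketch. expand_multivalued is a hypothetical helper name.

```shell
#!/bin/sh
# Mimic how one comma-separated token value becomes several values of a
# multivalued property: one "property: value" line per element.
# Skipping empty elements is an assumption of this sketch.
expand_multivalued() {
  # $1 = property name, $2 = comma-separated token value
  printf '%s\n' "$2" | tr ',' '\n' | sed -e '/^$/d' -e "s/^/$1: /"
}

suites=TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384,TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384
expand_multivalued ssl-cipher-suite "$suites"
```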

You can debug configuration expressions by following the instructions in "Enabling Debug Logging". Create a debug target for org.forgerock.config.resolvers. The following example demonstrates the process:

$ dsconfig \
 create-debug-target \
 --hostname opendj.example.com \
 --port 4444 \
 --bindDN "cn=Directory Manager" \
 --bindPassword password \
 --publisher-name "File-Based Debug Logger" \
 --type generic \
 --target-name org.forgerock.config.resolvers \
 --set enabled:true \
 --trustAll \
 --no-prompt
$ dsconfig \
 set-log-publisher-prop \
 --hostname opendj.example.com \
 --port 4444 \
 --bindDN "cn=Directory Manager" \
 --bindPassword password \
 --publisher-name "File-Based Debug Logger" \
 --set enabled:true \
 --trustAll \
 --no-prompt
$ stop-ds --restart --quiet

When the server starts, it logs debugging messages for configuration expressions. Do not leave debug logging enabled in production systems.

This chapter covers starting and stopping DS servers. In this chapter you will learn to:

Start, restart, and stop servers using the command-line tools or system service integration on Linux and Windows systems

Understand server tasks and how to schedule them

Understand how to recognize that a server is recovering from a crash or abrupt shutdown

Configure whether a directory server loads data into cache at server startup before accepting client connections

Use one of the following techniques:

Use the start-ds command, described in "start-ds — start OpenDJ server" in the Reference:

$ start-ds

Alternatively, you can specify the --no-detach option to start the server in the foreground.

(Linux) If the DS server was installed from a .deb or .rpm package, then service management scripts were created at setup time.

Use the service opendj start command:

centos# service opendj start
Starting opendj (via systemctl): [ OK ]

ubuntu$ sudo service opendj start
$Starting opendj: > SUCCESS.

(UNIX) Create an RC script by using the create-rc-script command, described in "create-rc-script — script to manage OpenDJ as a service on UNIX" in the Reference, and then use the script to start the server.

Unless you run DS servers on Linux as root, use the --userName userName option to specify the user who installed the server:

$ sudo create-rc-script \
 --outputFile /etc/init.d/opendj \
 --userName opendj
$ sudo /etc/init.d/opendj start

For example, if you run the DS server on Linux as root, you can use the RC script to start the server at system boot, and stop the server at system shutdown:

$ sudo update-rc.d opendj defaults
update-rc.d: warning: /etc/init.d/opendj missing LSB information
update-rc.d: see <http://wiki.debian.org/LSBInitScripts>
 Adding system startup for /etc/init.d/opendj ...
 /etc/rc0.d/K20opendj -> ../init.d/opendj
 /etc/rc1.d/K20opendj -> ../init.d/opendj
 /etc/rc6.d/K20opendj -> ../init.d/opendj
 /etc/rc2.d/S20opendj -> ../init.d/opendj
 /etc/rc3.d/S20opendj -> ../init.d/opendj
 /etc/rc4.d/S20opendj -> ../init.d/opendj
 /etc/rc5.d/S20opendj -> ../init.d/opendj

(Windows) Register DS servers as Windows Services by using the windows-service command, described in "windows-service — register DS as a Windows Service" in the Reference. Manage the service through Windows administration tools. The following example registers the DS server as a Windows Service:

C:\path\to\opendj\bat> windows-service.bat --enableService

By default, DS servers save a compressed version of the server configuration used on successful startup. This ensures that the server provides a last known good configuration, which can be used as a reference or copied into the active configuration if the server fails to start with the current active configuration. It is possible, though not usually recommended, to turn this behavior off by changing the global server setting save-config-on-successful-startup to false.

Although DS servers are designed to recover from failure and disorderly shutdown, it is safer to shut the server down cleanly, because a clean shutdown reduces startup delays during which the server attempts to recover database backend state, and prevents situations where the server cannot recover automatically.

Follow these steps to shut down the DS server cleanly:

(Optional) If you are stopping a replicated server permanently, for example, before decommissioning the underlying system or virtual machine, first remove the server from the replication topology:

$ dsreplication \
 unconfigure \
 --unconfigureAll \
 --port 4444 \
 --hostname opendj.example.com \
 --adminUID admin \
 --adminPassword password \
 --trustAll \
 --no-prompt

This step unregisters the server from the replication topology, effectively removing its replication configuration from other servers. This step must be performed before you decommission the system, because the server must connect to its peers in the replication topology.

Before shutting down the system where the server is running, and before detaching any storage used for directory data, cleanly stop the server using one of the following techniques:

Use the stop-ds command, described in "stop-ds — stop OpenDJ server" in the Reference:

$ stop-ds

(Linux) If the DS server was installed from a .deb or .rpm package, then service management scripts were created at setup time.

Use the service opendj stop command:

centos# service opendj stop
Stopping opendj (via systemctl): [ OK ]

ubuntu$ sudo service opendj stop
$Stopping opendj: ... > SUCCESS.

(UNIX) Create an RC script, and then use the script to stop the server:

$ sudo create-rc-script \
 --outputFile /etc/init.d/opendj \
 --userName opendj
$ sudo /etc/init.d/opendj stop

(Windows) Register the DS server as a Windows Service, and then manage the service through Windows administration tools:

C:\path\to\opendj\bat> windows-service.bat --enableService

Do not intentionally kill the DS server process unless the server is completely unresponsive.

When stopping cleanly, the server writes state information to database backends, and releases locks that it holds on database files.

Use one of the following techniques:

Use the stop-ds command:

$ stop-ds --restart

(Linux) If the DS server was installed from a .deb or .rpm package, then service management scripts were created at setup time.

Use the service opendj restart command:

centos# service opendj restart
Restarting opendj (via systemctl): [ OK ]

ubuntu$ sudo service opendj restart
$Stopping opendj: ... > SUCCESS.
$Starting opendj: > SUCCESS.

(UNIX) Create an RC script, and then use the script to restart the server:

$ sudo create-rc-script \
 --outputFile /etc/init.d/opendj \
 --userName opendj
$ sudo /etc/init.d/opendj restart

(Windows) Register the DS server as a Windows Service, and then manage the service through Windows administration tools:

C:\path\to\opendj\bat> windows-service.bat --enableService

The following server administration commands can be run in online and offline mode. They invoke data-intensive operations, and so potentially take a long time to complete. The links below are to the reference documentation for each command:

"backup — back up directory data" in the Reference

"export-ldif — export directory data in LDIF" in the Reference

"import-ldif — import directory data from LDIF" in the Reference

"restore — restore directory data backups" in the Reference

"rebuild-index — rebuild index after configuration change" in the Reference

When you run these commands in online mode, they run as tasks on the server. Server tasks are scheduled operations that can run one or more times as long as the server is up. For example, you can schedule the backup and export-ldif commands to run recurrently in order to back up server data on a regular basis. See the command's reference documentation for details.

You schedule a task as a directory administrator, sending the request to the administration port. You can therefore schedule a task on a remote server if you choose. When you schedule a task on a server, the command returns immediately, yet the task can start later, and might run for a long time before it completes. You can manage tasks by using the manage-tasks command, described in "manage-tasks — manage server administration tasks" in the Reference.

Although you can schedule a server task on a remote server, the data for the task must be accessible to the server locally. For example, when you schedule a backup task on a remote server, that server writes backup files to a file system on the remote server. Similarly, when you schedule a restore task on a remote server, that server restores backup files from a file system on the remote server.

The reference documentation describes the available options for each command:

Configure email notification for success and failure

Define alternatives on failure

Start tasks immediately (--start 0)

Schedule tasks to start at any time in the future

DS servers are generally resilient when restarting after a crash or after the server process is killed abruptly. After a disorderly shutdown, the DS server might have to replay the last few entries in a transaction log. Generally, DS servers return to service quickly.

Database recovery messages are found in the database log file, such as /path/to/opendj/db/userRoot/dj.log.

The following example shows two example messages from the recovery log. The first message is written at the beginning of the recovery process. The second message is written at the end of the process:

[/path/to/opendj/db/userRoot]Recovery underway, found end of log ...
[/path/to/opendj/db/userRoot]Recovery finished: Recovery Info ...
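To check from a script whether a backend has finished recovering, you can look for the most recent of these two messages in its database log. The following sketch assumes the message text shown above and the dj.log location from this section; recovery_state is a hypothetical helper name. Because a log can hold messages from earlier recoveries, it reports only the last one.

```shell
#!/bin/sh
# Print the most recent recovery state in a backend's database log:
# "underway", "finished", or "none logged". Returns 1 if the log is missing.
recovery_state() {
  log=$1
  [ -f "$log" ] || { echo "log not found: $log" >&2; return 1; }
  last=$(grep -E 'Recovery (underway|finished)' "$log" | tail -n 1)
  case $last in
    *'Recovery finished'*) echo finished ;;
    *'Recovery underway'*) echo underway ;;
    *) echo 'none logged' ;;
  esac
}

# Example: recovery_state /path/to/opendj/db/userRoot/dj.log
```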

Directory data cached in memory is lost during a crash. Loading directory data into memory when the server starts can take time. DS servers start accepting client requests before this process is complete.

This chapter covers management of LDAP Data Interchange Format (LDIF) data. In this chapter you will learn to:

Generate test LDIF data

Import and export LDIF data

Perform searches and modifications on LDIF files with command-line tools

Create and manage database backends to house directory data imported from LDIF

Delete database backends

LDIF provides a mechanism for representing directory data in text format. LDIF data is typically used to initialize directory databases, but also may be used to move data between different directories that cannot replicate directly, or even as an alternative backup format.

When you install DS directory servers, you have the option of importing sample data that is generated during the installation. This procedure demonstrates how to generate LDIF by using the makeldif command, described in "makeldif — generate test LDIF" in the Reference.

The makeldif command uses templates to provide sample data. Default templates are located in the /path/to/opendj/config/MakeLDIF/ directory. The example.template file can be used to create a suffix with entries of the type inetOrgPerson.

Write a file to act as the template for your generated LDIF.

The resulting test data template depends on what data you expect to encounter in production. Base your work on your knowledge of the production data, and on the sample template, /path/to/opendj/config/MakeLDIF/example.template, and associated data.

See "makeldif.template — template file for the makeldif command" in the Reference for reference information about template files.

Create additional data files for the content in your template to be selected randomly from a file, rather than generated by an expression.

Additional data files are located in the same directory as your template file.

Decide whether you want to generate the same test data each time you run the makeldif command with your template.

If so, provide the same randomSeed integer each time you run the command.

Before generating a very large LDIF file, make sure you have enough space on disk.

Run the makeldif command to generate your LDIF file.

The following command demonstrates use of the example MakeLDIF template:

$ makeldif \
 --outputLDIF generated.ldif \
 --randomSeed 42 \
 /path/to/opendj/config/MakeLDIF/example.template
...
LDIF processing complete.
...

The following procedures demonstrate how to use the import-ldif and export-ldif commands, described in "import-ldif — import directory data from LDIF" in the Reference and "export-ldif — export directory data in LDIF" in the Reference.

The most efficient method of importing LDIF data is to take the DS server offline. Alternatively, you can schedule a task to import the data while the server is online.

Note

Importing from LDIF overwrites all data in the target backend with entries from the LDIF data.

The following example imports dc=example,dc=com data into the dsEvaluation backend, overwriting existing data:

If you want to speed up the process, for example because you have millions of directory entries to import, first shut down the server, and then run the import-ldif command:

$ stop-ds --quiet
$ import-ldif \
 --offline \
 --backendID dsEvaluation \
 --includeBranch dc=example,dc=com \
 --ldifFile generated.ldif

If not, schedule a task to import the data while online:

$ start-ds --quiet
$ import-ldif \
 --hostname opendj.example.com \
 --port 4444 \
 --bindDN "cn=Directory Manager" \
 --bindPassword password \
 --backendID dsEvaluation \
 --includeBranch dc=example,dc=com \
 --ldifFile generated.ldif \
 --trustAll

The import task is scheduled through a secure connection to the administration port, by default 4444. You can schedule the import task to start at a particular time using the --start option.

The --trustAll option trusts all SSL certificates, such as a self-signed DS server certificate.

If the server is replicated with other servers, initialize replication again after the successful import.

For details see "Initializing Replicas".

Initializing replication overwrites data in the remote servers in the same way that import overwrites existing data with LDIF data.

If the LDIF was exported from a server rather than generated using the makeldif command, it may contain pre-encoded passwords. By default, password policies do not allow you to use pre-encoded passwords, but you can change this behavior by changing the password policy's configuration property, allow-pre-encoded-passwords. Furthermore, the LDIF may include passwords encrypted using a reversible storage scheme, such as AES or Blowfish. As described in "Configuring Password Storage", in order to decrypt the passwords, the server must be a replica of the server that encrypted the passwords.

The following examples export dc=example,dc=com data from the dsEvaluation backend:

To expedite export, shut down the server and then use the export-ldif command:

$ stop-ds --quiet
$ export-ldif \
 --offline \
 --backendID dsEvaluation \
 --includeBranch dc=example,dc=com \
 --ldifFile backup.ldif

To export the data while online, leave the server running and schedule a task:

$ export-ldif \
 --hostname opendj.example.com \
 --port 4444 \
 --bindDN "cn=Directory Manager" \
 --bindPassword password \
 --backendID dsEvaluation \
 --includeBranch dc=example,dc=com \
 --ldifFile backup.ldif \
 --start 0 \
 --trustAll

The --start 0 option tells the directory server to start the export task immediately.

You can specify a time for the task to start using the format yyyymmddHHMMSS. For example, 20250101043000 specifies a start time of 4:30 AM on January 1, 2025.

If the server is not running at the time specified, it attempts to perform the task after it restarts.
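When scripting scheduled tasks, it can help to build and check --start values before passing them to a command such as export-ldif. The following sketch accepts the two forms documented here, 0 and yyyymmddHHMMSS, with basic range checks on the month, day, and time fields. It formats the current time from the local clock; check the command reference for how the server interprets time zones. The helper names start_time_now and is_valid_start are hypothetical.

```shell
#!/bin/sh
# Current local time in yyyymmddHHMMSS format.
start_time_now() {
  date +%Y%m%d%H%M%S
}

# Succeed if $1 is 0 (start now) or a 14-digit yyyymmddHHMMSS value with
# plausible field ranges. Does not check the number of days per month.
is_valid_start() {
  [ "$1" = 0 ] && return 0
  case $1 in
    [0-9][0-9][0-9][0-9][0-9][0-9][0-9][0-9][0-9][0-9][0-9][0-9][0-9][0-9]) ;;
    *) return 1 ;;
  esac
  mm=$(printf '%s' "$1" | cut -c5-6)
  dd=$(printf '%s' "$1" | cut -c7-8)
  hh=$(printf '%s' "$1" | cut -c9-10)
  mi=$(printf '%s' "$1" | cut -c11-12)
  ss=$(printf '%s' "$1" | cut -c13-14)
  [ "$mm" -ge 1 ] && [ "$mm" -le 12 ] &&
    [ "$dd" -ge 1 ] && [ "$dd" -le 31 ] &&
    [ "$hh" -le 23 ] && [ "$mi" -le 59 ] && [ "$ss" -le 59 ]
}

when=$(start_time_now)
is_valid_start "$when" && printf '%s\n' "--start $when"
```

For example, you might pass --start "$(start_time_now)" along with the other export-ldif options shown above.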

This section demonstrates the command-line tools for working with LDIF:

ldifdiff, demonstrated in "Comparing LDIF Files".

ldifmodify, demonstrated in "Updating LDIF".

ldifsearch, demonstrated in "Searching LDIF".

The ldifsearch command is to LDIF files what the ldapsearch command is to directory servers:

$ ldifsearch \
 --baseDN dc=example,dc=com \
 generated.ldif \
 "(sn=Grenier)" \
 uid
dn: uid=user.4630,ou=People,dc=example,dc=com
uid: user.4630

The ldif-file argument replaces the --hostname and --port options used to connect to an LDAP directory. Otherwise, the command syntax and LDIF output are familiar to ldapsearch users.
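To show why LDIF tools can work on plain files, the following awk sketch prints the DNs of entries in which an attribute has a given value, relying on LDIF entries being separated by blank lines. It is only a toy stand-in for ldifsearch: it ignores base DNs, folded lines, base64-encoded values, and filters other than simple equality, and it compares values case-insensitively. ldif_equal_dns is a hypothetical helper name.

```shell
#!/bin/sh
# Toy equality search over an LDIF file: print the DN of each entry in
# which attribute $2 has value $3 (case-insensitive).
# Usage: ldif_equal_dns FILE ATTRIBUTE VALUE
ldif_equal_dns() {
  awk -v attr="$2" -v val="$3" '
    BEGIN { RS = ""; FS = "\n"; attr = tolower(attr); val = tolower(val) }
    {
      # Each record is one entry; each field is one "name: value" line.
      dn = ""; hit = 0
      for (i = 1; i <= NF; i++) {
        sep = index($i, ": ")
        if (sep == 0) continue
        name = tolower(substr($i, 1, sep - 1))
        value = substr($i, sep + 2)
        if (name == "dn") dn = value
        else if (name == attr && tolower(value) == val) hit = 1
      }
      if (hit && dn != "") print dn
    }' "$1"
}
```

For example, ldif_equal_dns generated.ldif sn Grenier prints the DN of each entry whose sn is Grenier.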

The ldifmodify command lets you apply changes to LDIF files, generating a new, changed version of the original file:

$ cat changes.ldif
dn: uid=user.0,ou=People,dc=example,dc=com
changetype: modify
replace: description
description: New description.
-
replace: initials
initials: ZZZ

$ ldifmodify \
  --outputLDIF new.ldif \
  generated.ldif \
  changes.ldif

The resulting target LDIF file is approximately the same size as the source LDIF file, but the order of entries in the file is not guaranteed to be identical.
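Because the entry order can differ, a line-by-line comparison of the two files is not a reliable check. For a quick check that two LDIF files contain the same entries, you can compare their sorted DN lists. The following sketch uses standard tools only and makes two assumptions. First, DNs appear on plain "dn:" lines, not base64-encoded "dn::" lines. Second, DNs are compared as literal strings, with no normalization of case or spacing. The function names are hypothetical. For attribute-level differences, use ldifdiff instead.

```shell
#!/usr/bin/env bash
# Print the DNs of an LDIF file, one per line, in byte order.
# LDIF folds long lines: a line starting with a single space continues
# the previous line, so unfold first, then keep only plain "dn: " lines.
ldif_dns() {
  awk '
    /^ / { buf = buf substr($0, 2); next }  # continuation: append without the leading space
    { if (NR > 1) print buf; buf = $0 }     # new logical line: emit the previous one
    END { if (NR > 0) print buf }
  ' "$1" | sed -n 's/^dn: //p' | LC_ALL=C sort
}

# Print DNs found in only one of the two files (comm requires sorted input).
# No output means both files contain the same set of DNs.
compare_dns() {
  LC_ALL=C comm -3 <(ldif_dns "$1") <(ldif_dns "$2")
}

# Usage with the files from the ldifmodify example:
# compare_dns generated.ldif new.ldif
```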

The ldifdiff command reports differences between two LDIF files in LDIF format:

$ ldifdiff generated.ldif new.ldif
dn: uid=user.0,ou=People,dc=example,dc=com
changetype: modify
delete: description
description: This is the description for Aaccf Amar.
-
add: description
description: New description.
-
delete: initials
initials: AAA
-
add: initials
initials: ZZZ
-

The ldifdiff command reads both files into memory and constructs tree maps to perform the comparison. It is designed to work with small files and fragments, and can quickly run out of memory when calculating differences between large files.

For each LDIF tool, a double dash, --, signifies the end of command options, after which only trailing arguments are allowed. To indicate standard input as a trailing argument, use a bare dash, -, after the double dash.

How bare dashes can be used after a double dash depends on the tool:

- ldifdiff

The bare dash can replace either the source LDIF file, or the target LDIF file argument.

To take source LDIF from standard input, use the following construction:

ldifdiff [options] -- - target.ldif

To take target LDIF from standard input, use the following construction:

ldifdiff [options] -- source.ldif -

- ldifmodify

The bare dash can replace either the source LDIF file, or the changes LDIF file arguments.

To take source LDIF from standard input, use the following construction:

ldifmodify [options] -- - changes.ldif [changes.ldif ...]

To take changes LDIF from standard input, use the following construction:

ldifmodify [options] -- source.ldif -

- ldifsearch

The bare dash can be used to take the source LDIF from standard input by using the following construction:

ldifsearch [options] -- - filter [attributes ...]

A DS directory server stores data in a backend. A backend is a private server repository that can be implemented in memory, as a file, or as an embedded database.

Database backends are designed to hold large amounts of user data. DS servers have tools for backing up and restoring database backends, as described in "Backing Up and Restoring Data". By default, a directory server stores your data in a database backend named userRoot, unless you use a setup profile.

When installing the server and importing user data, and when creating a database backend, you choose the backend type. DS directory servers use JE backends for local data.

The JE backend type is implemented using B-tree data structures. It stores data as key-value pairs, which is different from the relational model exposed to clients of relational databases.

Important

Do not compress, tamper with, or otherwise alter backend database files directly unless specifically instructed to do so by a qualified ForgeRock technical support engineer. External changes to backend database files can render them unusable by the server. By default, backend database files are located under the /path/to/opendj/db directory.

When backing up backend databases at the file system level rather than using the backup command, be aware that you may need to stop the server before running the backup procedure. A database backend performs internal cleanup operations that change the database log files even when there are no pending client or replication operations. An ongoing file system backup operation may therefore record database log files that are not in sync with each other. Such inconsistencies make it impossible for the backend database to recover after the database log files are restored. When the directory server is online during file system backup, successful recovery after restore can only be guaranteed if the backup operation took a fully atomic snapshot, capturing the state of all files at exactly the same time. If the recursive file system copy takes a true snapshot, you may perform the backup with the DS server online. Otherwise, if the file system copy is not a fully atomic snapshot, then you must stop the DS server before performing the backup operation.

A JE backend stores data on disk using append-only log files with names like number.jdb. The JE backend writes updates to the highest-numbered log file. The log files grow until they reach a specified size (default: 1 GB). When the current log file reaches the specified size, the JE backend creates a new log file.

To avoid an endless increase in database size on disk, JE backends clean their log files in the background. A cleaner thread copies active records to new log files. Log files that no longer contain active records are deleted.
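To observe this behavior on a running server, you can list a backend's log files without touching them. The following sketch makes two assumptions. First, a backend's JE log files sit in a single directory, such as a per-backend subdirectory under /path/to/opendj/db; check your installation. Second, the file names are zero-padded hexadecimal numbers, so a byte-order sort places the highest-numbered file last. The sketch reads only file names and never opens the files, in keeping with the warning not to alter them. The function name je_log_summary is hypothetical.

```shell
#!/usr/bin/env bash
# Report how many JE log files (*.jdb) a directory holds and which one
# is currently written to. Read-only: inspects file names only.
je_log_summary() {
  local dir=$1
  local logs
  # Zero-padded hex names sort numerically in byte order (LC_ALL=C).
  logs=$(find "$dir" -maxdepth 1 -type f -name '*.jdb' | LC_ALL=C sort)
  if [ -z "$logs" ]; then
    echo "no .jdb files in $dir" >&2
    return 1
  fi
  echo "log files: $(printf '%s\n' "$logs" | wc -l | tr -d ' ')"
  # The backend appends updates to the highest-numbered file.
  echo "current:   $(basename "$(printf '%s\n' "$logs" | tail -n 1)")"
}

# Hypothetical usage; adjust the path to your backend's directory:
# je_log_summary /path/to/opendj/db/userRoot
```

Running it periodically shows new files appearing as the current file fills, and older files disappearing as the cleaner removes them.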

By default, JE backends let the operating system cache data for a period of time before flushing it to disk. This default gives up full durability, which requires more disk I/O, in exchange for better performance with less disk I/O. With this setting, the most recent updates not yet written to disk can be lost if the underlying OS or hardware fails. If necessary, you can change this behavior by setting the advanced JE backend property db-durability with the dsconfig set-backend-prop command.

When a JE backend is opened, it recovers by recreating its B-tree structure from its log files. This is a normal process, one that allows the backend to recover after an orderly shutdown or after a crash.

Due to the cleanup processes, JE backends can be actively writing to disk even when there are no pending client or replication operations. For this reason, unless the file system snapshot is fully atomic, you must stop the server before taking the snapshot.

In addition to the cleanup process, JE backends periodically run checksum verification on the database logs. If the verification process detects backend database corruption, the server logs an error message and takes the backend offline. In this case, restore the corrupted backend from backup so that it can be used again. By default, the verification runs every night at midnight local time. If necessary, you can change this behavior by adjusting the advanced settings db-run-log-verifier and db-log-verifier-schedule with the dsconfig set-backend-prop command.

You can create new backends using the dsconfig create-backend command, described in "create-backend" in the Configuration Reference. When you create a backend, choose the type of backend that fits your purpose.

The following example creates a database backend named exampleOrgBackend. The backend is of type je, which relies on a JE database for data storage and indexing:

$ dsconfig \
  create-backend \
  --hostname opendj.example.com \
  --port 4444 \
  --bindDN "cn=Directory Manager" \
  --bindPassword password \
  --backend-name exampleOrgBackend \
  --type je \
  --set enabled:true \
  --set base-dn:dc=example,dc=org \
  --trustAll \
  --no-prompt

After creating the backend, you can view the settings as in the following example:

$ dsconfig \
  get-backend-prop \
  --hostname opendj.example.com \
  --port 4444 \
  --bindDN "cn=Directory Manager" \
  --bindPassword password \
  --backend-name exampleOrgBackend \
  --trustAll \
  --no-prompt
Property                : Value(s)
------------------------:---------------------
backend-id              : exampleOrgBackend
base-dn                 : "dc=example,dc=org"
cipher-key-length       : 128
cipher-transformation   : AES/CBC/PKCS5Padding
compact-encoding        : true
confidentiality-enabled : false
db-cache-percent        : 50
db-cache-size           : 0 b
db-directory            : db
enabled                 : true
writability-mode        : enabled

When you create a new backend using the dsconfig command, DS directory servers create the following indexes automatically:

Attribute         Index type
aci               presence
ds-sync-conflict  equality
ds-sync-hist      ordering
entryUUID         equality
objectClass       equality

You can create additional indexes as described in "Configuring and Rebuilding Indexes".

In some cases, such as directory services with subtree replication, you might choose to split directory data across multiple backends.

In the example in this section, the exampleData backend holds all data under dc=example,dc=com except ou=People,dc=example,dc=com. A separate peopleData backend holds data under ou=People,dc=example,dc=com. Replication for these backends is configured separately as described in "Subtree Replication".

The following example assumes you perform the steps when initially setting up the directory data. It uses the sample data from "Generating Test Data":

# Create a backend for the data not in the sub-suffix:
$ dsconfig \
  create-backend \
  --hostname opendj.example.com \
  --port 4444 \
  --bindDN "cn=Directory Manager" \
  --bindPassword password \
  --backend-name exampleData \
  --type je \
  --set enabled:true \
  --set base-dn:dc=example,dc=com \
  --trustAll \
  --no-prompt

# Create a backend for the data in the sub-suffix:
$ dsconfig \
  create-backend \
  --hostname opendj.example.com \
  --port 4444 \
  --bindDN "cn=Directory Manager" \
  --bindPassword password \
  --backend-name peopleData \
  --type je \
  --set enabled:true \
  --set base-dn:ou=People,dc=example,dc=com \
  --trustAll \
  --no-prompt

# Import data not in the sub-suffix:
$ import-ldif \
  --hostname opendj.example.com \
  --port 4444 \
  --bindDN "cn=Directory Manager" \
  --bindPassword password \
  --backendID exampleData \
  --excludeBranch ou=People,dc=example,dc=com \
  --ldifFile generated.ldif \
  --trustAll

# Import data in the sub-suffix:
$ import-ldif \
  --hostname opendj.example.com \
  --port 4444 \
  --bindDN "cn=Directory Manager" \
  --bindPassword password \
  --backendID peopleData \
  --includeBranch ou=People,dc=example,dc=com \
  --ldifFile generated.ldif \
  --trustAll
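Before running the imports, you can estimate how many entries each backend should receive, which is useful when checking the import results. The following sketch counts the entries in an LDIF file whose DN equals or ends with a given sub-suffix, and the entries that do not. It makes three assumptions. First, DNs appear on plain "dn:" lines, folded or not, rather than base64-encoded "dn::" lines. Second, matching is a case-insensitive string comparison with no DN normalization, so, for example, extra spaces after commas would defeat it. Third, its counts approximate what --includeBranch and --excludeBranch select. The function name count_split is hypothetical.

```shell
#!/usr/bin/env bash
# Count LDIF entries under a sub-suffix (including the sub-suffix entry)
# and entries elsewhere.
count_split() {
  local ldif=$1 subsuffix=$2
  awk -v sub_dn="$subsuffix" '
    # Unfold LDIF continuation lines (a leading single space).
    /^ / { buf = buf substr($0, 2); next }
    { check(buf); buf = $0 }
    END { check(buf); printf "in %s: %d\nelsewhere: %d\n", sub_dn, inside, outside }
    function check(line,   dn, s) {
      if (line !~ /^dn: /) return
      dn = tolower(substr(line, 5))
      s = tolower(sub_dn)
      # Match the sub-suffix entry itself, or any DN ending in ",<sub-suffix>".
      if (dn == s || (length(dn) > length(s) && substr(dn, length(dn) - length(s)) == "," s))
        inside++
      else
        outside++
    }
  ' "$ldif"
}

# Usage with the sample data:
# count_split generated.ldif ou=People,dc=example,dc=com
```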

If, unlike the example, you must split an existing backend, follow these steps:

Create the new backend.

Export the data for the backend.

Import the data for the sub-suffix into the new backend using the --includeBranch option.

Delete all data under the sub-suffix from the old backend.

For an example, see "Delete: Removing a Subtree" in the Developer's Guide.

When you split an existing backend, you must handle the service interruption that results when you move the sub-suffix data out of the original backend. If there is not a maintenance window where you can bring the service down in order to move the data, consider alternatives for replaying changes applied between the time that you exported the data and the time that you retired the old sub-suffix.

DS directory servers can encrypt directory data before storing it in a database backend on disk, keeping the data confidential until it is accessed by a directory client.

Data encryption is useful for at least the following cases:

- Ensuring Confidentiality and Integrity

Encrypted directory data is confidential, remaining private until decrypted with a proper key.

Encryption ensures data integrity at the moment it is accessed. The DS directory service cannot decrypt corrupted data.

- Protection on a Shared Infrastructure

When you deploy directory services on a shared infrastructure you relinquish full and sole control of directory data.

For example, if the DS directory server runs in the cloud, or in a data center with shared disks, the file system and disk management are not under your control.

Data confidentiality and encryption come with the following trade-offs:

- Equality Indexes Limited to Equality Matching

When an equality index is configured without confidentiality, the values can be maintained in sorted order. A non-confidential, cleartext equality index can therefore be used for searches that require ordering and searches that match an initial substring.

An example of a search that requires ordering is a search with the filter "(cn<=App)". The filter matches entries whose commonName value sorts at or before App (case-insensitive) in alphabetical order.

An example of a search that matches an initial substring is a search with the filter "(cn=A*)". The filter matches entries having a commonName that starts with a (case-insensitive).

In an equality index with confidentiality enabled, the DS directory server no longer sorts cleartext values. As a result, you must accept that ordering and initial substring searches are unindexed.

- Performance Impact

Encryption and decryption require more processing than handling cleartext values.

Encrypted values also take up more space than cleartext values.
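The equality index trade-off can be illustrated with a toy model built from standard tools, assuming GNU coreutils for sha256sum (on macOS, shasum -a 256 is the usual substitute). Sorted cleartext keys let the server answer "(cn<=App)" and "(cn=A*)" by scanning one contiguous run of keys. Replacing each key with a digest still supports exact matches, but the digests carry no alphabetical order. The digests are only a stand-in: this sketch does not reproduce how DS encodes confidential index keys. The sample values and function names are hypothetical.

```shell
#!/usr/bin/env bash
# Toy model of equality-index keys (NOT how DS stores indexes).
# sha256sum stands in for a confidential, non-order-preserving encoding.
export LC_ALL=C   # byte-order sorting and string comparisons

lc() { printf '%s' "$1" | tr '[:upper:]' '[:lower:]'; }

# Sample commonName values (hypothetical), one per line.
values=$'Zoe\napple\nAbby\nBob\nApp\nApricot'

# Cleartext keys: normalized to lowercase for case-insensitive matching, then sorted.
clear_keys=$(printf '%s\n' "$values" | tr '[:upper:]' '[:lower:]' | sort)

# (cn<=App): walk the sorted keys and stop at the first key past the bound.
range_le() {
  printf '%s\n' "$clear_keys" | awk -v max="$(lc "$1")" '$0 > max { exit } { print }'
}

# (cn=A*): matching keys form one contiguous run; stop as soon as the run ends.
prefix() {
  printf '%s\n' "$clear_keys" |
    awk -v p="$(lc "$1")" 'index($0, p) == 1 { print; seen = 1; next } seen { exit }'
}

# Confidential keys: digests of the normalized values, sorted by digest.
# Digest order is unrelated to alphabetical order, so range_le and prefix
# have no contiguous run to scan; only exact lookups remain possible.
hashed_keys=$(printf '%s\n' "$clear_keys" | while read -r v; do
  printf '%s' "$v" | sha256sum | cut -d ' ' -f 1
done | sort)

exact_match() {
  local digest
  digest=$(lc "$1" | sha256sum | cut -d ' ' -f 1)
  printf '%s\n' "$hashed_keys" | grep -qx "$digest"
}

range_le App              # prints abby, then app
prefix A                  # prints abby, app, apple, apricot
exact_match Bob && echo "Bob: exact match found"
```

Note that apple does not match "(cn<=App)", because apple sorts after app.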

As explained in "Protect DS Server Files", DS directory servers do not encrypt directory data by default. This means that any user with system access to read directory files can potentially access directory data in cleartext.

You can verify what a system user could see with read access to backend database files by using the backendstat dump-raw-db command. The backendstat subcommands list-raw-dbs and dump-raw-db help you list and view the low-level databases within a backend. Unlike the output of other subcommands, the output of the dump-raw-db subcommand is neither decrypted nor formatted for readability. Instead, you can see values as they are stored in the backend file.