Install a Single Node Deployment

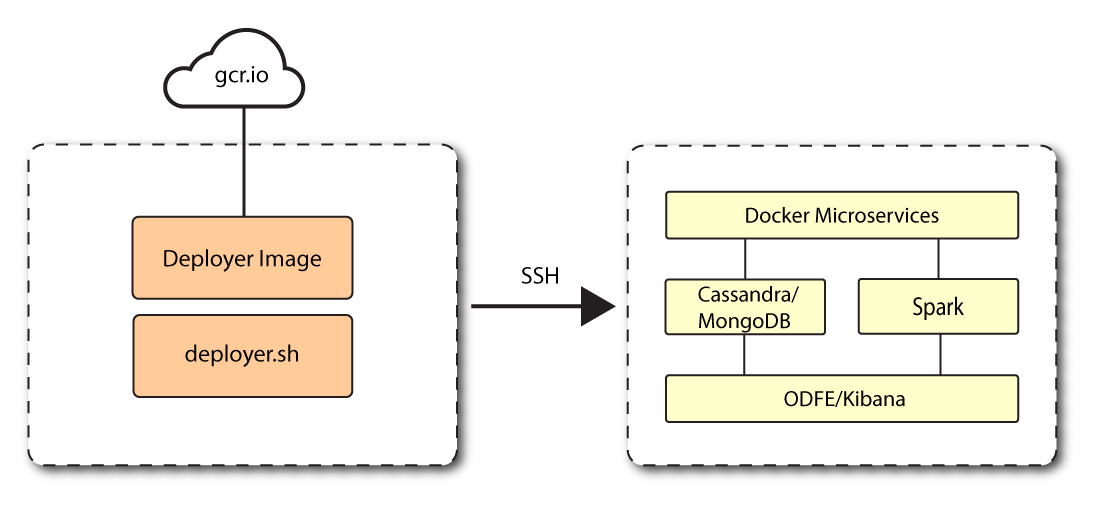

This section presents instructions on deploying Autonomous Identity on a single target machine that has Internet connectivity. ForgeRock provides a deployer script that pulls a Docker image from ForgeRock’s Google Cloud Registry (gcr.io) repository. The image contains the microservices, analytics, and backend databases needed for the system.

This installation assumes that you set up the deployer script on a separate machine from the target. This lets you launch a build from a laptop or local server.

Figure 6: A single-node target deployment.

Prerequisites

Let’s deploy Autonomous Identity on a single-node target on CentOS 7. The following are prerequisites:

-

Operating System. The target machine requires CentOS 7. The deployer machine can use any operating system as long as Docker is installed. For this chapter, we use CentOS 7 as its base operating system.

-

Disk Space Requirements. Make sure you have enough free disk space on the deployer machine before running the deployer.sh commands. We recommend at least 500GB.

-

Default Shell. The default shell for the autoid user must be bash.

-

Deployment Requirements. Autonomous Identity provides a Docker image that creates a deployer.sh script. The script downloads additional images necessary for the installation. To download the deployment images, you must first obtain a registry key to log into the ForgeRock Google Cloud Registry (gcr.io). The registry key is only available to ForgeRock Autonomous Identity customers. For specific instructions on obtaining the registry key, see How To Configure Service Credentials (Push Auth, Docker) in Backstage.

-

Database Requirements. Decide which database you are using: Apache Cassandra or MongoDB.

-

IPv4 Forwarding. Many high security environments run their CentOS-based systems with IPv4 forwarding disabled. However, Docker Swarm does not work with a disabled IPv4 forward setting. In such environments, make sure to enable IPv4 forwarding in the file /etc/sysctl.conf:

net.ipv4.ip_forward=1
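As a minimal sketch of the IPv4 forwarding check, the following shell function reports whether a sysctl configuration file already contains an active net.ipv4.ip_forward=1 line. The function name ip_forward_enabled is hypothetical and not part of Autonomous Identity. It only inspects the file, does not change the running kernel setting, and does not account for a later line in the file overriding an earlier one.

```shell
# Hypothetical helper: succeeds (exit status 0) if the given sysctl
# configuration file contains an active "net.ipv4.ip_forward=1" line.
# Commented-out lines are ignored. Defaults to /etc/sysctl.conf.
ip_forward_enabled() {
  conf_file="${1:-/etc/sysctl.conf}"
  grep -Eq '^[[:space:]]*net\.ipv4\.ip_forward[[:space:]]*=[[:space:]]*1[[:space:]]*$' "$conf_file"
}
```

For example, ip_forward_enabled /etc/sysctl.conf || echo "IPv4 forwarding is not enabled" prints a warning when the line is missing. After editing the file, sudo sysctl -p reloads the settings from /etc/sysctl.conf.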

Set Up the Target Machine

Autonomous Identity is configured on a target machine. Make sure you have sufficient storage for your particular deployment. For more information on sizing considerations, see the Deployment Planning Guide.

-

The install assumes that you have CentOS 7 as your operating system. Check your CentOS 7 version.

$ sudo cat /etc/centos-release

-

Set the user for the target machine to a username of your choice. For example, autoid.

$ sudo adduser autoid

-

Set the password for the user you created in the previous step.

$ sudo passwd autoid

-

Configure the user for passwordless sudo.

$ echo "autoid ALL=(ALL) NOPASSWD:ALL" | sudo tee /etc/sudoers.d/autoid

-

Add administrator privileges to the user.

$ sudo usermod -aG wheel autoid

-

Change to the user account.

$ su - autoid

-

Install the yum-utils package on the target machine. yum-utils is a collection of utilities that extend Yum for managing repositories and RPM packages.

$ sudo yum -y install yum-utils
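The prerequisites require bash as the default shell for the autoid user. As a rough sketch, the helpers below read one passwd-format entry from standard input and check its login shell field. The function names login_shell_from_passwd and uses_bash are hypothetical and not part of Autonomous Identity.

```shell
# Hypothetical helper: prints the login shell (7th colon-separated field)
# from a single passwd-format entry read on standard input.
login_shell_from_passwd() {
  cut -d: -f7
}

# Hypothetical helper: succeeds if the passwd entry on standard input
# names bash as the login shell (for example /bin/bash or /usr/bin/bash).
uses_bash() {
  case "$(login_shell_from_passwd)" in
    */bash) return 0 ;;
    *) return 1 ;;
  esac
}
```

For example, getent passwd autoid | uses_bash || echo "autoid does not use bash" flags a different shell. If needed, sudo usermod -s /bin/bash autoid changes the login shell.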

Set Up the Deployer Machine

Set up another machine as a deployer node. You can use a machine running any operating system for the deployer as long as it has Docker installed. For this example, we use CentOS 7.

-

The install assumes that you have CentOS 7 as your operating system. Check your CentOS 7 version.

$ sudo cat /etc/centos-release

-

Set the user for the deployer machine to a username of your choice. For example, autoid.

$ sudo adduser autoid

-

Set the password for the user you created in the previous step.

$ sudo passwd autoid

-

Configure the user for passwordless sudo.

$ echo "autoid ALL=(ALL) NOPASSWD:ALL" | sudo tee /etc/sudoers.d/autoid

-

Add administrator privileges to the user.

$ sudo usermod -aG wheel autoid

-

Change to the user account.

$ su - autoid

-

Install the yum-utils package on the deployer machine. yum-utils is a collection of utilities that extend Yum for managing repositories and RPM packages; it provides the yum-config-manager command used later to add the Docker repository.

$ sudo yum -y install yum-utils

-

Create the installation directory. Note that you can use any install directory for your system as long as you run the deployer.sh script from there. Also, the disk volume where you have the install directory must have at least 8GB free space for the installation.

$ mkdir ~/autoid-config
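To confirm the 8GB free-space requirement before continuing, a small check along the following lines may help. This is a sketch only: the function name has_free_kb is hypothetical, and it relies on the POSIX output format of df -Pk, where the fourth column of the second line is the available space in 1024-byte blocks.

```shell
# Hypothetical helper: succeeds if the file system holding the given
# directory has at least the requested number of free kilobytes.
# "df -Pk" prints POSIX-format output in 1024-byte blocks; the fourth
# column of the second line is the available space.
has_free_kb() {
  dir="$1"
  min_kb="$2"
  avail_kb=$(df -Pk "$dir" | awk 'NR==2 {print $4}')
  [ "$avail_kb" -ge "$min_kb" ]
}
```

For example, has_free_kb ~/autoid-config $((8 * 1024 * 1024)) || echo "Less than 8GB free" warns when the volume is too small.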

Install Docker on the Deployer Machine

Install Docker on the deployer machine. We run commands from this machine to install Autonomous Identity on the target machine. In this example, we use CentOS 7.

-

On the deployer machine, set up the Docker-CE repository.

$ sudo yum-config-manager \
    --add-repo https://download.docker.com/linux/centos/docker-ce.repo

-

Install the latest version of Docker CE, the command-line interface, and containerd.io, the container runtime.

$ sudo yum -y install docker-ce docker-ce-cli containerd.io

-

Enable Docker to start at boot.

$ sudo systemctl enable docker

-

Start Docker.

$ sudo systemctl start docker

-

Check that Docker is running.

$ systemctl status docker

-

Add the user to the Docker group.

$ sudo usermod -aG docker ${USER}

-

Log out of the user account.

$ logout

-

Log in again as the user created for the deployer machine. For example, autoid.

$ su - autoid
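After logging back in, you can confirm that the new session picked up the docker group. The following is a sketch under the assumption that id -nG lists the group names of the current session separated by spaces. The function name session_in_group is hypothetical.

```shell
# Hypothetical helper: succeeds if the current login session belongs to
# the named group. "id -nG" with no user argument reports the groups of
# the running process, so it reflects the effect of logging in again.
session_in_group() {
  group="$1"
  id -nG | tr ' ' '\n' | grep -qxF -- "$group"
}
```

For example, session_in_group docker || echo "This session is not in the docker group yet" indicates that you still need to log out and back in.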

Set Up SSH on the Deployer

This section shows how to set up SSH keys for the autoid user to the target machine. This is a critical step and necessary for a successful deployment.

-

On the deployer machine, change to the SSH directory.

$ cd ~/.ssh

-

Run ssh-keygen to generate a 2048-bit RSA keypair for the autoid user, and then press Enter. Use the default settings, and do not enter a passphrase for your private key.

$ ssh-keygen -t rsa -C "autoid"

The public and private RSA key pair is stored in home-directory/.ssh/id_rsa and home-directory/.ssh/id_rsa.pub.

-

Copy the private SSH key to the autoid-config directory.

$ cp id_rsa ~/autoid-config

-

Restrict the permissions on the copied key file so that only its owner can read it.

$ chmod 400 ~/autoid-config/id_rsa

-

Copy your public SSH key, id_rsa.pub, to the target machine’s ~/.ssh/authorized_keys file. If your target system does not have an ~/.ssh/authorized_keys file, create it using sudo mkdir -p ~/.ssh, then sudo touch ~/.ssh/authorized_keys.

This example uses ssh-copy-id to copy the public key to the target machine, which may or may not be available on your operating system. You can also manually copy and paste the public key into ~/.ssh/authorized_keys on the target machine.

$ ssh-copy-id -i id_rsa.pub autoid@<Target IP Address>

The ssh-copy-id command requires that you have public key authentication enabled on the target server. You can enable it by editing the /etc/ssh/sshd_config file on the target machine. For example: sudo vi /etc/ssh/sshd_config, set PubkeyAuthentication yes, and save the file. Next, restart sshd: sudo systemctl restart sshd.

-

On the deployer machine, test your SSH connection to the target machine. This is a critical step. Make sure the connection works before proceeding with the installation.

$ ssh -i ~/.ssh/id_rsa autoid@<Target IP Address>
Last login: Tue Dec 14 14:06:06 2020

-

While still connected to the target node over SSH, set the permissions on ~/.ssh and ~/.ssh/authorized_keys.

$ chmod 700 ~/.ssh && chmod 600 ~/.ssh/authorized_keys

-

If you successfully accessed the remote server and changed the privileges on ~/.ssh, enter exit to end your SSH session.
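The permission settings from the steps above can be verified with a short check. This is a sketch that assumes GNU stat (stat -c), as shipped with CentOS 7. The function name has_mode is hypothetical.

```shell
# Hypothetical helper: succeeds if the path has exactly the given octal
# permission bits. Uses GNU stat ("stat -c '%a'"), which prints the
# permissions in octal, for example 700 or 600.
has_mode() {
  [ "$(stat -c '%a' "$1")" = "$2" ]
}
```

For example, on the target node, has_mode ~/.ssh 700 && has_mode ~/.ssh/authorized_keys 600 succeeds only when both settings match. On the deployer, has_mode ~/autoid-config/id_rsa 400 checks the copied private key.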

Install Autonomous Identity

-

On the deployer machine, change to the installation directory.

$ cd ~/autoid-config

-

Log in to the ForgeRock Google Cloud Registry (gcr.io) using the registry key. The registry key is only available to ForgeRock Autonomous Identity customers. For specific instructions on obtaining the registry key, see How To Configure Service Credentials (Push Auth, Docker) in Backstage.

$ docker login -u _json_key -p "$(cat autoid_registry_key.json)" https://gcr.io/forgerock-autoid

You should see:

Login Succeeded

-

Run the create-template command to generate the deployer.sh script wrapper and configuration files. Note that the command sets the configuration directory on the target node to /config. The --user parameter eliminates the need to use sudo while editing the hosts file and other configuration files.

$ docker run --user=$(id -u) -v ~/autoid-config:/config -it gcr.io/forgerock-autoid/deployer:2021.8.7 create-template
…
d6c7c6f3303e: Pull complete
Digest: sha256:15225be65417f8bfb111adea37c83eb5e0d87140ed498bfb624a358f43fbbf
Status: Downloaded newer image for gcr.io/forgerock-autoid/autoid/dev-compact/deployer@sha256:15225be65417f8bfb111adea37c83eb5e0d87140ed498bfb624a358f43fbbf
Config template is copied to host machine directory mapped to /config

-

To see the list of commands, enter deployer.sh.

$ ./deployer.sh
Usage: deployer <command>

Commands:
  create-template
  download-images
  import-deployer
  encrypt-vault
  decrypt-vault
  run
  create-tar
  install-docker
  install-dbutils
  upgrade

Configure Autonomous Identity

The create-template command from the previous section creates a number of configuration files, required for the deployment: ansible.cfg, vars.yml, hosts, and vault.yml.

If you are running a deployment for evaluation, you can minimally set the ansible.cfg file in step 1, the private IP address mapping in the vars.yml file in step 2, and the hosts file in step 3, then jump to step 6 to download the images and run the deployer in step 7.

-

On the deployer machine, open a text editor and edit the ~/autoid-config/ansible.cfg file to set up the remote user and SSH private key file location on the target node. Make sure that the remote_user exists on the target node and that the deployer machine can ssh to the target node as the user specified in the id_rsa file. In most cases, you can use the default values.

[defaults]
host_key_checking = False
remote_user = autoid
private_key_file = id_rsa

-

On the deployer machine, open a text editor and edit the ~/autoid-config/vars.yml file to configure specific settings for your deployment:

-

AI Product. Do not change this property.

ai_product: auto-id

-

Domain and Target Environment. Set the domain name and target environment specific to your deployment by editing the ~/autoid-config/vars.yml file. By default, the domain name is set to forgerock.com and the target environment is set to autoid. The default Autonomous Identity URL will be: https://autoid-ui.forgerock.com. For this example, we use the default values.

domain_name: forgerock.com
target_environment: autoid

If you change the domain name and target environment, you need to also change the certificates to reflect the new changes. For more information, see Customize the Domain and Namespace.

-

Analytics Data Directory and Analytics Configuration Directory. Although rarely necessary for a single node deployment, you can change the analytics and analytics configuration mount directories by editing the properties in the ~/autoid-config/vars.yml file.

analytics_data_dir: /data
analytics_conf_dif: /data/conf

-

Offline Mode. Do not change this property. The property is for air-gap deployments only and should remain set to false.

-

Database Type. By default, Apache Cassandra is the database for Autonomous Identity. For MongoDB, set db_driver_type to mongo.

db_driver_type: cassandra

-

Private IP Address Mapping. If your external and internal IP addresses are different, for example, when deploying the target host in a cloud, define a mapping between the external IP address and the private IP address in the ~/autoid-config/vars.yml file.

If your external and internal IP addresses are the same, you can skip this step.

On the deployer node, add the private_ip_address_mapping property in the ~/autoid-config/vars.yml file. You can look up the private IP on the cloud console, or run sudo ifconfig on the target host. Make sure the values are within double quotes. The key should not be in double quotes and should have two spaces preceding the IP address.

private_ip_address_mapping:
  external_ip: "internal_ip"

For example:

private_ip_address_mapping:
  34.70.190.144: "10.128.0.71"

-

Authentication Option. This property has three possible values:

-

Local. Local sets up Elasticsearch with local accounts and enables the Autonomous Identity UI features: self-service and manage identities. Local auth mode should be enabled for demo environments only.

-

SSO. SSO indicates that single sign-on (SSO) is in use. With SSO only, the Autonomous Identity UI features, the self-service and manage identities pages, are not available on the system but are managed by the SSO provider. The login page displays "Sign in using OpenID." For more information, see Set Up SSO.

-

LocalAndSSO. LocalAndSSO indicates that SSO is used and local account features, like self-service and manage identities, are available to the user. The login page displays "Sign in using OpenID" and a link "Or sign in via email".

authentication_option: "Local"

-

-

Access Log. By default, the access log is enabled. If you want to disable the access log, set the access_log_enabled variable to "false".

access_log_enabled: true

-

JWT Expiry and Secret File. Optional. By default, the session JWT is set at 30 minutes. To change this value, set the jwt_expiry property to a different value.

jwt_expiry: "30 minutes"
jwt_secret_file: "{{install path}}/jwt/secret.txt"
jwt_audience: "http://my.service"
oidc_jwks_url: "na"

-

Local Auth Mode Password. When authentication_option is set to Local, the local_auth_mode_password sets the password for the login user.

-

SSO. Use these properties to set up SSO. For more information, see Set Up SSO.

-

MongoDB Settings. Use these settings to configure a MongoDB cluster. These settings are not needed for single node deployments.

-

Elasticsearch Heap Size. Optional. The default heap size for Elasticsearch is 1GB, which may be small for production. For production deployments, uncomment the option and specify 2g or 3g.

#elastic_heap_size: 1g # sets the heap size (1g|2g|3g) for the Elastic Servers

-

Java API Service. Optional. Set the Java API Service (JAS) properties for the deployment: authentication, maximum memory, and the directories for attribute mappings and data source entities:

jas:
  auth_enabled: true
  auth_type: 'jwt'
  signiture_key_id: 'service1-hmac'
  signiture_algorithm: 'hmac-sha256'
  max_memory: 4096M
  mapping_entity_type: /common/mappings
  datasource_entity_type: /common/datasources

-

-

Open a text editor and enter the target host’s public IP address in the ~/autoid-config/hosts file. Make sure the target machine’s external IP address is accessible from the deployer machine. NOTE: [notebook] is not used in Autonomous Identity.

If you configured Cassandra as your database, the ~/autoid-config/hosts file is as follows for single-node target deployments:

[docker-managers]
34.70.190.144

[docker-workers]
34.70.190.144

[docker:children]
docker-managers
docker-workers

[cassandra-seeds]
34.70.190.144

[spark-master]
34.70.190.144

[spark-workers]
34.70.190.144

[mongo_master]

[mongo_replicas]

[mongo:children]
mongo_replicas
mongo_master

# ELastic Nodes
[odfe-master-node]
34.70.190.144

[odfe-data-nodes]
34.70.190.144

[kibana-node]
34.70.190.144

If you configured MongoDB as your database, the ~/autoid-config/hosts file is as follows for single-node target deployments:

[docker-managers]
34.70.190.144

[docker-workers]
34.70.190.144

[docker:children]
docker-managers
docker-workers

[cassandra-seeds]

[spark-master]
34.70.190.144

[spark-workers]
34.70.190.144

[mongo_master]
34.70.190.144 mongodb_master=True

[mongo_replicas]
34.70.190.144

[mongo:children]
mongo_replicas
mongo_master

# ELastic Nodes
[odfe-master-node]
34.70.190.144

[odfe-data-nodes]
34.70.190.144

[kibana-node]
34.70.190.144

-

Open a text editor and set the Autonomous Identity passwords for the configuration service, Cassandra or MongoDB database and their keystore/truststore, and the Elasticsearch service. The vault passwords file is located at ~/autoid-config/vault.yml.

Despite the presence of special characters in the examples below, do not include special characters, such as & or $, in your production vault.yml passwords as it will result in a failed deployer process.

configuration_service_vault:
  basic_auth_password: ~@C~O>@%^()-_+=|<Y*$$rH&&/m#g{?-o!z/1}2??3=!*&

cassandra_vault:
  cassandra_password: ~@C~O>@%^()-_+=|<Y*$$rH&&/m#g{?-o!z/1}2??3=!*&
  cassandra_admin_password: ~@C~O>@%^()-_+=|<Y*$$rH&&/m#g{?-o!z/1}2??3=!*&
  keystore_password: Acc#1234
  truststore_password: Acc#1234

mongo_vault:
  mongo_admin_password: ~@C~O>@%^()-_+=|<Y*$$rH&&/m#g{?-o!z/1}2??3=!*&
  mongo_root_password: ~@C~O>@%^()-_+=|<Y*$$rH&&/m#g{?-o!z/1}2??3=!*&
  keystore_password: Acc#1234
  truststore_password: Acc#1234

elastic_vault:
  elastic_admin_password: ~@C~O>@%^()-_+=|<Y*$$rH&&/m#g{?-o!z/1}2??3=!*&
  elasticsearch_password: ~@C~O>@%^()-_+=|<Y*$$rH&&/m#g{?-o!z/1}2??3=!*&
  keystore_password: Acc#1234
  truststore_password: Acc#1234

-

Encrypt the vault file that stores the Autonomous Identity passwords, located at ~/autoid-config/vault.yml. The encrypted passwords will be saved to /config/.autoid_vault_password. The /config/ mount is internal to the deployer container.

$ ./deployer.sh encrypt-vault

-

Download the images. This step downloads software dependencies needed for the deployment and places them in the autoid-packages directory.

$ ./deployer.sh download-images

Make sure you have no unreachable or failed processes before proceeding to the next step.

PLAY RECAP *****************************************************************************
localhost                  : ok=20   changed=12   unreachable=0    failed=0    skipped=8    rescued=0    ignored=0

-

Run the deployment. The command installs the packages, and starts the microservices and the analytics service.

$ ./deployer.sh run

Make sure you have no unreachable or failed processes before proceeding to the next step.

PLAY RECAP ************************************************************************************
34.70.190.144              : ok=643  changed=319  unreachable=0    failed=0    skipped=79   rescued=0    ignored=1
localhost                  : ok=34   changed=14   unreachable=0    failed=0    skipped=4    rescued=0    ignored=0
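The check for unreachable or failed processes in the recap output can be automated. The following is a sketch, assuming the recap lines keep the unreachable=N and failed=N format shown in the examples. The function name recap_ok is hypothetical and not part of the deployer.

```shell
# Hypothetical helper: reads Ansible "PLAY RECAP" host lines on standard
# input and succeeds only if every host line reports unreachable=0 and
# failed=0. Lines without those counters (such as the "PLAY RECAP ***"
# banner) are skipped. Fails if no host line is seen at all.
recap_ok() {
  awk '
    /unreachable=[0-9]+/ && /failed=[0-9]+/ {
      seen = 1
      if ($0 !~ /unreachable=0([^0-9]|$)/ || $0 !~ /failed=0([^0-9]|$)/) bad = 1
    }
    END { exit (bad || !seen) ? 1 : 0 }
  '
}
```

For example, if you saved the recap lines to a file named recap.txt (a hypothetical file name), recap_ok < recap.txt || echo "Deployment reported unreachable or failed hosts" flags a problem before you continue.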

Resolve Hostname

After installing Autonomous Identity, set up the hostname resolution for your deployment.

Resolve the hostname:

-

Configure your DNS servers to access the Autonomous Identity dashboard on the target node. The following domain name must resolve to the IP address of the target node:

<target-environment>-ui.<domain-name>

-

If DNS cannot resolve the target node hostname, add it locally on the machine from which you want to access Autonomous Identity using a browser. Open a text editor and add an entry in the /etc/hosts (Linux/Unix) file or C:\Windows\System32\drivers\etc\hosts (Windows) for the self-service and UI services for each managed target node.

<Target IP Address> <target-environment>-ui.<domain-name>

For example:

34.70.190.144 autoid-ui.forgerock.com

-

If you set up a custom domain name and target environment, add the entries in /etc/hosts. For example:

34.70.190.144 myid-ui.abc.com

For more information on customizing your domain name, see Customize Domains.
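The hosts entry format described above can be generated from its three parts. This is a minimal sketch. The function name hosts_entry is hypothetical.

```shell
# Hypothetical helper: prints an /etc/hosts line that maps the target IP
# address to <target-environment>-ui.<domain-name>.
hosts_entry() {
  ip="$1"
  target_environment="$2"
  domain_name="$3"
  printf '%s %s-ui.%s\n' "$ip" "$target_environment" "$domain_name"
}
```

For example, hosts_entry 34.70.190.144 autoid forgerock.com prints 34.70.190.144 autoid-ui.forgerock.com. On Linux, piping the output to sudo tee -a /etc/hosts appends the line to the file.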

Access the Dashboard

Access the Autonomous Identity console UI:

-

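Open a browser and go to the Autonomous Identity URL. With the default domain name and target environment, the URL is https://autoid-ui.forgerock.com. If you set up your own URL, use it for your login.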

-

Log in as a test user.

test user: bob.rodgers@forgerock.com
password: <password>

Check Apache Cassandra

Check Cassandra:

-

On the target node, check the status of Apache Cassandra.

$ /opt/autoid/apache-cassandra-3.11.2/bin/nodetool status

-

An example output is as follows:

Datacenter: datacenter1
=======================
Status=Up/Down
|/ State=Normal/Leaving/Joining/Moving
--  Address        Load      Tokens  Owns (effective)  Host ID                             Rack
UN  34.70.190.144  1.33 MiB  256     100.0%            a10a91a4-96e83dd-85a2-4f90d19224d9  rack1

If you see a "data set too large for maximum size" error message while checking the status or starting Apache Cassandra, then you must update the segment size and timeout settings.

Check MongoDB

Check the status of MongoDB:

-

On the target node, check the status of MongoDB.

$ mongo --tls \
    --host <Host IP> \
    --tlsCAFile /opt/autoid/mongo/certs/rootCA.pem \
    --tlsAllowInvalidCertificates \
    --tlsCertificateKeyFile /opt/autoid/mongo/certs/mongodb.pem

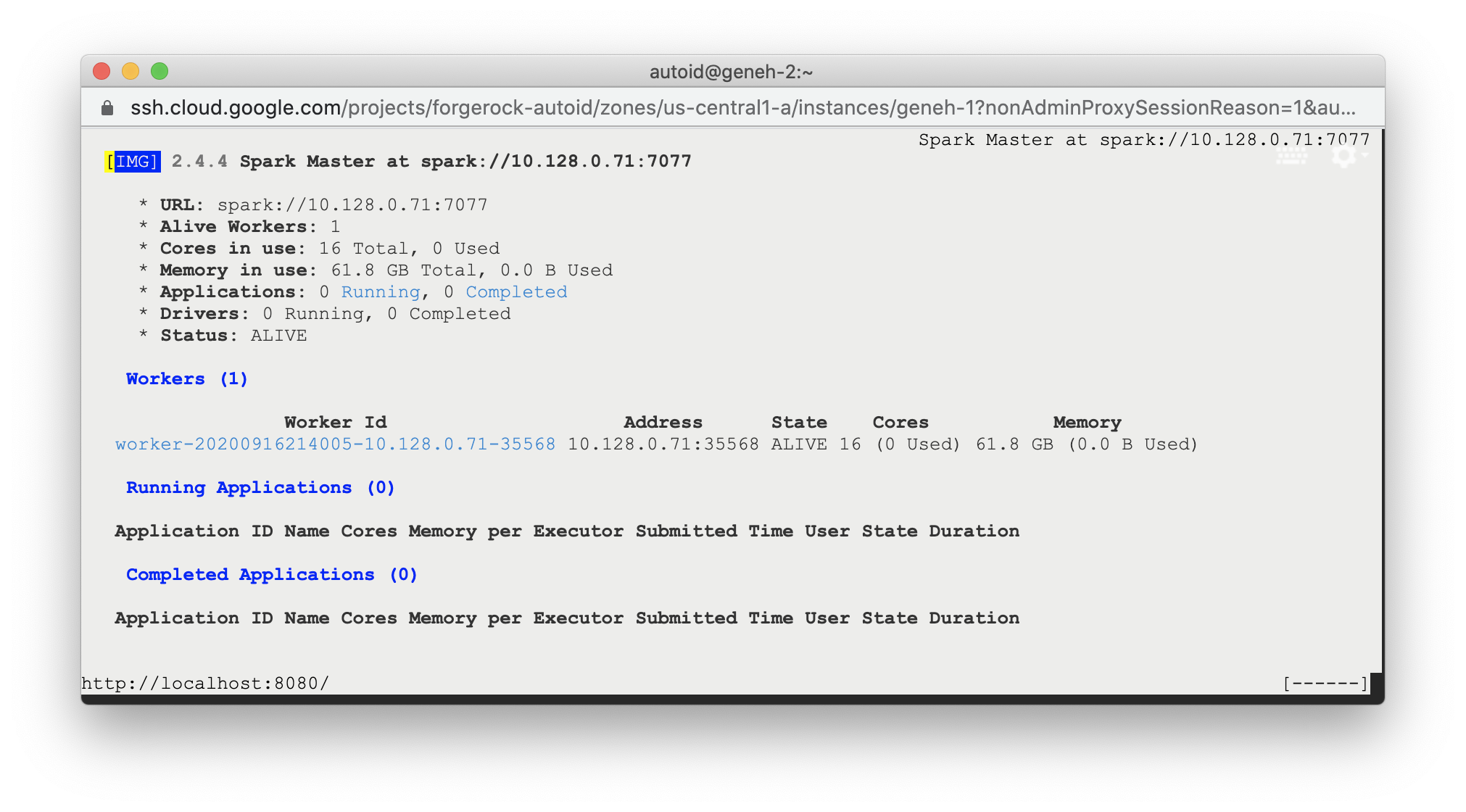

Check Apache Spark

Check Spark:

-

SSH to the target node and open the Spark dashboard using the bundled text-mode web browser.

$ elinks http://localhost:8080

You should see Spark Master status as ALIVE and worker(s) with State ALIVE.


Start the Analytics

If the previous installation steps all succeeded, you must now prepare your data’s entity definitions, data sources, and attribute mappings prior to running your analytics jobs. These steps are required and are critical for a successful analytics process.

For more information, see Set Entity Definitions.