Guide to configurating and integrating OpenIDM into identity management solutions. The OpenIDM project offers flexible, open source services for automating management of the identity life cycle.

This guide shows you how to integrate OpenIDM as part of a complete identity management solution.

This guide is written for systems integrators building identity management solutions based on OpenIDM services. This guide describes OpenIDM, and shows you how to set up OpenIDM as part of your identity management solution.

You do not need to be an OpenIDM wizard to learn something from this guide, though a background in identity management and building identity management solutions can help.

Most examples in the documentation are created in GNU/Linux or Mac OS X

operating environments.

If distinctions are necessary between operating environments,

examples are labeled with the operating environment name in parentheses.

To avoid repetition file system directory names are often given

only in UNIX format as in /path/to/server,

even if the text applies to C:\path\to\server as well.

Absolute path names usually begin with the placeholder

/path/to/.

This path might translate to /opt/,

C:\Program Files\, or somewhere else on your system.

Command-line, terminal sessions are formatted as follows:

$ echo $JAVA_HOME /path/to/jdk

Command output is sometimes formatted for narrower, more readable output even though formatting parameters are not shown in the command.

Program listings are formatted as follows:

class Test {

public static void main(String [] args) {

System.out.println("This is a program listing.");

}

}ForgeRock publishes comprehensive documentation online:

The ForgeRock Knowledge Base offers a large and increasing number of up-to-date, practical articles that help you deploy and manage ForgeRock software.

While many articles are visible to community members, ForgeRock customers have access to much more, including advanced information for customers using ForgeRock software in a mission-critical capacity.

ForgeRock product documentation, such as this document, aims to be technically accurate and complete with respect to the software documented. It is visible to everyone and covers all product features and examples of how to use them.

The ForgeRock.org site has links to source code for ForgeRock open source software, as well as links to the ForgeRock forums and technical blogs.

If you are a ForgeRock customer, raise a support ticket instead of using the forums. ForgeRock support professionals will get in touch to help you.

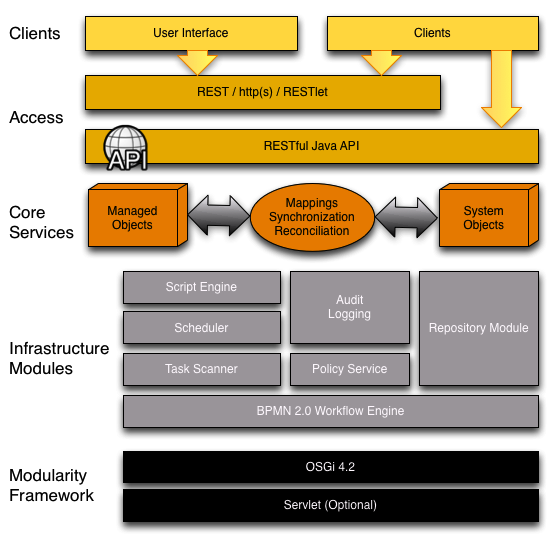

The following figure provides an overview of the OpenIDM architecture, which is covered in more detail in subsequent sections of this chapter.

The OpenIDM framework is based on OSGi.

- OSGi

OSGi is a module system and service platform for the Java programming language that implements a complete and dynamic component model. For a good introduction, see the OSGi site. While OpenIDM services are designed to run in any OSGi container, OpenIDM currently runs in Apache Felix.

- Servlet

The optional Servlet layer provides RESTful HTTP access to the managed objects and services. While the Servlet layer can be provided by many different engines, OpenIDM embeds Jetty by default.

OpenIDM infrastructure modules provide the underlying features needed for core services.

- BPMN 2.0 Workflow Engine

OpenIDM provides an embedded workflow and business process engine based on Activiti and the Business Process Model and Notation (BPMN) 2.0 standard.

For more information, see Integrating Business Processes and Workflows.

- Task Scanner

OpenIDM provides a task scanning mechanism that enables you to perform a batch scan for a specified date in OpenIDM data, on a scheduled interval, and then to execute a task when this date is reached.

For more information, see Scanning Data to Trigger Tasks.

- Scheduler

The scheduler provides a cron-like scheduling component implemented using the Quartz library. Use the scheduler, for example, to enable regular synchronizations and reconciliations.

See the Scheduling Synchronization chapter for details.

- Script Engine

The script engine is a pluggable module that provides the triggers and plugin points for OpenIDM. OpenIDM currently implements a JavaScript engine.

- Policy Service

OpenIDM provides an extensible policy service that enables you to apply specific validation requirements to various components and properties.

For more information, see Using Policies to Validate Data.

- Audit Logging

Auditing logs all relevant system activity to the configured log stores. This includes the data from reconciliation as a basis for reporting, as well as detailed activity logs to capture operations on the internal (managed) and external (system) objects.

See the Using Audit Logs chapter for details.

- Repository

The repository provides a common abstraction for a pluggable persistence layer. OpenIDM 2.1 supports use of MySQL to back the repository. Yet, plugin repositories can include NoSQL and relational databases, LDAP, and even flat files. The repository API operates using a JSON-based object model with RESTful principles consistent with the other OpenIDM services. The default, embedded implementation for the repository is the NoSQL database OrientDB, making it easy to evaluate OpenIDM out of the box before using MySQL in your production environment.

The core services are the heart of the OpenIDM resource oriented unified object model and architecture.

- Object Model

Artifacts handled by OpenIDM are Java object representations of the JavaScript object model as defined by JSON. The object model supports interoperability and potential integration with many applications, services and programming languages. As OpenIDM is a Java-based product, these representations are instances of classes:

Map,List,String,Number,Boolean, andnull.OpenIDM can serialize and deserialize these structures to and from JSON as required. OpenIDM also exposes a set of triggers and functions that system administrators can define in JavaScript which can natively read and modify these JSON-based object model structures. OpenIDM is designed to support other scripting and programming languages.

- Managed Objects

A managed object is an object that represents the identity-related data managed by OpenIDM. Managed objects are configurable, JSON-based data structures that OpenIDM stores in its pluggable repository. The default configuration of a managed object is that of a user, but you can define any kind of managed object, for example, groups or roles.

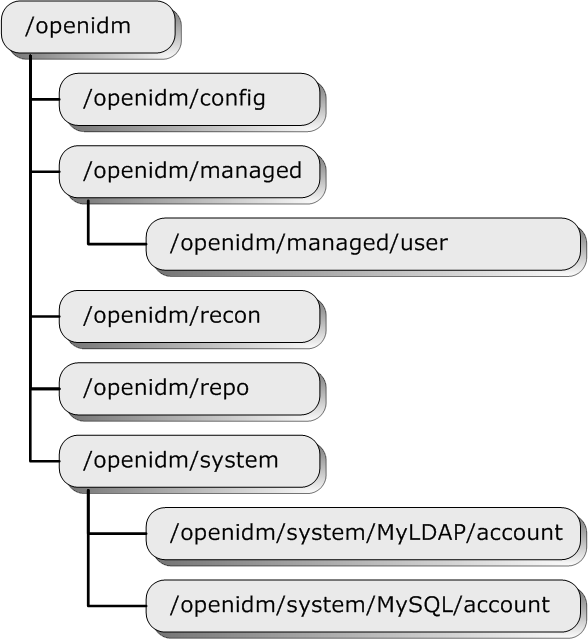

You can access managed objects over the REST interface with a query similar to the following:

$ curl --header "X-OpenIDM-Username: openidm-admin" --header "X-OpenIDM-Password: openidm-admin" --request GET "http://localhost:8080/openidm/managed/..."- System Objects

System objects are pluggable representations of objects on external systems. For example, a user entry that is stored in an external LDAP directory is represented as a system object in OpenIDM.

System objects follow the same RESTful resource-based design principles as managed objects. They can be accessed over the REST interface with a query similar to the following:

$ curl --header "X-OpenIDM-Username: openidm-admin" --header "X-OpenIDM-Password: openidm-admin" --request GET "http://localhost:8080/openidm/system/..."There is a default implementation for the OpenICF framework, that allows any connector object to be represented as a system object.

- Mappings

Mappings define policies between source and target objects and their attributes during synchronization and reconciliation. Mappings can also define triggers for validation, customization, filtering, and transformation of source and target objects.

See the Configuring Synchronization chapter for details.

- Synchronization & Reconciliation

Reconciliation provides for on-demand and scheduled resource comparisons between the OpenIDM managed object repository and source or target systems. Comparisons can result in different actions depending on the mappings defined between the systems.

Synchronization provides for creating, updating, and deleting resources from a source to a target system either on demand or according to a schedule.

See the Configuring Synchronization chapter for details.

The access layer provides the user interfaces and public APIs for accessing and managing the OpenIDM repository and its functions.

- RESTful Interfaces

OpenIDM provides REST APIs for CRUD operations and invoking synchronization and reconciliation for both HTTP and Java.

See the REST API Reference appendix for details.

- User Interfaces

User interfaces provide password management, registration, self-service, and workflow services.

This chapter covers the scripts provided for starting and stopping OpenIDM, and describes how to verify the health of a system, that is, that all requirements are met for a successful system startup.

By default you start and stop OpenIDM in interactive mode.

To start OpenIDM interactively, open a terminal or command window,

change to the openidm directory, and run the startup

script:

startup.sh (UNIX)

startup.bat (Windows)

The startup script starts OpenIDM, and opens an OSGi console with a

-> prompt where you can issue console commands.

To stop OpenIDM interactively in the OSGi console, enter the shutdown command.

-> shutdown

You can also start OpenIDM as a background process on UNIX, Linux, and Mac OS X. Follow these steps before starting OpenIDM for the first time.

If you have already started OpenIDM, then shut down OpenIDM and remove the Felix cache files under

openidm/felix-cache/.-> shutdown ... $ rm -rf felix-cache/*

Disable

ConsoleHandlerlogging before starting OpenIDM by editingopenidm/conf/logging.propertiesto setjava.util.logging.ConsoleHandler.level = OFF, and to comment out other references toConsoleHandler, as shown in the following excerpt.# ConsoleHandler: A simple handler for writing formatted records to System.err #handlers=java.util.logging.FileHandler, java.util.logging.ConsoleHandler handlers=java.util.logging.FileHandler ... # --- ConsoleHandler --- # Default: java.util.logging.ConsoleHandler.level = INFO java.util.logging.ConsoleHandler.level = OFF #java.util.logging.ConsoleHandler.formatter = ... #java.util.logging.ConsoleHandler.filter=...Remove the text-based OSGi console bundle,

bundle/org.apache.felix.shell.tui-version.jar.Start OpenIDM in the background.

$ ./startup.sh &

Alternatively, use the nohup command to keep OpenIDM running after you log out.

$ nohup ./startup.sh & [2] 394 $ appending output to nohup.out $

To stop OpenIDM running as a background process, use the shutdown.sh script.

$ ./shutdown.sh ./shutdown.sh Stopping OpenIDM (454)

By default, OpenIDM starts up with the configuration and script files

that are located in the openidm/conf and

openidm/script directories, and with the binaries that

are in the default install location. You can launch OpenIDM with a different

configuration and set of script files, and even with a different set of

binaries, in order to test a new configuration, managed multiple different

OpenIDM projects, or to run one of the included samples.

The startup.sh script enables you to specify the

following elements of a running OpenIDM instance.

project location (

-p)The project location specifies the configuration and default scripts with which OpenIDM will run.

If you specify the project location, OpenIDM does not try to locate configuration objects in the default location. All configuration objects and any artifacts that are not in the bundled defaults (such as custom scripts) must be provided in the project location. This includes everything that is in the default

openidm/confandopenidm/scriptdirectories.The following command starts OpenIDM with the configuration of sample 1:

$ ./startup.sh -p /path/to/openidm/samples/sample1

If an absolute path is not provided, the path is relative to the system property,

user.dir. If no project location is specified, OpenIDM is launched with the default configuration in/path/to/openidm/conf.working location (

-w)The working location specifies the directory to which OpenIDM writes its cache. Specifying a working location separates the project from the cached data that the system needs to store. The working location includes everything that is in the default

openidm/dbandopenidm/audit,openidm/felix-cache, andopenidm/logsdirectories.The following command specifies that OpenIDM writes its cached data to

/Users/admin/openidm/storage:$ ./startup.sh -w /Users/admin/openidm/storage

If an absolute path is not provided, the path is relative to the system property,

user.dir. If no working location is specified, OpenIDM writes its cached data toopenidm/dbandopenidm/logs.startup configuration file (

-c)A customizable startup configuration file (named

launcher.json) enables you to specify how the OSGi Framework is started.If no configuration file is specified, the default configuration (defined in

/path/to/openidm/bin/launcher.json) is used. The following command starts OpenIDM with an alternative startup configuration file:$ ./startup.sh -c /Users/admin/openidm/bin/launcher.json

You can modify the default startup configuration file to specify a different startup configuration.

The customizable properties of the default startup configuration file are as follows:

"location" : "bundle"- resolves to the install location. You can also load OpenIDM from a specified zip file ("location" : "openidm.zip") or you can install a single jar file ("location" : "openidm-system-2.1.jar")."includes" : "**/openidm-system-*.jar"- the specified folder is scanned for jar files relating to the system startup. If the value of"includes"is*.jar, you must specifically exclude any jars in the bundle that you do not want to install, by setting the"excludes"property."start-level" : 1- specifies a start level for the jar files identified previously."action" : "install.start"- a period-separated list of actions to be taken on the jar files. Values can be one or more of"install.start.update.uninstall"."config.properties"- takes either a path to a configuration file (relative to the project location) or a list of configuration properties and their values. The list must be in the format"string":"string", for example:"config.properties" : { "property" : "value" },"system.properties"- takes either a path to asystem.propertiesfile (relative to the project location) or a list of system properties and their values. The list must be in the format"string":"string", for example:"system.properties" : { "property" : "value" },"boot.properties"- takes either a path to aboot.propertiesfile (relative to the project location) or a list of boot properties and their values.The list must be in the format"string":object, for example:"boot.properties" : { "property" : true },

OpenIDM includes a customizable information service that provides

detailed information about a running OpenIDM instance. The information can

be accessed over the REST interface, under the context

http://localhost:8080/openidm/info.

By default, OpenIDM provides the following information:

Basic information about the health of the system.

This information can be accessed over REST at

http://localhost:8080/openidm/info/ping. For example:$ curl --header "X-OpenIDM-Username: openidm-admin" --header "X-OpenIDM-Password: openidm-admin" --request GET "http://localhost:8080/openidm/info/ping" {"state":"ACTIVE_READY","shortDesc":"OpenIDM ready"}The information is provided by the script

openidm/bin/defaults/script/info/ping.js.Information about the current OpenIDM session.

This information can be accessed over REST at

http://localhost:8080/openidm/info/login. For example:$ curl --header "X-OpenIDM-Username: openidm-admin" --header "X-OpenIDM-Password: openidm-admin" --request GET "http://localhost:8080/openidm/info/login" { "username":"openidm-admin", "userid":{ "id":"openidm-admin", "component":"internal/user" } }The information is provided by the script

openidm/bin/defaults/script/info/login.js.

You can extend or override the default information that is provided by

creating your own script file and its corresponding configuration file in

openidm/conf/info-name.json.

Custom script files can be located anywhere, although a best practice is to

place them in openidm/script/info. A sample customized

script file for extending the default ping service is provided in

openidm/samples/infoservice/script/info/customping.js.

The corresponding configuration file is provided in

openidm/samples/infoservice/conf/info-customping.json.

The configuration file has the following syntax:

{

"infocontext" : "ping",

"type" : "text/javascript",

"file" : "script/info/customping.js"

}

The parameters in the configuration file are as follows:

"infocontext"specifies the relative name of the info endpoint under the info context. The information can be accessed over REST at this endpoint, for example, setting"infocontext"to"mycontext/myendpoint"would make the information accessible over REST athttp://localhost:8080/openidm/info/mycontext/myendpoint."type"specifies the type of the information source. Currently, only Javascript is supported, so the type must be"text/javascript"."file"specifies the path to the Javascript file, if you do not provide a"source"parameter."source"specifies the actual Javascript, if you have not provided a"file"parameter.

Additional properties can be passed to the script in this configuration

file (openidm/samples/infoservice/conf/info-

name.json).

Script files in openidm/samples/infoservice/script/info/

have access to the following objects:

request- the request details, including the method called and any parameters passed.healthinfo- the current health status of the system.openidm- access to the JSON resource API.Any additional properties that are defined in the configuration file (

openidm/samples/infoservice/conf/info- name.json.)

Due to the highly modular, configurable nature of OpenIDM, it is often difficult to assess whether a system has started up successfully, or whether the system is ready and stable after dynamic configuration changes have been made.

OpenIDM provides a configurable health check service that verifies that the required modules and services for an operational system are up and running. During system startup, OpenIDM checks that these modules and services are available and reports on whether any requirements for an operational system have not been met. If dynamic configuration changes are made, OpenIDM rechecks that the required modules and services are functioning so that system operation is monitored on an ongoing basis.

The health check service reports on the state of the OpenIDM system and outputs this state to the console and to the log files. The system can be in one of the following states:

STARTING - OpenIDM is starting up |

ACTIVE_READY - all of the specified requirements

have been met to consider the OpenIDM system ready |

ACTIVE_NOT_READY - one or more of the specified

requirements have not been met and the OpenIDM system is not considered ready

|

STOPPING - OpenIDM is shutting down |

By default, OpenIDM checks the following modules and services:

Required Modules

"org.forgerock.openicf.framework.connector-framework" "org.forgerock.openicf.framework.connector-framework-internal" "org.forgerock.openicf.framework.connector-framework-osgi" "org.forgerock.openidm.audit" "org.forgerock.openidm.core" "org.forgerock.openidm.enhanced-config" "org.forgerock.openidm.external-email" "org.forgerock.openidm.external-rest" "org.forgerock.openidm.filter" "org.forgerock.openidm.httpcontext" "org.forgerock.openidm.infoservice" "org.forgerock.openidm.policy" "org.forgerock.openidm.provisioner" "org.forgerock.openidm.provisioner-openicf" "org.forgerock.openidm.repo" "org.forgerock.openidm.restlet" "org.forgerock.openidm.smartevent" "org.forgerock.openidm.system" "org.forgerock.openidm.ui" "org.forgerock.openidm.util" "org.forgerock.commons.org.forgerock.json.resource" "org.forgerock.commons.org.forgerock.json.resource.restlet" "org.forgerock.commons.org.forgerock.restlet" "org.forgerock.commons.org.forgerock.util" "org.forgerock.openidm.security-jetty" "org.forgerock.openidm.jetty-fragment" "org.forgerock.openidm.quartz-fragment" "org.ops4j.pax.web.pax-web-extender-whiteboard" "org.forgerock.openidm.scheduler" "org.ops4j.pax.web.pax-web-jetty-bundle" "org.forgerock.openidm.repo-jdbc" "org.forgerock.openidm.repo-orientdb" "org.forgerock.openidm.config" "org.forgerock.openidm.crypto"

Required Services

"org.forgerock.openidm.config"

"org.forgerock.openidm.provisioner"

"org.forgerock.openidm.provisioner.openicf.connectorinfoprovider"

"org.forgerock.openidm.external.rest"

"org.forgerock.openidm.audit"

"org.forgerock.openidm.policy"

"org.forgerock.openidm.managed"

"org.forgerock.openidm.script"

"org.forgerock.openidm.crypto"

"org.forgerock.openidm.recon"

"org.forgerock.openidm.info"

"org.forgerock.openidm.router"

"org.forgerock.openidm.scheduler"

"org.forgerock.openidm.scope"

"org.forgerock.openidm.taskscanner"

You can replace this list, or add to it, by adding the following lines

to the openidm/conf/boot/boot.properties file:

"openidm.healthservice.reqbundles" - overrides

the default required bundles. Bundles are specified as a list of symbolic

names, separated by commas. |

"openidm.healthservice.reqservices" - overrides

the default required services. Services are specified as a list of symolic

names, separated by commas. |

"openidm.healthservice.additionalreqbundles" -

specifies required bundles (in addition to the default list). Bundles are

specified as a list of symbolic names, separated by commas. |

"openidm.healthservice.additionalreqservices" -

specifies required services (in addition to the default list). Services are

specified as a list of symbolic names, separated by commas. |

By default, OpenIDM gives the system ten seconds to start up all

the required bundles and services, before the system readiness is assessed.

Note that this is not the total start time, but the time required to complete

the service startup after the framework has started. You can change this

default by setting the value of the servicestartmax

property (in miliseconds) in the

openidm/conf/boot/boot.properties file. This example

sets the startup time to five seconds.

openidm.healthservice.servicestartmax=5000

The health check service works in tandem with the scriptable information service. For more information see Section 2.3, "Obtaining Information About an OpenIDM Instance".

OpenIDM includes a basic command-line interface that provides a number of utilities for managing the OpenIDM instance.

All of the utilities are subcommands of the cli.sh

(UNIX) or cli.bat (Windows) scripts. To use the utilities,

you can either run them as subcommands, or launch the cli

script first, and then run the utility. For example, to run the

encrypt utility on a UNIX system:

$ cd /path/to/openidm $ ./cli.sh Using boot properties at /openidm/conf/boot/boot.properties openidm# encrypt ....

or

$ cd /path/to/openidm $ ./cli.sh encrypt ...

By default, the command-line utilities run with the properties defined

in /path/to/openidm/conf/boot/boot.properties.

The startup and shutdown scripts are not discussed in this chapter. For information about these scripts, see Starting and Stopping OpenIDM.

The following sections describe the subcommands and their use. Examples assume that you are running the commands on a UNIX system. For Windows systems, use cli.bat instead of cli.sh.

The configexport subcommand exports all configuration objects to a specified location, enabling you to reuse a system configuration in another environment. For example, you can test a configuration in a development environment, then export it and import it into a production environment. This subcommand also enables you to inspect the active configuration of an OpenIDM instance.

OpenIDM must be running when you execute this command.

Usage is as follows:

$ ./cli.sh configexport /export-location

For example:

$ ./cli.sh configexport /tmp/conf

Configuration objects are exported, as .json files,

to the specified directory. Configuration files that are present in this

directory are renamed as backup files, with a timestamp, for example,

audit.json.2012-12-19T12-00-28.bkp, and are not

overwritten. The following configuration objects are exported:

The internal repository configuration (

repo.orientdb.jsonorrepo.jdbc.json)The log configuration (

audit.json)The authentication configuration (

authentication.json)The managed object configuration (

managed.json)The connector configuration (

provisioner.openicf-*.json)The router service configuration (

router.json)The scheduler service configuration (

scheduler.json)Any configured schedules (

schedule-*.json)The synchronization mapping configuration (

sync.json)If workflows are defined, the configuration of the workflow engine (

workflow.json) and the workflow access configuration (process-access.json)Any configuration files related to the user interface (

ui-*.json)The configuration of any custom endpoints (

endpoint-*.json)The policy configuration (

policy.json)

The configimport subcommand imports configuration objects from the specified directory, enabling you to reuse a system configuration from another environment. For example, you can test a configuration in a development environment, then export it and import it into a production environment.

The command updates the existing configuration from the import-location over the OpenIDM REST interface. By default, if configuration objects are present in the import-location and not in the existing configuration, these objects are added. If configuration objects are present in the existing location but not in the import-location, these objects are left untouched in the existing configuration.

If you include the --replaceAll parameter, the

command wipes out the existing configuration and replaces it with the

configuration in the import-location. Objects in

the existing configuration that are not present in the

import-location are deleted.

Usage is as follows:

$ ./cli.sh configimport [--replaceAll] /import-location

For example:

$ ./cli.sh configimport --replaceAll /tmp/conf

Configuration objects are imported, as .json files,

from the specified directory to the conf directory. The

configuration objects that are imported are outlined in the corresponding

export command, described in the previous section.

The configureconnector subcommand generates a configuration for an OpenICF connector.

Usage is as follows:

$ ./cli.sh configureconnector connector-name

Select the type of connector that you want to configure. The following example configures a new XML connector.

$ ./cli.sh configureconnector myXmlConnector Using boot properties at /openidm/conf/boot/boot.properties Dec 11, 2012 10:35:37 AM org.restlet.ext.httpclient.HttpClientHelper start INFO: Starting the HTTP client 0. CSV File Connector version 1.1.1.0 1. LDAP Connector version 1.1.1.0 2. org.forgerock.openicf.connectors.scriptedsql.ScriptedSQLConnector version 1.1.1.0 3. XML Connector version 1.1.1.0 4. Exit Select [0..4]: 3 Edit the configuration file and run the command again. The configuration was saved to /openidm/temp/provisioner.openicf-myXmlConnector.json

The basic configuration is saved in a file named

/openidm/temp/provisioner.openicf-connector-name.json.

Edit the configurationProperties parameter in this file to

complete the connector configuration. For an XML connector, you can use the

schema definitions in sample 0 for an example configuration.

"configurationProperties" : {

"xmlFilePath" : "samples/sample0/data/resource-schema-1.xsd",

"createFileIfNotExists" : false,

"xsdFilePath" : "samples/sample0/data/resource-schema-extension.xsd",

"xsdIcfFilePath" : "samples/sample0/data/xmlConnectorData.xml"

},

For more information about the connector configuration properties, see Configuring Connectors.

When you have modified the file, run the configureconnector command again so that OpenIDM can pick up the new connector configuration.

$ ./cli.sh configureconnector myXmlConnector Using boot properties at /openidm/conf/boot/boot.properties Configuration was found and picked up from: /openidm/temp/provisioner.openicf-myXmlConnector.json Dec 11, 2012 10:55:28 AM org.restlet.ext.httpclient.HttpClientHelper start INFO: Starting the HTTP client ...

You can also configure connectors over the REST interface. For more information, see Creating Default Connector Configurations.

The encrypt subcommand encrypts an input string, or JSON object, provided at the command line. This subcommand can be used to encrypt passwords, or other sensitive data, to be stored in the OpenIDM repository. The encrypted value is output to standard output and provides details of the cryptography key that is used to encrypt the data.

Usage is as follows:

$ ./cli.sh encrypt [-j] string

The -j option specifies that the string to be

encrypted is a JSON object. If you do not enter the string as part of the

command, the command prompts for the string to be encrypted. If you enter

the string as part of the command, any special characters, for example

quotation marks, must be escaped.

The following example encrypts a normal string value:

$ ./cli.sh encrypt mypassword

Using boot properties at /openidm/conf/boot/boot.properties

Oct 23, 2012 2:00:03 PM org.forgerock.openidm.crypto.impl.CryptoServiceImpl activate

INFO: Activating cryptography service of type: JCEKS provider: location: security/keystore.jceks

Oct 23, 2012 2:00:03 PM org.forgerock.openidm.crypto.impl.CryptoServiceImpl activate

INFO: Available cryptography key: openidm-sym-default

Oct 23, 2012 2:00:03 PM org.forgerock.openidm.crypto.impl.CryptoServiceImpl activate

INFO: Available cryptography key: openidm-localhost

Oct 23, 2012 2:00:03 PM org.forgerock.openidm.crypto.impl.CryptoServiceImpl activate

INFO: Available cryptography key: openidm-local-openidm-forgerock-org

Oct 23, 2012 2:00:03 PM org.forgerock.openidm.crypto.impl.CryptoServiceImpl activate

INFO: CryptoService is initialized with 3 keys.

-----BEGIN ENCRYPTED VALUE-----

{

"$crypto" : {

"value" : {

"iv" : "M2913T5ZADlC2ip2imeOyg==",

"data" : "DZAAAM1nKjQM1qpLwh3BgA==",

"cipher" : "AES/CBC/PKCS5Padding",

"key" : "openidm-sym-default"

},

"type" : "x-simple-encryption"

}

}

------END ENCRYPTED VALUE------

The following example encrypts a JSON object. The input string must be a valid JSON object.

$ ./cli.sh encrypt -j {\"password\":\"myPassw0rd\"}

Using boot properties at /openidm/conf/boot/boot.properties

Oct 23, 2012 2:00:03 PM org.forgerock.openidm.crypto.impl.CryptoServiceImpl activate

INFO: Activating cryptography service of type: JCEKS provider: location: security/keystore.jceks

Oct 23, 2012 2:00:03 PM org.forgerock.openidm.crypto.impl.CryptoServiceImpl activate

INFO: Available cryptography key: openidm-sym-default

Oct 23, 2012 2:00:03 PM org.forgerock.openidm.crypto.impl.CryptoServiceImpl activate

INFO: Available cryptography key: openidm-localhost

Oct 23, 2012 2:00:03 PM org.forgerock.openidm.crypto.impl.CryptoServiceImpl activate

INFO: Available cryptography key: openidm-local-openidm-forgerock-org

Oct 23, 2012 2:00:03 PM org.forgerock.openidm.crypto.impl.CryptoServiceImpl activate

INFO: CryptoService is initialized with 3 keys.

-----BEGIN ENCRYPTED VALUE-----

{

"$crypto" : {

"value" : {

"iv" : "M2913T5ZADlC2ip2imeOyg==",

"data" : "DZAAAM1nKjQM1qpLwh3BgA==",

"cipher" : "AES/CBC/PKCS5Padding",

"key" : "openidm-sym-default"

},

"type" : "x-simple-encryption"

}

}

------END ENCRYPTED VALUE------

The following example prompts for a JSON object to be encrypted. In this case, you need not escape the special characters.

$ ./cli.sh encrypt -j

Using boot properties at /openidm/conf/boot/boot.properties

Enter the Json value

> Press ctrl-D to finish input

Start data input:

{"password":"myPassw0rd"}

^D

Oct 23, 2012 2:37:56 PM org.forgerock.openidm.crypto.impl.CryptoServiceImpl activate

INFO: Activating cryptography service of type: JCEKS provider: location: security/keystore.jceks

Oct 23, 2012 2:37:56 PM org.forgerock.openidm.crypto.impl.CryptoServiceImpl activate

INFO: Available cryptography key: openidm-sym-default

Oct 23, 2012 2:37:56 PM org.forgerock.openidm.crypto.impl.CryptoServiceImpl activate

INFO: Available cryptography key: openidm-localhost

Oct 23, 2012 2:37:56 PM org.forgerock.openidm.crypto.impl.CryptoServiceImpl activate

INFO: Available cryptography key: openidm-local-openidm-forgerock-org

Oct 23, 2012 2:37:56 PM org.forgerock.openidm.crypto.impl.CryptoServiceImpl activate

INFO: CryptoService is initialized with 3 keys.

-----BEGIN ENCRYPTED VALUE-----

{

"$crypto" : {

"value" : {

"iv" : "6e0RK8/4F1EK5FzSZHwNYQ==",

"data" : "gwHSdDTmzmUXeD6Gtfn6JFC8cAUiksiAGfvzTsdnAqQ=",

"cipher" : "AES/CBC/PKCS5Padding",

"key" : "openidm-sym-default"

},

"type" : "x-simple-encryption"

}

}

------END ENCRYPTED VALUE------

The keytool subcommand exports or imports private key values.

The Java keytool command enables you to export and import public keys and certificates, but not private keys. The OpenIDM keytool subcommand provides this functionality.

Usage is as follows:

./cli.sh keytool [--export, --import] alias

For example, to export the default OpenIDM symmetric key, run the following command:

$ ./cli.sh keytool --export openidm-sym-default Using boot properties at /openidm/conf/boot/boot.properties Use KeyStore from: /openidm/security/keystore.jceks Please enter the password: [OK] Secret key entry with algorithm AES AES:606d80ae316be58e94439f91ad8ce1c0

The default keystore password is changeit. You

should change this password after installation.

To import a new secret key named my-new-key, run the following command:

$ ./cli.sh keytool --import my-new-key Using boot properties at /openidm/conf/boot/boot.properties Use KeyStore from: /openidm/security/keystore.jceks Please enter the password: Enter the key: AES:606d80ae316be58e94439f91ad8ce1c0

If a secret key of that name already exists, OpenIDM returns the following error:

"KeyStore contains a key with this alias"

The validate subcommand validates all .json

configuration files in the openidm/conf/ directory.

Usage is as follows:

$ ./cli.sh validate Using boot properties at /openidm/conf/boot/boot.properties ................................................................... [Validating] Load JSON configuration files from: [Validating] /openidm/conf [Validating] audit.json .................................. SUCCESS [Validating] authentication.json ......................... SUCCESS [Validating] endpoint-getavailableuserstoassign.json ..... SUCCESS [Validating] endpoint-getprocessesforuser.json ........... SUCCESS [Validating] endpoint-gettasksview.json .................. SUCCESS [Validating] endpoint-securityQA.json .................... SUCCESS [Validating] endpoint-siteIdentification.json ............ SUCCESS [Validating] endpoint-usernotifications.json ............. SUCCESS [Validating] managed.json ................................ SUCCESS [Validating] policy.json ................................. SUCCESS [Validating] process-access.json ......................... SUCCESS [Validating] provisioner.openicf-ldap.json ............... SUCCESS [Validating] provisioner.openicf-xml.json ................ SUCCESS [Validating] repo.orientdb.json .......................... SUCCESS [Validating] router.json ................................. SUCCESS [Validating] schedule-recon.json ......................... SUCCESS [Validating] schedule-reconcile_systemXmlAccounts_managedUser.json SUCCESS [Validating] scheduler.json .............................. SUCCESS [Validating] sync.json ................................... SUCCESS [Validating] ui-configuration.json ....................... SUCCESS [Validating] ui-countries.json ........................... SUCCESS [Validating] ui-secquestions.json ........................ SUCCESS [Validating] workflow.json ............................... SUCCESS

OpenIDM provides a customizable, browser-based user interface. The default user interface enables administrative users to create, modify and delete user accounts. It provides role-based access to tasks based on BPMN2 workflows, and allows users to manage certain aspects of their own accounts, including configurable self-service registration.

The default user interface is provided as a reference implementation that demonstrates the capabilities of the REST API. You can modify certain aspects of the default user interface according to the requirements of your deployment. Note, however, that the default user interface is still evolving and is not guaranteed to be compatible with the next OpenIDM release.

To access the user interface, install and start OpenIDM, then point your browser to http://localhost:8080/openidmui.

Log in as the default administrative user (Login: openidm-admin, Password: openidm-admin) or as an existing user in the repository. The display differs, depending on the role of the user that has logged in.

For an administrative user (role openidm-admin),

two tabs are displayed - Dashboard and Users. The Dashboard tab lists any

tasks assigned to the user, processes available to be invoked, and any

notifications for that user. The Users tab provides an interface to manage

user entries (OpenIDM managed objects under managed/user).

The Profile link enables the user to modify elements

of his user data. The Change Security Data link enables

the user to change his password and, optionally, to select a new security

question.

For a regular user (role openidm-authorized), the

Users tab is not displayed - so regular users cannot manage user accounts,

except for certain aspects of their own accounts.

The following sections outline the configurable aspects of the default user interface.

Self-registration (the ability for new users to create their own

accounts) is disabled by default. To enable self-registration, set

"selfRegistration" to true in

the conf/ui-configuration.json file.

{

"configuration" : {

"selfRegistration" : true,

...

With "selfRegistration" : true, the following

capabilities are provided on the right-hand pane of the user interface:

| Register my account |

| Reset my password |

User objects created using self-registration automatically have the

role openidm-authorized.

Security questions are disabled by default. To guard against

unauthorized access, you can specify that users be prompted with security

questions if they request a password reset. A default set of questions is

provided, but you can add to these, or overwrite them.

To enable security questions, set "securityQuestions"

to true in the

conf/ui-configuration.json file.

{

"configuration" : {

"securityQuestions" : true,

...

Specify the list of questions to be asked in the

conf/ui-secquestions.json file.

Refresh your browser after this configuration change for the change to be picked up by the UI.

To ensure that users are entering their details onto the correct site, you can enable site identification. Site identification provides a preventative measure against phishing.

With site identification enabled, users are presented with a range of

images from which they can select. To enable site identification, set

"siteIdentification" to true

in the conf/ui-configuration.json file.

{

"configuration" : {

"siteIdentification" : true,

...

Refresh your browser after this configuration change for the change to be picked up by the UI.

A default list of four images is presented for site identification.

The images are defined in the siteImages property in the

conf/ui-configuration.json file:

"siteImages" : [

"images/passphrase/mail.png",

"images/passphrase/user.png",

"images/passphrase/report.png",

"images/passphrase/twitter.png"

],

...

The user selects one of these images, which is displayed on login. In addition, the user enters a Site Phrase, which is displayed beneath the site image on login. If either the site image, or site phrase is incorrect or absent when the user logs in, the user is aware that he is not logging in to the correct site.

You can change the default images, and include additional images, by

placing image files in the ui/extension/images folder

and modifying the siteImages property in the

ui-configuration.json file to point to the new images.

The following example assumes a file named my-new-image.jpg,

located in ui/extension/images.

"siteImages" : [

"images/passphrase/mail.png",

"images/passphrase/user.png",

"images/passphrase/report.png",

"images/passphrase/twitter.png",

"images/my-new-image.jpg"

],

...

Note that the default image files are located in

ui/default/admin/public/images/passphrase.

The default user profile includes the ability to specify the user's

country and state or province. To specify the countries that appear in

this drop down list, and their associated states or provinces, edit the

conf/ui-countries.json file. For example, to add

Norway to the list of countries, you would add the following to the

conf/ui-countries.json file:

{

"key" : "norway",

"value" : "Norway",

"states" : [

{

"key" : "akershus",

"value" : "Akershus"

},

{

"key" : "aust-agder",

"value" : "Aust-Agder"

},

{

"key" : "buskerud",

"value" : "Buskerud"

},

...

Refresh your browser after this configuration change for the change to be picked up by the UI.

Only administrative users (with the role openidm-admin)

can add, modify, and delete user accounts. Regular users can modify certain

aspects of their own accounts.

Log into the user interface as an administrative user.

Select the Users tab.

Click Add User.

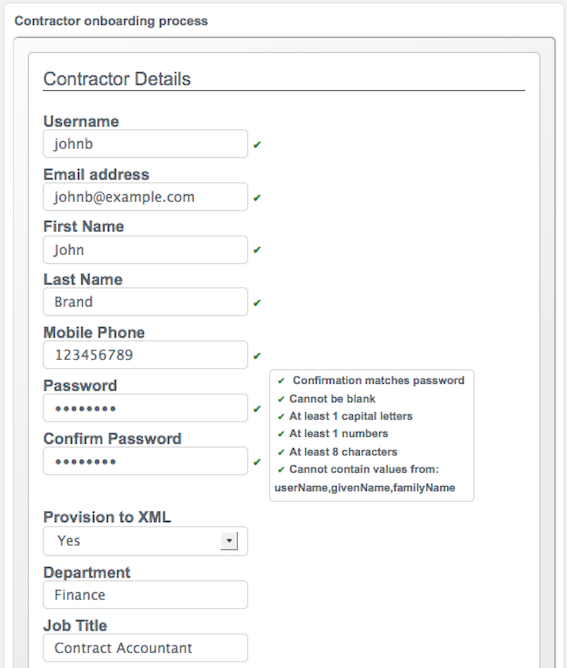

Complete the fields on the Create new account page.

Most of these fields are self-explanatory. Be aware that the user interface is subject to policy validation, as described in Using Policies to Validate Data. So, for example, the Email address must be of valid email address format, and the Password must comply with the password validation settings that are indicated in the panel to the right.

The Admin Role field reflects the roles that are defined in the

ui-configuration.jsonfile. The roles are mapped as follows:"roles" : { "openidm-admin" : "Administrator", "openidm-authorized" : "User", "tasks-manager" : "Tasks Manager" },By default, a user can be assigned more than one role. Only users with the

tasks-managerrole can assign tasks to any candidate user for that task.

Log into the user interface as an administrative user.

Select the Users tab.

Click the Username of the user that you want to update.

On the user's profile page, modify the fields you want to change and click Update.

The user account is updated in the internal repository.

Users can change their own passwords by following the Change Security Data link in their profiles. This process requires that users know their existing passwords.

In a situation where a user forgets his password, an administrator can reset the password of that user without knowing the user's existing password.

Follow steps 1-3 in Procedure 4.2, "To Update a User Account".

On the user's profile page, click Change password.

Enter a new password that conforms to the password policy and click Update.

The user password is updated in the repository.

Log into the user interface as an administrative user.

Select the Users tab.

Click the Username of the user that you want to delete.

On the user's profile page, click Delete.

Click OK to confirm the deletion.

The user is deleted from the internal repository.

The user interface is integrated with the embedded Activiti worfklow

engine, enabling users to interact with workflows. Available workflows are

displayed under the Processes item on the Dashboard. In order for a workflow

to be displayed here, the workflow definition file must be present in the

openidm/workflow directory.

A sample workflow integration with the user interface is provided in

openidm/samples/workflow, and documented in Sample Workflow -

Provisioning User Accounts. Follow the steps in that

sample for an understanding of how the workflow integration works.

Access to workflows is based on OpenIDM roles, and is configured in

the file conf/process-access.json. By default all users

with the role openidm-authorized or

openidm-admin can invoke any available workflow. The

default process-access.json file is as follows:

{

"workflowAccess" : [

{

"propertiesCheck" : {

"property" : "_id",

"matches" : ".*",

"requiresRole" : "openidm-authorized"

}

},

{

"propertiesCheck" : {

"property" : "_id",

"matches" : ".*",

"requiresRole" : "openidm-admin"

}

}

]

}

OpenIDM configuration is split between .properties and container

configuration files, and also dynamic configuration objects. The majority

of OpenIDM configuration files are stored under

openidm/conf/, as described in the appendix listing the

File

Layout.

OpenIDM stores configuration objects in its internal repository. You can manage the configuration by using either the REST access to the configuration objects, or by using the JSON file based views.

OpenIDM exposes internal configuration objects in JSON format. Configuration elements can be either single instance or multiple instance for an OpenIDM installation.

Single instance configuration objects correspond to services that have at most one instance per installation.

JSON file views of these configuration objects are named

object-name.json.

The

auditconfiguration specifies how audit events are logged.The

authenticationconfiguration controls REST access.The

endpointconfiguration controls any custom REST endpoints.The

managedconfiguration defines managed objects and their schemas.The

policyconfiguration defines the policy validation service.The

process accessconfiguration defines access to any configured workflows.The

repo.repo-typeconfiguration such asrepo.orientdborrepo.jdbcconfigures the internal repository.The

routerconfiguration specifies filters to apply for specific operations.The

syncconfiguration defines the mappings that OpenIDM uses when synchronizing and reconciling managed objects.The

uiconfiguration defines the configurable aspects of the default user interface.The

workflowconfiguration defines the configuration of the workflow engine.

Multiple instance configuration objects correspond to services that

can have many instances per installation. Configuration objects are named

objectname/instancename

. For example, provisioner.openicf/xml.

JSON file views of these configuration objects

are named objectname-instancename.json. For example,

provisioner.openicf-xml.json.

Multiple

scheduleconfigurations can run reconciliations and other tasks on different schedules.Multiple

provisioner.openicfconfigurations correspond to the resources connected to OpenIDM.

When you change OpenIDM's configuration objects, take the following points into account.

OpenIDM's authoritative configuration source is the internal repository. JSON files provide a view of the configuration objects, but do not represent the authoritative source.

OpenIDM updates JSON files after making configuration changes, whether those changes are made through REST access to configuration objects, or through edits to the JSON files.

OpenIDM recognizes changes to JSON files when it is running. OpenIDM must be running when you delete configuration objects, even if you do so by editing the JSON files.

Avoid editing configuration objects directly in the internal repository. Rather edit the configuration over the REST API, or in the configuration JSON files to ensure consistent behavior and that operations are logged.

OpenIDM stores its configuration in the internal database by default. If you remove an OpenIDM instance and do not specifically drop the repository, the configuration remains in effect for a new OpenIDM instance that uses that repository. For testing or evaluation purposes, you can disable this persistent configuration in the

conf/system.propertiesfile by uncommenting the following line:# openidm.config.repo.enabled=falseDisabling persistent configuration means that OpenIDM will store its configuration in memory only. You should not disable persistent configuration in a production environment.

Out of the box, OpenIDM is configured to make it easy to install and evaluate. Specific configuration changes are required before you deploy OpenIDM in a production environment.

By default, OpenIDM uses OrientDB for its internal repository so that you do not have to install a database in order to evaluate OpenIDM. Before you use OpenIDM in production, you must replace OrientDB with a supported repository.

For more information, see Installing a Repository for Production in the Installation Guide in the Installation Guide.

By default, OpenIDM polls the JSON files in the

conf directory periodically for any changes to

the configuration. In a production system, it is recommended that

you disable automatic polling for updates to prevent untested

configuration changes from disrupting your identity service.

To disable automatic polling for configuration changes, edit the

conf/system.properties file by uncommenting the

following line:

# openidm.fileinstall.enabled=false

Before you disable automatic polling, you must have started the OpenIDM instance at least once to ensure that the configuration has been loaded into the database.

Note that scripts are loaded each time the configuration calls the script. Modifications to scripts are therefore not applied dynamically. If you modify a script, you must either modify the configuration that calls the script, or restart the component that uses the modified script. You do not need to restart OpenIDM for script modifications to take effect.

To control configuration changes to the OpenIDM system, you

disable the file-based configuration view and have OpenIDM read its

configuration only from the repository. To disable the file-based

configuration view, edit the conf/system.properties

file to uncomment the following line:

# openidm.fileinstall.enabled=false.

OpenIDM exposes configuration objects under the

/openidm/config context.

You can list the configuration on the local host by performing a GET

http://localhost:8080/openidm/config. The following

example shows the default configuration for an OpenIDM instance started

with Sample 1.

$ curl --request GET

--header "X-OpenIDM-Username: openidm-admin"

--header "X-OpenIDM-Password: openidm-admin"

http://localhost:8080/openidm/config

{

"configurations": [

{

"_id": "endpoint/getprocessesforuser",

"pid": "endpoint.788f364e-d870-4f46-982a-793525fff6f0",

"factoryPid": "endpoint"

},

{

"_id": "provisioner.openicf/xml",

"pid": "provisioner.openicf.90b18af9-fe27-45a2-a4ae-1056c04a4d31",

"factoryPid": "provisioner.openicf"

},

{

"_id": "ui/configuration",

"pid": "ui.36bb2bf4-8e19-43d2-9df2-a0553ffac590",

"factoryPid": "ui"

},

{

"_id": "managed",

"pid": "managed",

"factoryPid": null

},

{

"_id": "sync",

"pid": "sync",

"factoryPid": null

},

{

"_id": "router",

"pid": "router",

"factoryPid": null

},

{

"_id": "process/access",

"pid": "process.44743c97-a01b-4562-85ad-8a2c9b89155a",

"factoryPid": "process"

},

{

"_id": "endpoint/siteIdentification",

"pid": "endpoint.ef05a7f3-a420-4fbb-998c-02d283cae4d1",

"factoryPid": "endpoint"

},

{

"_id": "endpoint/securityQA",

"pid": "endpoint.e2d87637-c918-4056-99a1-20f25c897066",

"factoryPid": "endpoint"

},

{

"_id": "scheduler",

"pid": "scheduler",

"factoryPid": null

},

{

"_id": "ui/countries",

"pid": "ui.acde0f4c-808f-45fb-9627-d7d2ca702e7c",

"factoryPid": "ui"

},

{

"_id": "org.apache.felix.fileinstall/openidm",

"pid": "org.apache.felix.fileinstall.2dedea63-4592-4074-a709-ffa70f1e841d",

"factoryPid": "org.apache.felix.fileinstall"

},

{

"_id": "schedule/reconcile_systemXmlAccounts_managedUser",

"pid": "schedule.f53b235a-862e-4e18-a3cf-10ae3cbabc1e",

"factoryPid": "schedule"

},

{

"_id": "workflow",

"pid": "workflow",

"factoryPid": null

},

{

"_id": "endpoint/getavailableuserstoassign",

"pid": "endpoint.d19da94f-bae3-4101-922c-fe47ea8616d2",

"factoryPid": "endpoint"

},

{

"_id": "repo.orientdb",

"pid": "repo.orientdb",

"factoryPid": null

},

{

"_id": "audit",

"pid": "audit",

"factoryPid": null

},

{

"_id": "endpoint/gettasksview",

"pid": "endpoint.edcc1ff8-a7ba-4c46-8258-bf5216e85192",

"factoryPid": "endpoint"

},

{

"_id": "ui/secquestions",

"pid": "ui.649e2c65-0cc7-4a0d-a6b1-95f4c5168bdc",

"factoryPid": "ui"

},

{

"_id": "org.apache.felix.fileinstall/activiti",

"pid": "org.apache.felix.fileinstall.a0ba2f7d-bdb9-43b5-b84e-0e8feee6be72",

"factoryPid": "org.apache.felix.fileinstall"

},

{

"_id": "policy",

"pid": "policy",

"factoryPid": null

},

{

"_id": "endpoint/usernotifications",

"pid": "endpoint.e96d5319-6260-41db-af76-bd4e692b792d",

"factoryPid": "endpoint"

},

{

"_id": "org.apache.felix.fileinstall/ui",

"pid": "org.apache.felix.fileinstall.89f8c6dd-f54e-46a4-bfda-1e76ac044c33",

"factoryPid": "org.apache.felix.fileinstall"

},

{

"_id": "authentication",

"pid": "authentication",

"factoryPid": null

}

]

}Single instance configuration objects are located under

openidm/config/object-name.

The following example shows the default audit

configuration.

$ curl

--header "X-OpenIDM-Username: openidm-admin"

--header "X-OpenIDM-Password: openidm-admin"

http://localhost:8080/openidm/config/audit

{

"eventTypes": {

"activity": {

"filter": {

"actions": [

"create",

"update",

"delete",

"patch",

"action"

]

}

},

"recon": {}

},

"logTo": [

{

"logType": "csv",

"location": "audit",

"recordDelimiter": ";"

},

{

"logType": "repository"

}

]

}Multiple instance configuration objects are found under

openidm/config/object-name/instance-name. The following example shows the

configuration for the XML connector provisioner.

$ curl

--header "X-OpenIDM-Username: openidm-admin"

--header "X-OpenIDM-Password: openidm-admin"

http://localhost:8080/openidm/config/provisioner.openicf/xml

{

"name": "xmlfile",

"connectorRef": {

"bundleName":

"org.forgerock.openicf.connectors.file.openicf-xml-connector",

"bundleVersion": "1.1.1.0",

"connectorName": "com.forgerock.openicf.xml.XMLConnector"

},

"producerBufferSize": 100,

"connectorPoolingSupported": true,

"poolConfigOption": {

"maxObjects": 10,

"maxIdle": 10,

"maxWait": 150000,

"minEvictableIdleTimeMillis": 120000,

"minIdle": 1

},

"operationTimeout": {

"CREATE": -1,

"TEST": -1,

"AUTHENTICATE": -1,

"SEARCH": -1,

"VALIDATE": -1,

"GET": -1,

"UPDATE": -1,

"DELETE": -1,

"SCRIPT_ON_CONNECTOR": -1,

"SCRIPT_ON_RESOURCE": -1,

"SYNC": -1,

"SCHEMA": -1

},

"configurationProperties": {

"xsdIcfFilePath": "samples/sample1/data/resource-schema-1.xsd",

"xsdFilePath": "samples/sample1/data/resource-schema-extension.xsd",

"xmlFilePath": "samples/sample1/data/xmlConnectorData.xml"

},

"objectTypes": {

"account": {

"$schema": "http://json-schema.org/draft-03/schema",

"id": "__ACCOUNT__",

"type": "object",

"nativeType": "__ACCOUNT__",

"properties": {

"description": {

"type": "string",

"nativeName": "__DESCRIPTION__",

"nativeType": "string"

},

"firstname": {

"type": "string",

"nativeName": "firstname",

"nativeType": "string"

},

"email": {

"type": "string",

"nativeName": "email",

"nativeType": "string"

},

"__UID__": {

"type": "string",

"nativeName": "__UID__"

},

"password": {

"type": "string",

"required": false,

"nativeName": "__PASSWORD__",

"nativeType": "JAVA_TYPE_GUARDEDSTRING",

"flags": [

"NOT_READABLE",

"NOT_RETURNED_BY_DEFAULT"

]

},

"name": {

"type": "string",

"required": true,

"nativeName": "__NAME__",

"nativeType": "string"

},

"lastname": {

"type": "string",

"required": true,

"nativeName": "lastname",

"nativeType": "string"

}

}

}

},

"operationOptions": {}

}You can change the configuration over REST by using an HTTP PUT request

to modify the required configuration object. The following example modifies

the router.json file to remove all filters, effectively

bypassing any policy validation.

$ curl

--header "X-OpenIDM-Username: openidm-admin"

--header "X-OpenIDM-Password: openidm-admin"

--request PUT

--data '{

"filters" : [

{

"onRequest" : {

"type" : "text/javascript",

"file" : "bin/defaults/script/router-authz.js"

}

}

]

}'

"http://localhost:8080/openidm/config/router"

See the REST API Reference appendix for additional details and examples using REST access to update and patch objects.

In an environment where you have more than one OpenIDM instance, you might require a configuration that is similar, but not identical, across the different OpenIDM hosts. OpenIDM supports variable replacement in its configuration which means that you can modify the effective configuration according to the requirements of a specific environment or OpenIDM instance.

Property substitution enables you to achieve the following:

Define a configuration that is specific to a single OpenIDM instance, for example, setting the location of the keystore on a particular host.

Define a configuration whose parameters vary between different environments, for example, the URLs and passwords for test, development, and production environments.

Disable certain capabilities on specific nodes. For example, you might want to disable the workflow engine on specific instances.

When OpenIDM starts up, it combines the system configuration, which might contain specific environment variables, with the defined OpenIDM configuration properties. This combination makes up the effective configuration for that OpenIDM instance. By varying the environment properties, you can change specific configuration items that vary between OpenIDM instances or environments.

Property references are contained within the construct

&{ }. When such references are found, OpenIDM replaces

them with the appropriate property value, defined in the

boot.properties file.

The following example defines two separate OpenIDM environments - a development environment and a production environment. You can specify the environment at startup time and, depending on the environment, the database URL is set accordingly.

The environments are defined by adding the following lines to the

conf/boot.properties file:

PROD.location=production DEV.location=development

The database URL is then specified as follows in the

repo.orientdb.json file:

{

"dbUrl" : "local:./db/&{&{environment}.location}-openidm",

"user" : "admin",

"poolMinSize" : 5,

"poolMaxSize" : 20,

...

}

The effective database URL is determined by setting the

OPENIDM_OPTS environment variable when you start OpenIDM.

To use the production environment, start OpenIDM as follows:

$ export OPENIDM_OPTS="-Xmx1024m -Denvironment=PROD" $ ./startup.sh

To use the development environment, start OpenIDM as follows:

$ export OPENIDM_OPTS="-Xmx1024m -Denvironment=DEV" $ ./startup.sh

You can use property value substitution in conjunction with the system properties, to modify the configuration according to the system on which the OpenIDM instance runs.

The following example modifies the audit.json file so

that the log file is written to the user's directory. The

user.home property is a default Java System property.

{

"logTo" : [

{

"logType" : "csv",

"location" : "&{user.home}/audit",

"recordDelimiter" : ";"

}

]

}

You can define nested properties (that is a property definition within another property definition) and you can combine system properties and boot properties.

The following example uses the user.country property,

a default Java System property. The example defines specific LDAP ports,

depending on the country (identified by the country code) in the

boot.properties file. The value of the LDAP port (set in

the provisioner.openicf-ldap.json file) depends on the

value of the user.country System property.

The port numbers are defined in the boot.properties

file as follows:

openidm.NO.ldap.port=2389

openidm.EN.ldap.port=3389

openidm.US.ldap.port=1389The following extract from the

provisioner.openicf-ldap.json file shows how the value of

the LDAP port is eventually determined, based on the System property:

"configurationProperties" :

{

"credentials" : "Passw0rd",

"port" : "&{openidm.&{user.country}.ldap.port}",

"principal" : "cn=Directory Manager",

"baseContexts" :

[

"dc=example,dc=com"

],

"host" : "localhost"

}

Note the following limitations when you use property value substitution:

You cannot reference complex objects or properties with syntaxes other than String. Property values are resolved from the

boot.propertiesfile or from the System properties and the value of these properties is always in String format.Property substitution of boolean values is currently only supported in stringified format, that is, resulting in

"true"or"false".Substitution of encrypted property values is currently not supported.

You can customize OpenIDM to meet the specific requirements of your

deployment by adding your own RESTful endpoints. Endpoints are configured

in files named conf/endpoint-name.json,

where name generally describes the purpose of the

endpoint.

A sample custom endpoint configuration is provided at

openidm/samples/customendpoint. The sample includes

two files:

conf/endpoint-echo.json, which provides the

configuration for the endpoint. |

script/echo.js, which is launched when the

endpoint is accessed. |

The structure of an endpoint configuration file is as follows:

{

"context" : "endpoint/echo",

"type" : "text/javascript",

"file" : "script/echo.js"

}

"context"The URL context under which the endpoint is registered. Currently this must be under

endpoint/. An endpoint registered under the contextendpoint/echois addressable over REST athttp://localhost:8080/openidm/endpoint/echoand with the internal resource API, for exampleopenidm.read("endpoint/echo")."type"The type of implementation. Currently only

"text/javascript"is supported."source"or"fileThe actual script, inline, or a pointer to the file that contains the script. The sample script, (

samples/customendpoint/script/echo.js) simply returns the HTTP request when a request is made on that endpoint.

The endpoint script has a request variable

available in its scope. The request structure carries all the information

about the request, and includes the following properties:

idThe local ID, without the

endpoint/prefix, for example,echo.methodThe requested operation, that is,

create,read,update,delete,patch,queryoraction.paramsThe parameters that are passed in. For example, for an HTTP GET with

?param=x, the request contains"params":{"param":"x"}.parentProvides the context for the invocation, including headers and security.

Note that the interface for this context is still evolving and may change in a future release.

A script implementation should check and reject requests for methods

that it does not support. For example, the following implementation

supports only the read method:

if (request.method == "read") {

...

} else {

throw "Unsupported operation: " + request.method;

}

The final statement in the script is the return value. Unlike for

functions, at this global scope there is no return

keyword. In the following example, the value of the last statement

(x) is returned.

var x = "Sample return"

functioncall();

x

The following example uses the sample provided in

openidm/samples/customendpoint and shows the complete

request structure, which is returned by the query.

$ curl

--header "X-OpenIDM-Username: openidm-admin"

--header "X-OpenIDM-Password: openidm-admin"

--request GET

"http://localhost:8080/openidm/endpoint/echo?param=x"

{

"type": "resource",

"uuid": "21c5ddc6-a66e-464e-9fa4-9b777505799e",

"params": {

"param": "x"

},

"method": "query",

"parent": {

"path": "/openidm/endpoint/echo",

"headers": {

"Accept": "*/*",

"User-Agent": "curl/7.21.4 ... OpenSSL/0.9.8r zlib/1.2.5",

"Authorization": "Basic b3BlbmlkbS1hZG1pbjpvcGVuaWRtLWFkbWlu",

"Host": "localhost:8080"

},

"query": {

"param": "x"

},

"method": "GET",

"parent": {

"uuid": "bec97cbf-8618-42f8-a841-9f5f112538e9",

"parent": null,

"type": "root"

},

"type": "http",

"uuid": "7fb3e0d9-5f56-4b15-b710-28f2147cf4b4",

"security": {

"openidm-roles": [

"openidm-admin",

"openidm-authorized"

],

"username": "openidm-admin",

"userid": {

"component": "internal/user",

"id": "openidm-admin"

}

}

},

"id": "echo"

}

You must protect access to any custom endpoints by configuring the appropriate authorization for those contexts. For more information, see the Authorization section.

OpenIDM supports a variety of objects that can be addressed via a URL or URI. You can access data objects by using scripts (through the Resource API) or by using direct HTTP calls (through the REST API).

The following sections describe these two methods of accessing data objects, and provide information on constructing and calling data queries.

OpenIDM's uniform programming model means that all objects are queried and manipulated in the same way, using the Resource API. The URL or URI that is used to identify the target object for an operation depends on the object type. For an explanation of object types, see the Data Models and Objects Reference. For more information about scripts and the objects available to scripts, see the Scripting Reference.

You can use the Resource API to obtain managed objects, configuration objects, and repository objects, as follows:

val = openidm.read("managed/organization/mysampleorg")

val = openidm.read("config/custom/mylookuptable")

val = openidm.read("repo/custom/mylookuptable")For information about constructing an object ID, see URI Scheme in the REST API Reference.

You can update entire objects with the update()

function, as follows.

openidm.update("managed/organization/mysampleorg", mymap)

openidm.update("config/custom/mylookuptable", mymap)

openidm.update("repo/custom/mylookuptable", mymap)For managed objects, you can partially update an object with the

patch() function.

openidm.patch("managed/organization/mysampleorg", rev, value)

The create(), delete(), and

query() functions work the same way.

OpenIDM provides RESTful access to data objects via a REST API. To access objects over REST, you can use a browser-based REST client, such as the Simple REST Client for Chrome, or RESTClient for Firefox. Alternatively you can use the curl command-line utility.

For a comprehensive overview of the REST API, see the REST API Reference appendix.

To obtain a managed object through the REST API, depending on your

security settings and authentication configuration, perform an HTTP GET

on the corresponding URL, for example

https://localhost:8443/openidm/managed/organization/mysampleorg.

By default, the HTTP GET returns a JSON representation of the object.

OpenIDM supports an advanced query model that enables you to define queries, and to call them over the REST or Resource API.

Managed objects in the supported OpenIDM repositories can be

accessed using a parameterized query mechanism. Parameterized

queries on repositories are defined in the repository

configuration (repo.*.json) and are called

by their _queryId.

Parameterized queries provide security and portability for the query call signature, regardless of the back-end implementation. Queries that are exposed over the REST interface must be parameterized queries to guard against injection attacks and other misuse. Queries on the officially supported repositories have been reviewed and hardened against injection attacks.

For system objects, support for parameterized queries is

restricted to _queryId=query-all-ids. There is

currently no support for user-defined parameterized queries on

system objects. Typically, parameterized queries on system objects

are not called directly over the REST interface, but are issued

from internal calls, such as correlation queries.

A typical query definition is as follows:

"query-all-ids" : "select _openidm_id from ${unquoted:_resource}"To call this query, you would reference its ID, as follows:

?_queryId=query-all-ids

The following example calls query-all-ids over

the REST interface:

$ curl

--header "X-OpenIDM-Username: openidm-admin"

--header "X-OpenIDM-Password: openidm-admin"

"http://localhost:8080/openidm/managed/user/?_queryId=query-all-ids"

Native query expressions are supported for all managed objects and system objects, and can be called directly over the REST interface, rather than being defined in the repository configuration.

Native queries are intended specifically for internal callers, such as custom scripts, in situations where the parameterized query facility is insufficient. For example, native queries are useful if the query needs to be generated dynamically.

The query expression is specific to the target resource. For repositories, queries use the native language of the underlying data store. For system objects that are backed by OpenICF connectors, queries use the applicable query language of the system resource.

Native queries on the repository are made using the

_queryExpression keyword. For example:

$ curl

--header "X-OpenIDM-Username: openidm-admin"

--header "X-OpenIDM-Password: openidm-admin"

"http://localhost:8080/openidm/managed/user?_queryExpression=select+*+from+managedobjects"

Unlike parameterized queries, native queries are not portable and do not guard against injection attacks. Such query expressions should therefore not be used or made accessible over the REST interface or over HTTP, other than for development, and should be used only via the internal Resource API. For more information, see the section on Protecting Sensitive REST Interface URLs.

If you really need to expose native queries over HTTP, in a selective manner, you can design a custom endpoint to wrap such access.

OpenIDM provides an extensible policy service that enables you to apply specific validation requirements to various components and properties. The policy service provides a REST interface for reading policy requirements and validating the properties of components against configured policies. Objects and properties are validated automatically when they are created, updated, or patched. Policies can be applied to user passwords, but also to any kind of managed object.

The policy service enables you to do the following:

Read the configured policy requirements of a specific component.

Read the configured policy requirements of all components.

Validate a component object against the configured policies.

Validate the properties of a component against the configured policies.

A default policy applies to all managed objects. You can configure the default policy to suit your requirements, or you can extend the policy service by supplying your own scripted policies.

The default policy is configured in two files:

A policy script file (

openidm/bin/defaults/script/policy.js) which defines each policy and specifies how policy validation is performed.A policy configuration file (

openidm/conf/policy.json) which specifies which policies are applicable to each resource.

The policy script file defines policy configuration in two parts:

A policy configuration object, which defines each element of the policy.

A policy implementation function, which describes the requirements that are enforced by that policy.

Together, the configuration object and the implementation function determine whether an object is valid in terms of the policy. The following extract from the policy script file configures a policy that specifies that the value of a property must contain a certain number of capital letters.

...

{ "policyId" : "at-least-X-capitals",

"clientValidation": true,

"policyExec" : "atLeastXCapitalLetters",

"policyRequirements" : ["AT_LEAST_X_CAPITAL_LETTERS"]

},

...

function atLeastXCapitalLetters(fullObject, value, params, property) {

var reg = /[(A-Z)]/g;

if (typeof value !== "string" || !value.length || value.match(reg)

=== null || value.match(reg).length < params.numCaps) {

return [ {

"policyRequirement" : "AT_LEAST_X_CAPITAL_LETTERS",

"params" : {

"numCaps": params.numCaps

}

}

];

}

return [];

}

...

To enforce user passwords that contain at least one capital letter, the previous policy ID is applied to the appropriate resource and the required number of capital letters is defined in the policy configuration file, as described in Section 7.1.2, "Policy Configuration File".

Each element of the policy is defined in a policy configuration object. The structure of a policy configuration object is as follows:

{ "policyId" : "minimum-length",

"clientValidation": true,

"policyExec" : "propertyMinLength",

"policyRequirements" : ["MIN_LENGTH"]

}

"policyId" - a unique ID that enables the

policy to be referenced by component objects. |

"clientValidation" - indicates whether the

policy decision can be made on the client. When

"clientValidation": true, the source code for the

policy decision function is returned when the client requests the

requirements for a property. |

"policyExec" - the name of the function that

contains the policy implementation. For more information, see

Section 7.1.1.2, "Policy Implementation Function". |

"policyRequirements" - an array containing

the policy requirement ID of each requirement that is associated with

the policy. Typically, a policy will validate only one requirement,

but it can validate more than one. |