Guide to configuring and integrating OpenIDM software into identity management solutions. This software offers flexible services for automating management of the identity life cycle.

ForgeRock Identity Platform™ serves as the basis for our simple and comprehensive Identity and Access Management solution. We help our customers deepen their relationships with their customers, and improve the productivity and connectivity of their employees and partners. For more information about ForgeRock and about the platform, see https://www.forgerock.com.

In this guide you will learn how to integrate OpenIDM software as part of a complete identity management solution.

This guide is written for systems integrators building solutions based on OpenIDM services. This guide describes the product functionality, and shows you how to set up and configure OpenIDM software as part of your overall identity management solution.

Most examples in the documentation are created in GNU/Linux or Mac OS X operating environments. If distinctions are necessary between operating environments, examples are labeled with the operating environment name in parentheses. To avoid repetition, file system directory names are often given only in UNIX format, as in /path/to/server, even if the text applies to C:\path\to\server as well.

Absolute path names usually begin with the placeholder /path/to/. This path might translate to /opt/, C:\Program Files\, or somewhere else on your system.

Command-line, terminal sessions are formatted as follows:

$ echo $JAVA_HOME
/path/to/jdk

Command output is sometimes formatted for narrower, more readable output even though formatting parameters are not shown in the command.

Program listings are formatted as follows:

class Test {
    public static void main(String [] args) {
        System.out.println("This is a program listing.");
    }
}

ForgeRock publishes comprehensive documentation online:

The ForgeRock Knowledge Base offers a large and increasing number of up-to-date, practical articles that help you deploy and manage ForgeRock software.

While many articles are visible to community members, ForgeRock customers have access to much more, including advanced information for customers using ForgeRock software in a mission-critical capacity.

ForgeRock product documentation, such as this document, aims to be technically accurate and complete with respect to the software documented. It is visible to everyone and covers all product features and examples of how to use them.

The ForgeRock.org site has links to source code for ForgeRock open source software, as well as links to the ForgeRock forums and technical blogs.

If you are a ForgeRock customer, raise a support ticket instead of using the forums. ForgeRock support professionals will get in touch to help you.

This chapter introduces the OpenIDM architecture, and describes component modules and services.

In this chapter you will learn:

How OpenIDM uses the OSGi framework as a basis for its modular architecture

How the infrastructure modules provide the features required for OpenIDM's core services

What those core services are and how they fit into the overall architecture

How OpenIDM provides access to the resources it manages

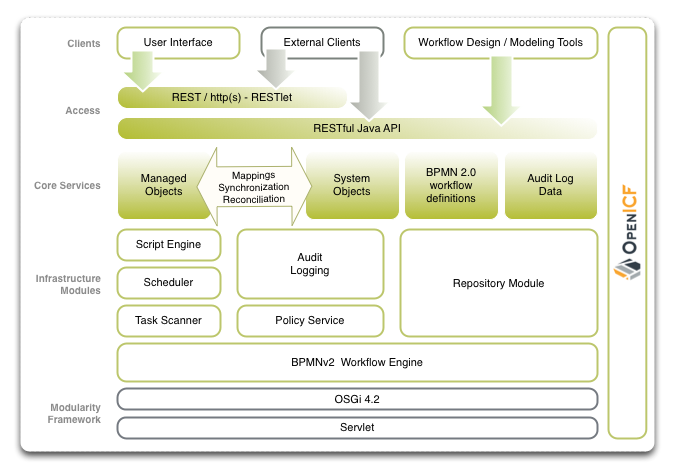

OpenIDM implements infrastructure modules that run in an OSGi framework. It exposes core services through RESTful APIs to client applications.

The following figure provides an overview of the OpenIDM architecture, which is covered in more detail in subsequent sections of this chapter.

The OpenIDM framework is based on OSGi:

- OSGi

OSGi is a module system and service platform for the Java programming language that implements a complete and dynamic component model. For a good introduction to OSGi, see the OSGi site. OpenIDM currently runs in Apache Felix, an implementation of the OSGi Framework and Service Platform.

- Servlet

The Servlet layer provides RESTful HTTP access to the managed objects and services. OpenIDM embeds the Jetty Servlet Container, which can be configured for either HTTP or HTTPS access.

Infrastructure modules provide the underlying features needed for core services:

- BPMN 2.0 Workflow Engine

OpenIDM provides an embedded workflow and business process engine based on Activiti and the Business Process Model and Notation (BPMN) 2.0 standard.

For more information, see "Integrating Business Processes and Workflows".

- Task Scanner

OpenIDM provides a task-scanning mechanism that performs a batch scan for a specified property in OpenIDM data, on a scheduled interval. The task scanner then executes a task when the value of that property matches a specified value.

For more information, see "Scanning Data to Trigger Tasks".

- Scheduler

The scheduler provides a cron-like scheduling component implemented using the Quartz library. Use the scheduler, for example, to enable regular synchronizations and reconciliations.

For more information, see "Scheduling Tasks and Events".

- Script Engine

The script engine is a pluggable module that provides the triggers and plugin points for OpenIDM. OpenIDM currently supports JavaScript and Groovy.

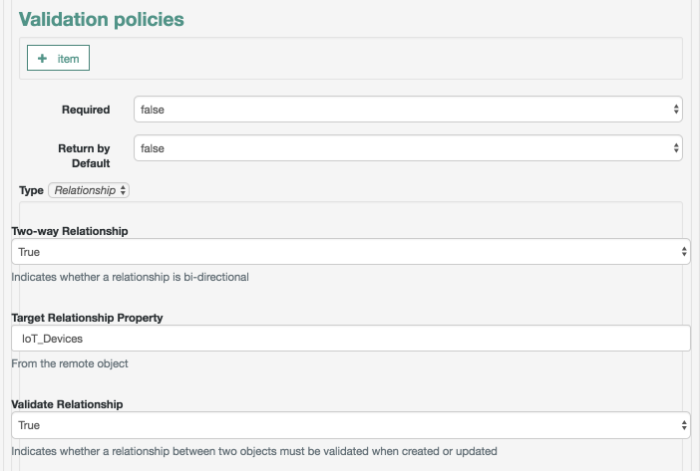

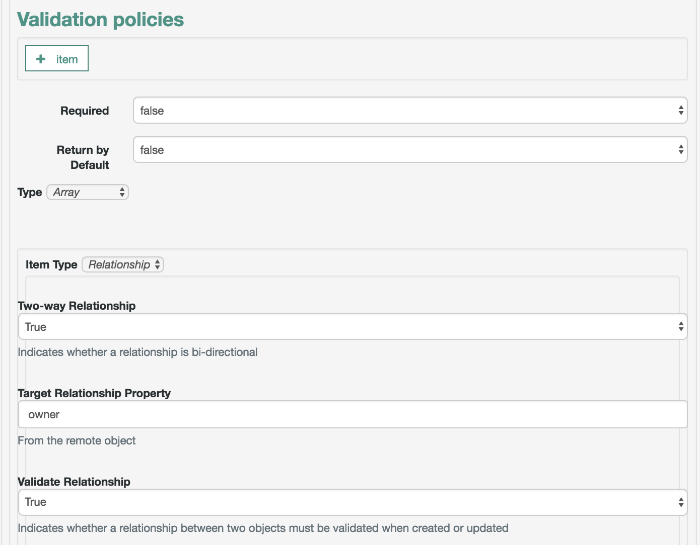

- Policy Service

OpenIDM provides an extensible policy service that applies validation requirements to objects and properties when they are created or updated.

For more information, see "Using Policies to Validate Data".

- Audit Logging

Auditing logs all relevant system activity to the configured log stores. This includes the data from reconciliation as a basis for reporting, as well as detailed activity logs to capture operations on the internal (managed) and external (system) objects.

For more information, see "Logging Audit Information".

- Repository

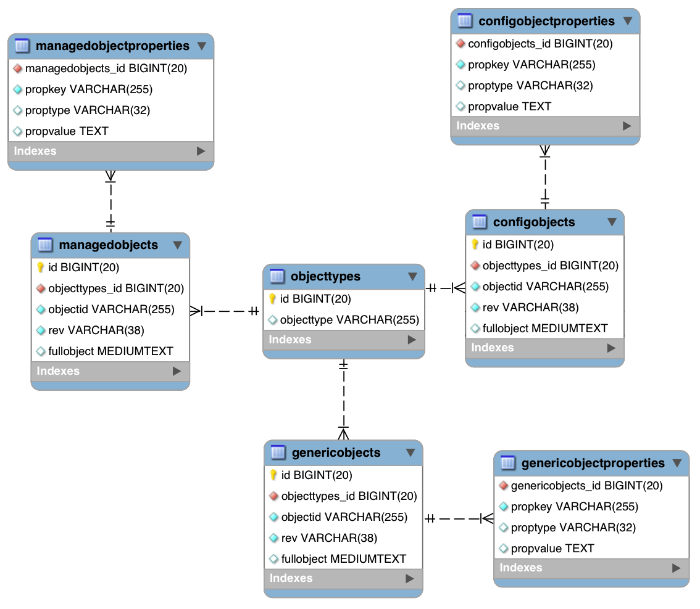

The repository provides a common abstraction for a pluggable persistence layer. OpenIDM supports reconciliation and synchronization with several major external repositories in production, including relational databases, LDAP servers, and even flat CSV and XML files.

The repository API uses a JSON-based object model with RESTful principles consistent with the other OpenIDM services. To facilitate testing, OpenIDM includes an embedded instance of OrientDB, a NoSQL database. You can then incorporate a supported internal repository, as described in "Installing a Repository For Production" in the Installation Guide.

The core services are the heart of the OpenIDM resource-oriented unified object model and architecture:

- Object Model

Artifacts handled by OpenIDM are Java object representations of the JavaScript object model as defined by JSON. The object model supports interoperability and potential integration with many applications, services, and programming languages.

OpenIDM can serialize and deserialize these structures to and from JSON as required. OpenIDM also exposes a set of triggers and functions that system administrators can define, in either JavaScript or Groovy, which can natively read and modify these JSON-based object model structures.

- Managed Objects

A managed object is an object that represents the identity-related data managed by OpenIDM. Managed objects are configurable, JSON-based data structures that OpenIDM stores in its pluggable repository. The default managed object configuration includes users and roles, but you can define any kind of managed object, for example, groups or devices.

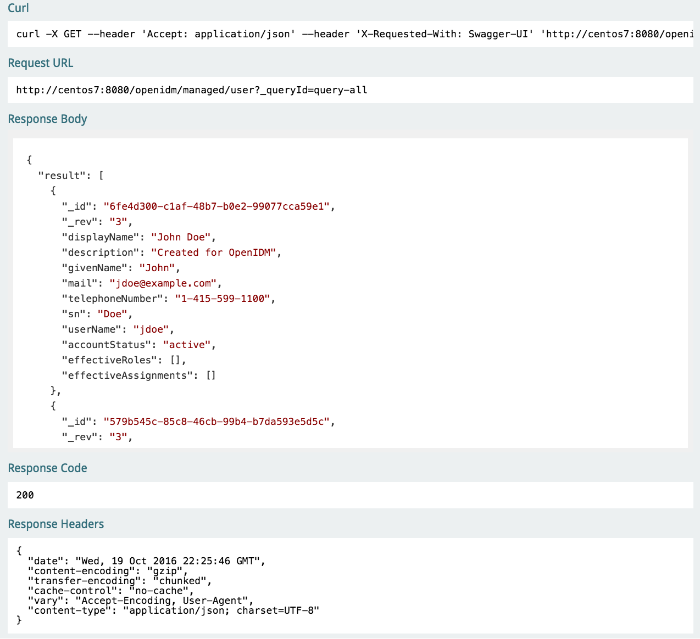

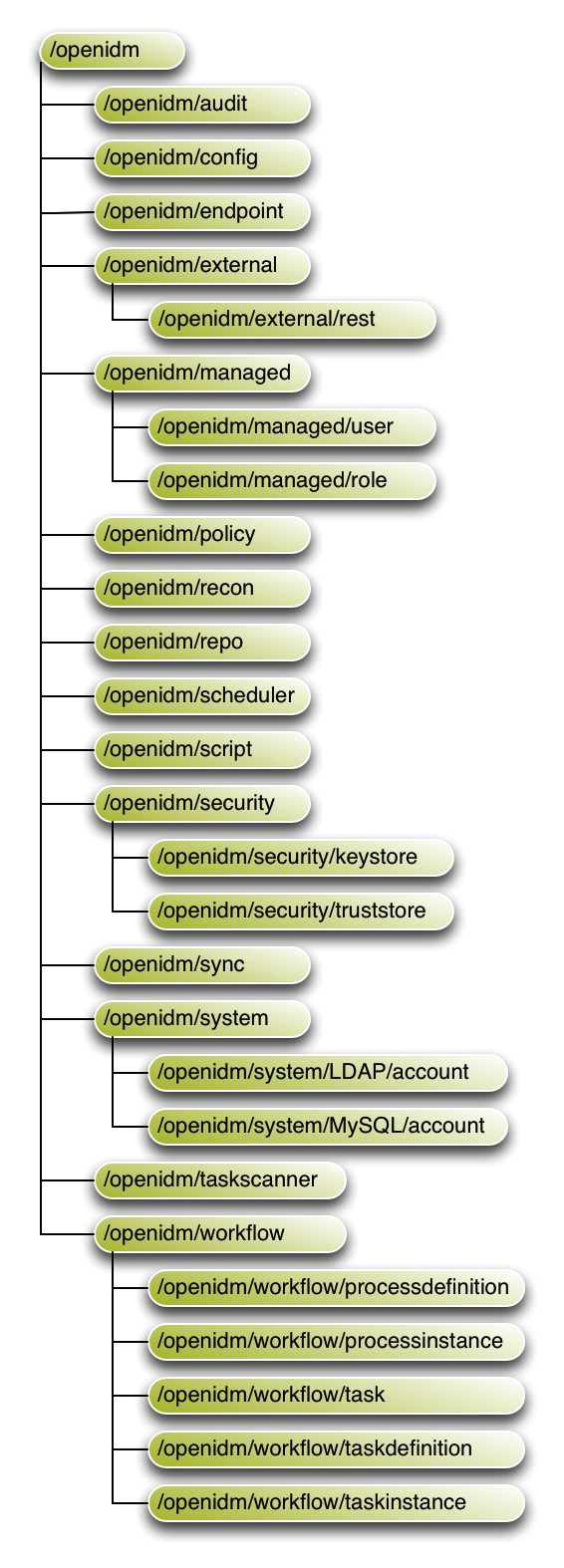

You can access managed objects over the REST interface with a query similar to the following:

$ curl \
 --header "X-OpenIDM-Username: openidm-admin" \
 --header "X-OpenIDM-Password: openidm-admin" \
 --request GET \
 "http://localhost:8080/openidm/managed/..."

- System Objects

System objects are pluggable representations of objects on external systems. For example, a user entry that is stored in an external LDAP directory is represented as a system object in OpenIDM.

System objects follow the same RESTful resource-based design principles as managed objects. They can be accessed over the REST interface with a query similar to the following:

$ curl \
 --header "X-OpenIDM-Username: openidm-admin" \
 --header "X-OpenIDM-Password: openidm-admin" \
 --request GET \
 "http://localhost:8080/openidm/system/..."

A default implementation for the OpenICF framework allows any connector object to be represented as a system object.
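The two curl queries above differ only in the resource path, so the request can be sketched once and reused. The following Python sketch builds, but does not send, an equivalent GET request. The host, port, and default administrator credentials are taken from the curl examples; the function name and the resource path `managed/user/example-id` are hypothetical placeholders.

```python
import urllib.request

# Assumed values: host, port, and default credentials from the curl examples.
BASE_URL = "http://localhost:8080/openidm"


def build_get_request(resource_path, username="openidm-admin", password="openidm-admin"):
    """Build (but do not send) a GET request for a managed or system resource."""
    url = f"{BASE_URL}/{resource_path.lstrip('/')}"
    request = urllib.request.Request(url, method="GET")
    request.add_header("X-OpenIDM-Username", username)
    request.add_header("X-OpenIDM-Password", password)
    return request


if __name__ == "__main__":
    # "managed/user/example-id" is a hypothetical resource path.
    req = build_get_request("managed/user/example-id")
    print(req.get_method(), req.full_url)
    # Sending the request requires a running server, for example:
    # with urllib.request.urlopen(req) as response:
    #     print(response.read().decode())
```

Passing a path that starts with `system/` instead of `managed/` would target a system object in the same way.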

- Mappings

Mappings define policies between source and target objects and their attributes during synchronization and reconciliation. Mappings can also define triggers for validation, customization, filtering, and transformation of source and target objects.

For more information, see "Synchronizing Data Between Resources".

- Synchronization and Reconciliation

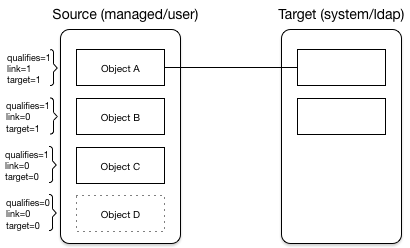

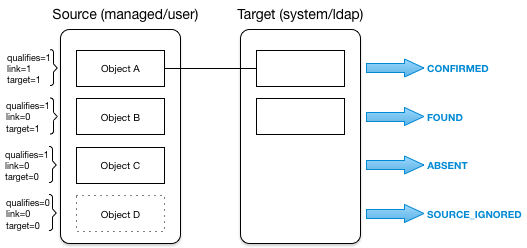

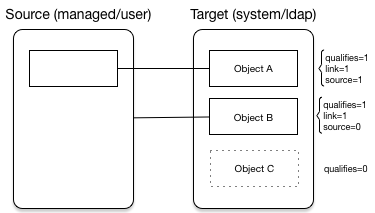

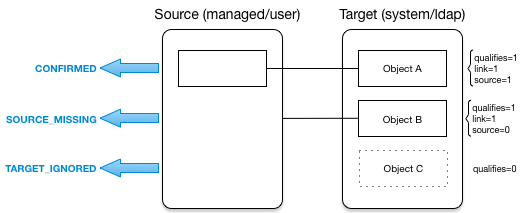

Reconciliation enables on-demand and scheduled resource comparisons between the OpenIDM managed object repository and source or target systems. Comparisons can result in different actions, depending on the mappings defined between the systems.

Synchronization enables creating, updating, and deleting resources from a source to a target system, either on demand or according to a schedule.

For more information, see "Synchronizing Data Between Resources".

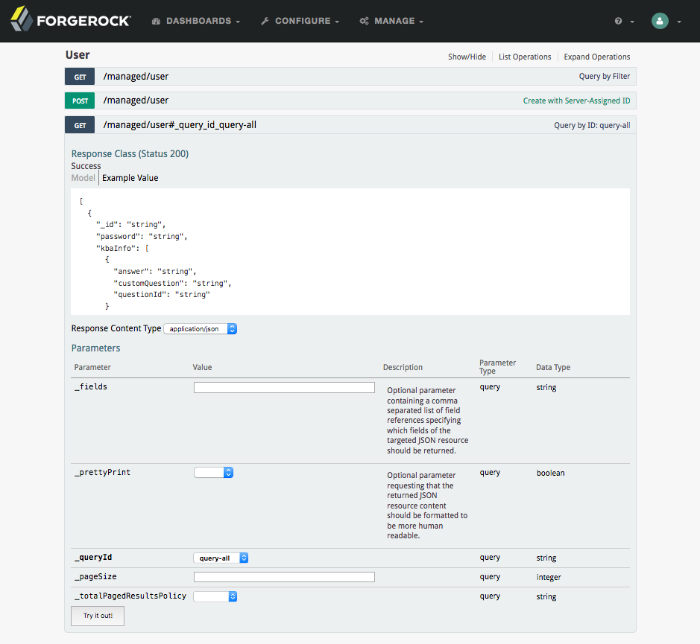

Representational State Transfer (REST) is a software architecture style for exposing resources, using the technologies and protocols of the World Wide Web. For more information on the ForgeRock REST API, see "REST API Reference".

REST interfaces are commonly tested with a curl command. Many of these commands are used in this document. They work with the standard ports associated with Java EE communications, 8080 and 8443.

To run curl over the secure port, 8443, you must either include the --insecure option or follow the instructions shown in "Restrict REST Access to the HTTPS Port". You can use those instructions with the self-signed certificate generated when OpenIDM starts, or with a *.crt file provided by a certificate authority.

In many examples in this guide, curl commands to the secure port are shown with a --cacert self-signed.crt option. Instructions for creating that self-signed.crt file are shown in "Restrict REST Access to the HTTPS Port".
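For clients that are not curl, the same two choices apply: trust a specific certificate file, or skip verification in a test setup. The following Python sketch shows a rough equivalent of the --cacert and --insecure options using the standard ssl module. The function name is hypothetical, and the mapping to curl's options is an approximation rather than an exact match.

```python
import ssl


def build_tls_context(ca_file=None, insecure=False):
    """Return an SSLContext roughly mirroring curl's --cacert / --insecure options."""
    if insecure:
        # Like curl --insecure: skip certificate and hostname checks (testing only).
        context = ssl.create_default_context()
        context.check_hostname = False
        context.verify_mode = ssl.CERT_NONE
        return context
    # Like curl --cacert FILE: trust the certificates in ca_file.
    # With ca_file=None, the platform's default trust store is used instead.
    return ssl.create_default_context(cafile=ca_file)
```

A context built with `ca_file="self-signed.crt"` could then be passed to `urllib.request.urlopen(..., context=...)` when calling the HTTPS port.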

The access layer provides the user interfaces and public APIs for accessing and managing the OpenIDM repository and its functions:

- RESTful Interfaces

OpenIDM provides REST APIs for CRUD operations, for invoking synchronization and reconciliation, and to access several other services.

For more information, see "REST API Reference".

- User Interfaces

User interfaces provide access to most of the functionality available over the REST API.

This chapter covers the scripts provided for starting and stopping OpenIDM, and describes how to verify the health of a system, that is, that all requirements are met for a successful system startup.

By default you start and stop OpenIDM in interactive mode.

To start OpenIDM interactively, open a terminal or command window, change to the openidm directory, and run the startup script:

startup.sh (UNIX)

startup.bat (Windows)

The startup script starts the server, and opens an OSGi console with a -> prompt where you can issue console commands.

The default hostname and ports for OpenIDM are set in the conf/boot/boot.properties file found in the openidm/ directory. OpenIDM is initially configured to run with HTTP on port 8080 and HTTPS on port 8443, with a hostname of localhost. For more information about changing ports and hostnames, see "Host and Port Information".

To stop the server interactively in the OSGi console, run the shutdown command:

-> shutdown

You can also start OpenIDM as a background process on UNIX and Linux. Follow these steps before starting OpenIDM for the first time.

If you have already started the server, shut it down and remove the Felix cache files under openidm/felix-cache/:

-> shutdown
...
$ rm -rf felix-cache/*

Start the server in the background. The nohup command keeps the process running after you log out, and the > logs/console.out 2>&1& part of the command redirects standard output and standard error to the console.out file and runs the process in the background:

$ nohup ./startup.sh > logs/console.out 2>&1&
[1] 2343

To stop OpenIDM running as a background process, use the shutdown.sh script:

$ ./shutdown.sh
./shutdown.sh
Stopping OpenIDM (2343)

The process identifier (PID) shown during startup should match the PID shown during shutdown.

Note

Although installations on OS X systems are not supported in production, you might want to run OpenIDM on OS X in a demo or test environment. To run OpenIDM in the background on an OS X system, take the following additional steps:

- Remove the org.apache.felix.shell.tui-*.jar bundle from the openidm/bundle directory.

- Disable ConsoleHandler logging, as described in "Disabling Logs".

By default, OpenIDM starts with the configuration, script, and binary files in the openidm/conf, openidm/script, and openidm/bin directories. You can launch OpenIDM with a different set of configuration, script, and binary files for test purposes, to manage different projects, or to run one of the included samples.

The startup.sh script enables you to specify the following elements of a running instance:

- --project-location or -p /path/to/project/directory

The project location specifies the directory with OpenIDM configuration and script files.

All configuration objects and any artifacts that are not in the bundled defaults (such as custom scripts) must be included in the project location. These objects include all files otherwise included in the openidm/conf and openidm/script directories.

For example, the following command starts the server with the configuration of Sample 1, with a project location of /path/to/openidm/samples/sample1:

$ ./startup.sh -p /path/to/openidm/samples/sample1

If you do not provide an absolute path, the project location path is relative to the system property, user.dir. OpenIDM then sets launcher.project.location to that relative directory path. Alternatively, if you start OpenIDM without the -p option, OpenIDM sets launcher.project.location to /path/to/openidm/conf.

Note

In this documentation, "your project" refers to the value of launcher.project.location.

- --working-location or -w /path/to/working/directory

The working location specifies the directory to which OpenIDM writes its database cache, audit logs, and Felix cache. The working location includes everything that is in the default db/, audit/, and felix-cache/ subdirectories.

The following command specifies that OpenIDM writes its database cache and audit data to /Users/admin/openidm/storage:

$ ./startup.sh -w /Users/admin/openidm/storage

If you do not provide an absolute path, the path is relative to the system property, user.dir. If you do not specify a working location, OpenIDM writes this data to the openidm/db, openidm/felix-cache, and openidm/audit directories.

Note that this property does not affect the location of the OpenIDM system logs. To change the location of the OpenIDM logs, edit the conf/logging.properties file.

You can also change the location of the Felix cache by editing the conf/config.properties file, or by starting OpenIDM with the -s option, described later in this section.

- --config or -c /path/to/config/file

A customizable startup configuration file (named launcher.json) enables you to specify how the OSGi Framework is started.

Unless you are working with a highly customized deployment, you should not modify the default framework configuration. This option is therefore described in more detail in "Advanced Configuration".

- --storage or -s /path/to/storage/directory

Specifies the OSGi storage location of the cached configuration files.

You can use this option to redirect output if you are installing OpenIDM on a read-only filesystem volume. For more information, see "Installing on a Read-Only Volume" in the Installation Guide. This option is also useful when you are testing different configurations. Sometimes when you start OpenIDM with two different sample configurations, one after the other, the cached configurations are merged and cause problems. Specifying a storage location creates a separate felix-cache directory in that location, and the cached configuration files remain completely separate.
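The startup options above can be summarized in a short sketch. The following Python helpers assemble a startup.sh command line from the four options and show how a relative location would resolve against user.dir. The function names are hypothetical, and the path resolution is an illustration of the rule described above, not OpenIDM's launcher code.

```python
import posixpath


def resolve_location(path, user_dir):
    """Return path unchanged if absolute, otherwise resolve it against user_dir."""
    if posixpath.isabs(path):
        return path
    return posixpath.normpath(posixpath.join(user_dir, path))


def build_startup_command(project=None, working=None, config=None, storage=None):
    """Assemble the argument list for startup.sh from the options described above."""
    command = ["./startup.sh"]
    options = (("-p", project), ("-w", working), ("-c", config), ("-s", storage))
    for flag, value in options:
        if value is not None:
            command += [flag, value]
    return command


if __name__ == "__main__":
    print(" ".join(build_startup_command(project="/path/to/openidm/samples/sample1")))
    print(resolve_location("samples/sample1", "/path/to/openidm"))
```

The resulting list could be passed to `subprocess.run` from the openidm directory; the sketch only builds it.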

By default, properties files are loaded in the following order, and property values are resolved in the reverse order:

- system.properties
- config.properties
- boot.properties

If both system and boot properties define the same attribute, the property substitution process locates the attribute in boot.properties and does not attempt to locate the property in system.properties.
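The resolution order can be illustrated with a small lookup. In this Python sketch, each properties file is modeled as a dictionary, and the search runs in the reverse of the load order, so boot.properties wins over config.properties, which wins over system.properties. The function name and the property name `example.port` are hypothetical.

```python
def resolve_property(name, system_props, config_props, boot_props):
    """Look up a property in reverse load order: boot, then config, then system."""
    for source in (boot_props, config_props, system_props):
        if name in source:
            # Stop at the first match; later files are not consulted.
            return source[name]
    return None


if __name__ == "__main__":
    system = {"example.port": "9090"}
    boot = {"example.port": "8080"}
    # The value from boot.properties is used; system.properties is never read.
    print(resolve_property("example.port", system, {}, boot))
```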

You can use variable substitution in any .json configuration file with the install, working and project locations described previously. You can substitute the following properties:

- install.location
- install.url
- working.location
- working.url
- project.location
- project.url

Property substitution takes the following syntax:

&{launcher.property}

For example, to specify the location of the OrientDB database, you can set the dbUrl property in repo.orientdb.json as follows:

"dbUrl" : "local:&{launcher.working.location}/db/openidm",

The database location is then relative to a working location defined in the startup configuration.
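The effect of the substitution syntax can be sketched with a regular expression. The following Python function replaces each `&{name}` placeholder with a value from a dictionary. It illustrates the syntax only and is not OpenIDM's implementation; leaving unknown placeholders untouched is an assumption made for this sketch.

```python
import re

# Matches &{property.name} and captures the property name.
_PLACEHOLDER = re.compile(r"&\{([^}]+)\}")


def substitute(text, properties):
    """Replace each &{name} with properties[name]; unknown names are left as-is."""
    return _PLACEHOLDER.sub(lambda m: properties.get(m.group(1), m.group(0)), text)


if __name__ == "__main__":
    props = {"launcher.working.location": "/Users/admin/openidm/storage"}
    print(substitute("local:&{launcher.working.location}/db/openidm", props))
```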

You can find more examples of property substitution in many other files in your project's conf/ subdirectory.

Note that property substitution does not work for connector reference properties. So, for example, the following configuration would not be valid:

"connectorRef" : {

"connectorName" : "&{connectorName}",

"bundleName" : "org.forgerock.openicf.connectors.ldap-connector",

"bundleVersion" : "&{LDAP.BundleVersion}"

...

The "connectorName" must be the precise string from the connector configuration. If you need to specify multiple connector version numbers, use a range of versions, for example:

"connectorRef" : {

"connectorName" : "org.identityconnectors.ldap.LdapConnector",

"bundleName" : "org.forgerock.openicf.connectors.ldap-connector",

"bundleVersion" : "[1.4.0.0,2.0.0.0)",

...
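The range "[1.4.0.0,2.0.0.0)" uses interval notation: a square bracket includes the boundary version and a parenthesis excludes it. The following Python sketch checks a dotted version against such a range. It illustrates the notation only; the function names are hypothetical, and this is not the logic that OpenICF uses to select a connector bundle.

```python
def parse_version(text):
    """Convert a dotted version such as "1.4.0.0" into a tuple of integers."""
    return tuple(int(part) for part in text.strip().split("."))


def in_range(version, version_range):
    """Check a version against interval notation such as "[1.4.0.0,2.0.0.0)"."""
    lower_inclusive = version_range[0] == "["
    upper_inclusive = version_range[-1] == "]"
    low_text, high_text = version_range[1:-1].split(",")
    low, high = parse_version(low_text), parse_version(high_text)
    candidate = parse_version(version)
    above = candidate >= low if lower_inclusive else candidate > low
    below = candidate <= high if upper_inclusive else candidate < high
    return above and below


if __name__ == "__main__":
    for v in ("1.4.0.0", "1.5.1.0", "2.0.0.0"):
        print(v, in_range(v, "[1.4.0.0,2.0.0.0)"))
```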

Due to the highly modular, configurable nature of OpenIDM, it is often difficult to assess whether a system has started up successfully, or whether the system is ready and stable after dynamic configuration changes have been made.

OpenIDM includes a health check service, with options to monitor the status of internal resources.

To monitor the status of external resources such as LDAP servers and external databases, use the commands described in "Checking the Status of External Systems Over REST".

The health check service reports on the state of the OpenIDM system and outputs this state to the OSGi console and to the log files. The system can be in one of the following states:

- STARTING - OpenIDM is starting up
- ACTIVE_READY - all of the specified requirements have been met to consider the OpenIDM system ready
- ACTIVE_NOT_READY - one or more of the specified requirements have not been met and the OpenIDM system is not considered ready
- STOPPING - OpenIDM is shutting down

You can verify the current state of an OpenIDM system with the following REST call:

$ curl \

--header "X-OpenIDM-Username: openidm-admin" \

--header "X-OpenIDM-Password: openidm-admin" \

--request GET \

"http://localhost:8080/openidm/info/ping"

{

"_id" : "",

"state" : "ACTIVE_READY",

"shortDesc" : "OpenIDM ready"

}

The information is provided by the following script:

openidm/bin/defaults/script/info/ping.js.
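A monitoring script might reduce the ping response to a single yes-or-no answer. The following Python sketch parses a response body like the one shown above and reports whether the state is ACTIVE_READY. The function name is hypothetical, and raising an error for an unrecognized state is a design choice made for this sketch.

```python
import json

# The four states that the health check service can report.
KNOWN_STATES = {"STARTING", "ACTIVE_READY", "ACTIVE_NOT_READY", "STOPPING"}


def is_ready(ping_body):
    """Return True only when the ping response reports ACTIVE_READY."""
    state = json.loads(ping_body).get("state")
    if state not in KNOWN_STATES:
        raise ValueError(f"unexpected state: {state!r}")
    return state == "ACTIVE_READY"


if __name__ == "__main__":
    sample = '{"_id": "", "state": "ACTIVE_READY", "shortDesc": "OpenIDM ready"}'
    print(is_ready(sample))
```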

You can get more information about the current OpenIDM session, beyond basic health checks, with the following REST call:

$ curl \

--header "X-OpenIDM-Username: openidm-admin" \

--header "X-OpenIDM-Password: openidm-admin" \

--request GET \

"http://localhost:8080/openidm/info/login"

{

"_id" : "",

"class" : "org.forgerock.services.context.SecurityContext",

"name" : "security",

"authenticationId" : "openidm-admin",

"authorization" : {

"id" : "openidm-admin",

"component" : "repo/internal/user",

"roles" : [ "openidm-admin", "openidm-authorized" ],

"ipAddress" : "127.0.0.1"

},

"parent" : {

"class" : "org.forgerock.caf.authentication.framework.MessageContextImpl",

"name" : "jaspi",

"parent" : {

"class" : "org.forgerock.services.context.TransactionIdContext",

"id" : "2b4ab479-3918-4138-b018-1a8fa01bc67c-288",

"name" : "transactionId",

"transactionId" : {

"value" : "2b4ab479-3918-4138-b018-1a8fa01bc67c-288",

"subTransactionIdCounter" : 0

},

"parent" : {

"class" : "org.forgerock.services.context.ClientContext",

"name" : "client",

"remoteUser" : null,

"remoteAddress" : "127.0.0.1",

"remoteHost" : "127.0.0.1",

"remotePort" : 56534,

"certificates" : "",

...

The information is provided by the following script:

openidm/bin/defaults/script/info/login.js.
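The login response nests each context inside the "parent" property of the previous one. The following Python sketch walks that chain and collects the context names. The sample data is a trimmed copy of the output above and stops at the client context, because the original output is truncated at that point; the function name is hypothetical.

```python
def context_chain(context):
    """Return the name of each context, starting at the top-level response object."""
    names = []
    while context is not None:
        names.append(context.get("name"))
        context = context.get("parent")  # None once the chain ends
    return names


# Trimmed from the sample response; deeper parents are not shown in the source.
SAMPLE = {
    "name": "security",
    "authenticationId": "openidm-admin",
    "parent": {
        "name": "jaspi",
        "parent": {
            "name": "transactionId",
            "parent": {"name": "client", "remoteAddress": "127.0.0.1"},
        },
    },
}

if __name__ == "__main__":
    print(" -> ".join(context_chain(SAMPLE)))
```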

You can extend OpenIDM monitoring beyond what you can check on the openidm/info/ping and openidm/info/login endpoints. Specifically, you can get more detailed information about the state of the:

- Operating System on the openidm/health/os endpoint
- Memory on the openidm/health/memory endpoint
- JDBC Pooling, based on the openidm/health/jdbc endpoint
- Reconciliation, on the openidm/health/recon endpoint.

You can regulate access to these endpoints as described in the following section: "Understanding the Access Configuration Script (access.js)".

With the following REST call, you can get basic information about the host operating system:

$ curl \

--header "X-OpenIDM-Username: openidm-admin" \

--header "X-OpenIDM-Password: openidm-admin" \

--request GET \

"http://localhost:8080/openidm/health/os"

{

"_id" : "",

"_rev" : "",

"availableProcessors" : 1,

"systemLoadAverage" : 0.06,

"operatingSystemArchitecture" : "amd64",

"operatingSystemName" : "Linux",

"operatingSystemVersion" : "2.6.32-504.30.3.el6.x86_64"

}

From the output, you can see that this particular system reports one available processor with the amd64 (64-bit) architecture and a system load average of 0.06, and that it runs Linux with the kernel version shown in operatingSystemVersion.

With the following REST call, you can get basic information about overall JVM memory use:

$ curl \

--header "X-OpenIDM-Username: openidm-admin" \

--header "X-OpenIDM-Password: openidm-admin" \

--request GET \

"http://localhost:8080/openidm/health/memory"

{

"_id" : "",

"_rev" : "",

"objectPendingFinalization" : 0,

"heapMemoryUsage" : {

"init" : 1073741824,

"used" : 88538392,

"committed" : 1037959168,

"max" : 1037959168

},

"nonHeapMemoryUsage" : {

"init" : 24313856,

"used" : 69255024,

"committed" : 69664768,

"max" : 224395264

}

}

The output includes information on JVM Heap and Non-Heap memory, in bytes. Briefly:

- JVM Heap memory is used to store Java objects.
- JVM Non-Heap Memory is used by Java to store loaded classes and related meta-data.
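Because the values are raw byte counts, a percentage is often easier to read. The following Python sketch computes used memory as a percentage of the maximum for each section, using the numbers from the sample output above. The function name is hypothetical. The guard for a non-positive maximum is included because a JVM can report -1 when no maximum is defined.

```python
def usage_percent(usage):
    """Return used memory as a percentage of max, or None if max is undefined."""
    if usage["max"] <= 0:
        return None  # a JVM reports -1 when the maximum is undefined
    return 100.0 * usage["used"] / usage["max"]


# Values copied from the sample openidm/health/memory response.
HEAP = {"init": 1073741824, "used": 88538392, "committed": 1037959168, "max": 1037959168}
NON_HEAP = {"init": 24313856, "used": 69255024, "committed": 69664768, "max": 224395264}

if __name__ == "__main__":
    print(f"heap: {usage_percent(HEAP):.1f}% of max")
    print(f"non-heap: {usage_percent(NON_HEAP):.1f}% of max")
```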

Running a health check on the JDBC repository is supported only if you are using the BoneCP connection pool. This is not the default connection pool, so you must make the following changes to your configuration before running this command:

- In your project's conf/datasource.jdbc-default.json file, change the connectionPool parameter as follows:

"connectionPool" : { "type" : "bonecp" }

- In your project's conf/boot/boot.properties file, enable the statistics MBean for the BoneCP connection pool:

openidm.bonecp.statistics.enabled=true

For a BoneCP connection pool, the following REST call returns basic information about the status of the JDBC repository:

$ curl \

--header "X-OpenIDM-Username: openidm-admin" \

--header "X-OpenIDM-Password: openidm-admin" \

--request GET \

"http://localhost:8080/openidm/health/jdbc"

{

"_id": "",

"_rev": "",

"com.jolbox.bonecp:type=BoneCP-4ffa60bd-5dfc-400f-850e-439c7aa27094": {

"connectionWaitTimeAvg": 0.012701142857142857,

"statementExecuteTimeAvg": 0.8084880967741935,

"statementPrepareTimeAvg": 1.6652538867562894,

"totalLeasedConnections": 0,

"totalFreeConnections": 7,

"totalCreatedConnections": 7,

"cacheHits": 0,

"cacheMiss": 0,

"statementsCached": 0,

"statementsPrepared": 31,

"connectionsRequested": 28,

"cumulativeConnectionWaitTime": 0,

"cumulativeStatementExecutionTime": 25,

"cumulativeStatementPrepareTime": 18,

"cacheHitRatio": 0,

"statementsExecuted": 31

}

}

The BoneCP metrics are self-explanatory.
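The pool statistics sit under a key that includes a generated pool identifier, so a client cannot hard-code that key. The following Python sketch skips the `_id` and `_rev` properties and summarizes the connection counts of each pool entry. The sample is a trimmed copy of the response above, and the function name is hypothetical.

```python
def pool_summaries(health_jdbc):
    """Return (pool_key, leased, free, created) for each pool in the response."""
    summaries = []
    for key, stats in health_jdbc.items():
        if key.startswith("_"):
            continue  # skip the _id and _rev properties
        summaries.append((
            key,
            stats["totalLeasedConnections"],
            stats["totalFreeConnections"],
            stats["totalCreatedConnections"],
        ))
    return summaries


# Trimmed from the sample openidm/health/jdbc response.
SAMPLE = {
    "_id": "",
    "_rev": "",
    "com.jolbox.bonecp:type=BoneCP-4ffa60bd-5dfc-400f-850e-439c7aa27094": {
        "totalLeasedConnections": 0,
        "totalFreeConnections": 7,
        "totalCreatedConnections": 7,
    },
}

if __name__ == "__main__":
    for key, leased, free, created in pool_summaries(SAMPLE):
        print(f"{key}: {leased} leased, {free} free, {created} created")
```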

With the following REST call, you can get basic information about the system demands related to reconciliation:

$ curl \

--header "X-OpenIDM-Username: openidm-admin" \

--header "X-OpenIDM-Password: openidm-admin" \

--request GET \

"http://localhost:8080/openidm/health/recon"

{

"_id" : "",

"_rev" : "",

"activeThreads" : 1,

"corePoolSize" : 10,

"largestPoolSize" : 1,

"maximumPoolSize" : 10,

"currentPoolSize" : 1

}

From the output, you can review the number of active threads used by the reconciliation, as well as the available thread pool.
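One way to read these numbers is as a utilization ratio. The following Python sketch divides the active thread count by the maximum pool size, using the values from the sample output above. The function name is hypothetical, and the ratio is an interpretation aid rather than a metric that OpenIDM reports.

```python
def recon_utilization(health_recon):
    """Return active threads as a fraction of the maximum pool size."""
    return health_recon["activeThreads"] / health_recon["maximumPoolSize"]


# Values copied from the sample openidm/health/recon response.
SAMPLE = {
    "activeThreads": 1,
    "corePoolSize": 10,
    "largestPoolSize": 1,
    "maximumPoolSize": 10,
    "currentPoolSize": 1,
}

if __name__ == "__main__":
    print(f"{recon_utilization(SAMPLE):.0%} of the reconciliation thread pool is active")
```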

You can extend or override the default information that is provided by creating your own script file and its corresponding configuration file in openidm/conf/info-name.json. Custom script files can be located anywhere, although a best practice is to place them in openidm/script/info. A sample customized script file for extending the default ping service is provided in openidm/samples/infoservice/script/info/customping.js. The corresponding configuration file is provided in openidm/samples/infoservice/conf/info-customping.json.

The configuration file has the following syntax:

{

"infocontext" : "ping",

"type" : "text/javascript",

"file" : "script/info/customping.js"

}

The parameters in the configuration file are as follows:

- infocontext specifies the relative name of the info endpoint under the info context. The information can be accessed over REST at this endpoint. For example, setting infocontext to mycontext/myendpoint would make the information accessible over REST at http://localhost:8080/openidm/info/mycontext/myendpoint.
- type specifies the type of the information source. JavaScript ("type" : "text/javascript") and Groovy ("type" : "groovy") are supported.
- file specifies the path to the JavaScript or Groovy file, if you do not provide a "source" parameter.
- source specifies the actual JavaScript or Groovy script, if you have not provided a "file" parameter.

Additional properties can be passed to the script as depicted in this configuration file (openidm/samples/infoservice/conf/info-name.json).

Script files in openidm/samples/infoservice/script/info/ have access to the following objects:

- request - the request details, including the method called and any parameters passed.
- healthinfo - the current health status of the system.
- openidm - access to the JSON resource API.
- Any additional properties that are depicted in the configuration file (openidm/samples/infoservice/conf/info-name.json).
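The relationship between the infocontext parameter and the REST endpoint can be sketched as follows. This Python function derives the endpoint URL from a configuration object and checks that the script is given either as a file or as inline source. The function name is hypothetical, and treating "both" or "neither" as an error is an assumption based on the parameter descriptions above, not confirmed product behavior.

```python
def info_endpoint(config, base_url="http://localhost:8080/openidm"):
    """Return the REST URL at which an info service configuration is exposed."""
    # Assumption: exactly one of "file" or "source" should be present.
    if ("file" in config) == ("source" in config):
        raise ValueError("provide exactly one of 'file' or 'source'")
    return f"{base_url}/info/{config['infocontext']}"


if __name__ == "__main__":
    custom_ping = {
        "infocontext": "ping",
        "type": "text/javascript",
        "file": "script/info/customping.js",
    }
    print(info_endpoint(custom_ping))
```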

The configurable OpenIDM health check service can verify the status of required modules and services for an operational system. During system startup, OpenIDM checks that these modules and services are available and reports on whether any requirements for an operational system have not been met. If dynamic configuration changes are made, OpenIDM rechecks that the required modules and services are functioning, to allow ongoing monitoring of system operation.

OpenIDM checks all required modules. Examples of those modules are shown here:

"org.forgerock.openicf.framework.connector-framework"

"org.forgerock.openicf.framework.connector-framework-internal"

"org.forgerock.openicf.framework.connector-framework-osgi"

"org.forgerock.openidm.audit"

"org.forgerock.openidm.core"

"org.forgerock.openidm.enhanced-config"

"org.forgerock.openidm.external-email"

...

"org.forgerock.openidm.system"

"org.forgerock.openidm.ui"

"org.forgerock.openidm.util"

"org.forgerock.commons.org.forgerock.json.resource"

"org.forgerock.commons.org.forgerock.util"

"org.forgerock.openidm.security-jetty"

"org.forgerock.openidm.jetty-fragment"

"org.forgerock.openidm.quartz-fragment"

"org.ops4j.pax.web.pax-web-extender-whiteboard"

"org.forgerock.openidm.scheduler"

"org.ops4j.pax.web.pax-web-jetty-bundle"

"org.forgerock.openidm.repo-jdbc"

"org.forgerock.openidm.repo-orientdb"

"org.forgerock.openidm.config"

"org.forgerock.openidm.crypto"

OpenIDM checks all required services. Examples of those services are shown here:

"org.forgerock.openidm.config"

"org.forgerock.openidm.provisioner"

"org.forgerock.openidm.provisioner.openicf.connectorinfoprovider"

"org.forgerock.openidm.external.rest"

"org.forgerock.openidm.audit"

"org.forgerock.openidm.policy"

"org.forgerock.openidm.managed"

"org.forgerock.openidm.script"

"org.forgerock.openidm.crypto"

"org.forgerock.openidm.recon"

"org.forgerock.openidm.info"

"org.forgerock.openidm.router"

"org.forgerock.openidm.scheduler"

"org.forgerock.openidm.scope"

"org.forgerock.openidm.taskscanner"

You can replace the list of required modules and services, or add to it, by adding the following lines to your project's conf/boot/boot.properties file. Bundles and services are specified as a list of symbolic names, separated by commas:

- openidm.healthservice.reqbundles - overrides the default required bundles.
- openidm.healthservice.reqservices - overrides the default required services.
- openidm.healthservice.additionalreqbundles - specifies required bundles (in addition to the default list).
- openidm.healthservice.additionalreqservices - specifies required services (in addition to the default list).

By default, OpenIDM gives the system 15 seconds to start up all the required bundles and services, before the system readiness is assessed. Note that this is not the total start time, but the time required to complete the service startup after the framework has started. You can change this default by setting the value of the servicestartmax property (in milliseconds) in your project's conf/boot/boot.properties file. This example sets the startup time to five seconds:

openidm.healthservice.servicestartmax=5000
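The health service properties can be generated rather than typed by hand. The following Python sketch produces boot.properties lines for additional required bundles and services, and converts a startup allowance in seconds to the milliseconds that servicestartmax expects. The function name and the bundle name `com.example.mybundle` are hypothetical.

```python
def health_service_properties(extra_bundles=(), extra_services=(), start_max_seconds=None):
    """Return boot.properties lines for the health check service settings."""
    lines = []
    if extra_bundles:
        # Symbolic names are joined into a comma-separated list.
        lines.append("openidm.healthservice.additionalreqbundles=" + ",".join(extra_bundles))
    if extra_services:
        lines.append("openidm.healthservice.additionalreqservices=" + ",".join(extra_services))
    if start_max_seconds is not None:
        # servicestartmax is expressed in milliseconds.
        lines.append(f"openidm.healthservice.servicestartmax={int(start_max_seconds * 1000)}")
    return lines


if __name__ == "__main__":
    # "com.example.mybundle" is a hypothetical bundle symbolic name.
    for line in health_service_properties(["com.example.mybundle"], start_max_seconds=5):
        print(line)
```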

On a running OpenIDM instance, you can list the installed modules and their states by typing the following command in the OSGi console. (The output will vary by configuration):

-> scr list

Id State Name

[ 12] [active ] org.forgerock.openidm.endpoint

[ 13] [active ] org.forgerock.openidm.endpoint

[ 14] [active ] org.forgerock.openidm.endpoint

[ 15] [active ] org.forgerock.openidm.endpoint

[ 16] [active ] org.forgerock.openidm.endpoint

...

[ 34] [active ] org.forgerock.openidm.taskscanner

[ 20] [active ] org.forgerock.openidm.external.rest

[ 6] [active ] org.forgerock.openidm.router

[ 33] [active ] org.forgerock.openidm.scheduler

[ 19] [unsatisfied ] org.forgerock.openidm.external.email

[ 11] [active ] org.forgerock.openidm.sync

[ 25] [active ] org.forgerock.openidm.policy

[ 8] [active ] org.forgerock.openidm.script

[ 10] [active ] org.forgerock.openidm.recon

[ 4] [active ] org.forgerock.openidm.http.contextregistrator

[ 1] [active ] org.forgerock.openidm.config

[ 18] [active ] org.forgerock.openidm.endpointservice

[ 30] [unsatisfied ] org.forgerock.openidm.servletfilter

[ 24] [active ] org.forgerock.openidm.infoservice

[ 21] [active ] org.forgerock.openidm.authentication

->
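When the list is long, the components that are not active are the ones worth a closer look. The following Python sketch parses captured scr list output in the format shown above and returns the identifier and name of every component in the unsatisfied state. The function name is hypothetical, and the regular expression assumes the column layout of this sample output.

```python
import re

# Matches rows such as "[ 19] [unsatisfied ] org.forgerock.openidm.external.email".
_ROW = re.compile(r"^\[\s*(\d+)\]\s*\[(\w+)\s*\]\s*(\S+)$")


def unsatisfied_components(scr_list_output):
    """Return (id, name) for each component whose state is 'unsatisfied'."""
    result = []
    for line in scr_list_output.splitlines():
        match = _ROW.match(line.strip())
        if match and match.group(2) == "unsatisfied":
            result.append((int(match.group(1)), match.group(3)))
    return result


SAMPLE = """\
Id State Name
[ 6] [active ] org.forgerock.openidm.router
[ 19] [unsatisfied ] org.forgerock.openidm.external.email
[ 30] [unsatisfied ] org.forgerock.openidm.servletfilter
[ 21] [active ] org.forgerock.openidm.authentication
"""

if __name__ == "__main__":
    for component_id, name in unsatisfied_components(SAMPLE):
        print(component_id, name)
```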

To display additional information about a particular module or service, run the following command, substituting the Id of that module from the preceding list:

-> scr info Id

The following example displays additional information about the router service:

-> scr info 9

ID: 9

Name: org.forgerock.openidm.router

Bundle: org.forgerock.openidm.api-servlet (127)

State: active

Default State: enabled

Activation: immediate

Configuration Policy: optional

Activate Method: activate (declared in the descriptor)

Deactivate Method: deactivate (declared in the descriptor)

Modified Method: -

Services: org.forgerock.json.resource.ConnectionFactory

java.io.Closeable

java.lang.AutoCloseable

Service Type: service

Reference: requestHandler

Satisfied: satisfied

Service Name: org.forgerock.json.resource.RequestHandler

Target Filter: (org.forgerock.openidm.router=*)

Multiple: single

Optional: mandatory

Policy: static

...

Properties:

component.id = 9

component.name = org.forgerock.openidm.router

felix.fileinstall.filename = file:/path/to/openidm-latest/conf/router.json

jsonconfig = {

"filters" : [

{

"condition" : {

"type" : "text/javascript",

"source" : "context.caller.external === true || context.current.name === 'selfservice'"

},

"onRequest" : {

"type" : "text/javascript",

"file" : "router-authz.js"

}

},

{

"pattern" : "^(managed|system|repo/internal)($|(/.+))",

"onRequest" : {

"type" : "text/javascript",

"source" : "require('policyFilter').runFilter()"

},

"methods" : [

"create",

"update"

]

},

{

"pattern" : "repo/internal/user.*",

"onRequest" : {

"type" : "text/javascript",

"source" : "request.content.password = require('crypto').hash(request.content.password);"

},

"methods" : [

"create",

"update"

]

}

]

}

maintenanceFilter.target = (service.pid=org.forgerock.openidm.maintenance)

requestHandler.target = (org.forgerock.openidm.router=*)

service.description = OpenIDM Common REST Servlet Connection Factory

service.pid = org.forgerock.openidm.router

service.vendor = ForgeRock AS.

->

To debug custom libraries, you can start OpenIDM with the option to use the Java Platform Debugger Architecture (JPDA):

Start OpenIDM with the jpda option:

$ cd /path/to/openidm

$ ./startup.sh jpda

Executing ./startup.sh...

Using OPENIDM_HOME: /path/to/openidm

Using OPENIDM_OPTS: -Xmx1024m -Xms1024m -Denvironment=PROD -Djava.compiler=NONE -Xnoagent -Xdebug -Xrunjdwp:transport=dt_socket,address=5005,server=y,suspend=n

Using LOGGING_CONFIG: -Djava.util.logging.config.file=/path/to/openidm/conf/logging.properties

Listening for transport dt_socket at address: 5005

Using boot properties at /path/to/openidm/conf/boot/boot.properties

-> OpenIDM version "5.0.0" (revision: xxxx)

OpenIDM ready

The relevant JPDA options are outlined in the startup script (startup.sh).

In your IDE, attach a Java debugger to the JVM via socket, on port 5005.
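Before you attach a debugger, it can help to confirm that something is listening on the debug port. The following sketch only tests TCP reachability. The function name is hypothetical, and the host and port defaults are assumptions taken from the startup output above. Note that the JVM may log a failed debugger handshake when a probe connects and disconnects without speaking the debug protocol.

```python
import socket


def debug_port_open(host="localhost", port=5005, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host unreachable.
        return False


if __name__ == "__main__":
    state = "open" if debug_port_open() else "closed"
    print(f"JPDA debug port 5005 is {state}")
```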

Caution

This interface is internal and subject to change. If you depend on this interface, contact ForgeRock support.

OpenIDM provides a script that generates an initialization script to run OpenIDM as a service on Linux systems. You can start the script as the root user, or configure it to start during the boot process.

When OpenIDM runs as a service, logs are written to the directory in which OpenIDM was installed.

To run OpenIDM as a service, take the following steps:

If you have not yet installed OpenIDM, follow the procedure described in "Preparing to Install and Run Servers" in the Installation Guide.

Run the RC script:

$ cd /path/to/openidm/bin

$ ./create-openidm-rc.sh

As a user with administrative privileges, copy the openidm script to the /etc/init.d directory:

$ sudo cp openidm /etc/init.d/

If you run Linux with SELinux enabled, change the file context of the newly copied script with the following command:

$ sudo restorecon /etc/init.d/openidm

You can verify the change to SELinux contexts with the ls -Z /etc/init.d command. For consistency, change the user context to match other scripts in the same directory with the sudo chcon -u system_u /etc/init.d/openidm command.

Run the appropriate commands to add OpenIDM to the list of RC services:

On Red Hat-based systems, run the following commands:

$ sudo chkconfig --add openidm

$ sudo chkconfig openidm on

On Debian/Ubuntu systems, run the following command:

$ sudo update-rc.d openidm defaults

Adding system startup for /etc/init.d/openidm ...

/etc/rc0.d/K20openidm -> ../init.d/openidm

/etc/rc1.d/K20openidm -> ../init.d/openidm

/etc/rc6.d/K20openidm -> ../init.d/openidm

/etc/rc2.d/S20openidm -> ../init.d/openidm

/etc/rc3.d/S20openidm -> ../init.d/openidm

/etc/rc4.d/S20openidm -> ../init.d/openidm

/etc/rc5.d/S20openidm -> ../init.d/openidm

Note the output, as Debian/Ubuntu adds start and kill scripts to appropriate runlevels.

When you run the command, you may get the following warning message:

update-rc.d: warning: /etc/init.d/openidm missing LSB information

You can safely ignore that message.

As an administrative user, start the OpenIDM service:

$ sudo /etc/init.d/openidm start

Alternatively, reboot the system to start the OpenIDM service automatically.

(Optional) The following commands stop and restart the service:

$ sudo /etc/init.d/openidm stop

$ sudo /etc/init.d/openidm restart

If you have set up a deployment of OpenIDM in a custom directory, such as /path/to/openidm/production, you can modify the /etc/init.d/openidm script.

Open the openidm script in a text editor and navigate to the START_CMD line.

At the end of the command, you should see the following line:

org.forgerock.commons.launcher.Main -c bin/launcher.json > logs/server.out 2>&1 &"

Include the path to the production directory. In this case, you would add -p production as shown:

org.forgerock.commons.launcher.Main -c bin/launcher.json -p production > logs/server.out 2>&1 &"

Save the openidm script file in the /etc/init.d directory. The sudo /etc/init.d/openidm start command should now start OpenIDM with the files in your production subdirectory.
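If you maintain several hosts, you might prefer to script this edit instead of making it by hand. The following sketch shows only the string manipulation. The function name is hypothetical, and it assumes that the START_CMD line contains the exact text "-c bin/launcher.json" shown above.

```python
def add_project_option(start_cmd, project_dir):
    """Insert '-p <project_dir>' right after the launcher configuration argument."""
    marker = "-c bin/launcher.json"
    if marker not in start_cmd:
        raise ValueError("launcher configuration argument not found")
    if f"{marker} -p " in start_cmd:
        # A project directory is already set, so leave the command unchanged.
        return start_cmd
    return start_cmd.replace(marker, f"{marker} -p {project_dir}", 1)


original = 'org.forgerock.commons.launcher.Main -c bin/launcher.json > logs/server.out 2>&1 &"'
print(add_project_option(original, "production"))
```

Running the function a second time on its own output leaves the command unchanged, so the edit is safe to repeat.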

This chapter describes the basic command-line interface (CLI). The CLI includes a number of utilities for managing an OpenIDM instance.

All of the utilities are subcommands of the cli.sh (UNIX) or cli.bat (Windows) scripts. To use the utilities, you can either run them as subcommands, or launch the cli script first, and then run the utility. For example, to run the encrypt utility on a UNIX system:

$ cd /path/to/openidm

$ ./cli.sh

Using boot properties at /path/to/openidm/conf/boot/boot.properties

openidm# encrypt ....

or

$ cd /path/to/openidm

$ ./cli.sh encrypt ...

By default, the command-line utilities run with the properties defined in your project's conf/boot/boot.properties file.

If you run the cli.sh command by itself, it opens an OpenIDM-specific shell prompt:

openidm#

The startup and shutdown scripts are not discussed in this chapter. For information about these scripts, see "Starting and Stopping the Server".

The following sections describe the subcommands and their use. Examples assume that you are running the commands on a UNIX system. For Windows systems, use cli.bat instead of cli.sh.

For a list of subcommands available from the openidm# prompt, run the cli.sh help command. The help and exit options shown below are self-explanatory. The other subcommands are explained in the subsections that follow:

local:keytool Export or import a SecretKeyEntry.

The Java Keytool does not allow for exporting or importing SecretKeyEntries.

local:encrypt Encrypt the input string.

local:secureHash Hash the input string.

local:validate Validates all json configuration files in the configuration

(default: /conf) folder.

basic:help Displays available commands.

basic:exit Exit from the console.

remote:update Update the system with the provided update file.

remote:configureconnector Generate connector configuration.

remote:configexport Exports all configurations.

remote:configimport Imports the configuration set from local file/directory.

The following options are common to the configexport, configimport, and configureconnector subcommands:

- -u or --user USER[:PASSWORD]

Allows you to specify the server user and password. Specifying a username is mandatory. If you do not specify a username, the following error is output to the OSGi console: Remote operation failed: Unauthorized. If you do not specify a password, you are prompted for one. This option is used by all three subcommands.

- --url URL

The URL of the OpenIDM REST service. The default URL is http://localhost:8080/openidm/. This can be used to import configuration files from a remote running instance of OpenIDM. This option is used by all three subcommands.

- -P or --port PORT

The port number associated with the OpenIDM REST service. If specified, this option overrides any port number specified with the --url option. The default port is 8080. This option is used by all three subcommands.
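When you call these subcommands from a script, it is easy to forget the mandatory user option. The following sketch assembles an argument list from the three common options described above. The helper name and its parameters are hypothetical and are not part of the OpenIDM CLI. Only the option names come from this section.

```python
def build_cli_args(subcommand, user, password=None, url=None, port=None, extra=()):
    """Build an argument list for cli.sh using the common remote options."""
    if subcommand not in ("configexport", "configimport", "configureconnector"):
        raise ValueError(f"unsupported subcommand: {subcommand}")
    if not user:
        raise ValueError("a username is mandatory")
    # Without a password, the CLI prompts for one interactively.
    credentials = f"{user}:{password}" if password else user
    args = ["./cli.sh", subcommand, "--user", credentials]
    if url is not None:
        args += ["--url", url]
    if port is not None:
        # A port given here overrides any port in the --url value.
        args += ["--port", str(port)]
    return args + list(extra)


print(" ".join(build_cli_args("configexport", "openidm-admin", "openidm-admin",
                              extra=["/tmp/conf"])))
```

The resulting list can be passed to subprocess.run from the OpenIDM installation directory.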

The configexport subcommand exports all configuration objects to a specified location, enabling you to reuse a system configuration in another environment. For example, you can test a configuration in a development environment, then export it and import it into a production environment. This subcommand also enables you to inspect the active configuration of an OpenIDM instance.

OpenIDM must be running when you execute this command.

Usage is as follows:

$ ./cli.sh configexport --user username:password export-location

For example:

$ ./cli.sh configexport --user openidm-admin:openidm-admin /tmp/conf

On Windows systems, the export-location must be provided in quotation marks, for example:

C:\openidm\cli.bat configexport --user openidm-admin:openidm-admin "C:\temp\openidm"

Configuration objects are exported as .json files to the specified directory. The command creates the directory if needed. Configuration files that are present in this directory are renamed as backup files, with a timestamp, for example, audit.json.2014-02-19T12-00-28.bkp, and are not overwritten. The following configuration objects are exported:

The internal repository table configuration (repo.orientdb.json or repo.jdbc.json) and the datasource connection configuration, for JDBC repositories (datasource.jdbc-default.json)

The script configuration (script.json)

The log configuration (audit.json)

The authentication configuration (authentication.json)

The cluster configuration (cluster.json)

The configuration of a connected SMTP email server (external.email.json)

Custom configuration information (info-name.json)

The managed object configuration (managed.json)

The connector configuration (provisioner.openicf-*.json)

The router service configuration (router.json)

The scheduler service configuration (scheduler.json)

Any configured schedules (schedule-*.json)

Standard knowledge-based authentication questions (selfservice.kba.json)

The synchronization mapping configuration (sync.json)

If workflows are defined, the configuration of the workflow engine (workflow.json) and the workflow access configuration (process-access.json)

Any configuration files related to the user interface (ui-*.json)

The configuration of any custom endpoints (endpoint-*.json)

The configuration of servlet filters (servletfilter-*.json)

The policy configuration (policy.json)
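After an export, you may want to check which files in the export directory are configuration objects and which are timestamped backups. The following sketch classifies file names against the patterns listed above. The function name is hypothetical, and the "info-*.json" glob is an assumed reading of the "info-name.json" entry.

```python
from fnmatch import fnmatch

# File name patterns taken from the list of exported objects. "info-*.json"
# is an assumed glob for the "info-name.json" custom configuration files.
EXPORTED_PATTERNS = [
    "repo.orientdb.json", "repo.jdbc.json", "datasource.jdbc-default.json",
    "script.json", "audit.json", "authentication.json", "cluster.json",
    "external.email.json", "info-*.json", "managed.json",
    "provisioner.openicf-*.json", "router.json", "scheduler.json",
    "schedule-*.json", "selfservice.kba.json", "sync.json", "workflow.json",
    "process-access.json", "ui-*.json", "endpoint-*.json",
    "servletfilter-*.json", "policy.json",
]


def classify(filename):
    """Return 'backup', 'config', or 'other' for a file in an export directory."""
    if filename.endswith(".bkp"):
        return "backup"
    if any(fnmatch(filename, pattern) for pattern in EXPORTED_PATTERNS):
        return "config"
    return "other"


for name in ["audit.json", "audit.json.2014-02-19T12-00-28.bkp", "notes.txt"]:
    print(name, "->", classify(name))
```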

The configimport subcommand imports configuration objects from the specified directory, enabling you to reuse a system configuration from another environment. For example, you can test a configuration in a development environment, then export it and import it into a production environment.

The command updates the existing configuration from the import-location over the OpenIDM REST interface. By default, if configuration objects are present in the import-location and not in the existing configuration, these objects are added. If configuration objects are present in the existing location but not in the import-location, these objects are left untouched in the existing configuration.

The subcommand takes the following options:

- -r, --replaceall, --replaceAll

Replaces the entire list of configuration files with the files in the specified import location.

Note that this option wipes out the existing configuration and replaces it with the configuration in the import-location. Objects in the existing configuration that are not present in the import-location are deleted.

- --retries (integer)

New in OpenIDM 5.0.0, this option specifies the number of times the command should attempt to update the configuration if OpenIDM is not ready.

Default value: 10

- --retryDelay (integer)

New in OpenIDM 5.0.0, this option specifies the delay (in milliseconds) between configuration update retries if OpenIDM is not ready.

Default value: 500
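The difference between the default behavior and --replaceAll can be illustrated with plain dictionaries. This is a conceptual sketch only, not how the CLI is implemented: the function name is hypothetical, and each dictionary key stands for one configuration object.

```python
def import_config(existing, imported, replace_all=False):
    """Return the configuration that results from importing `imported`."""
    if replace_all:
        # --replaceAll: objects missing from the import location are dropped.
        return dict(imported)
    merged = dict(existing)   # objects only in the existing configuration stay
    merged.update(imported)   # imported objects are added or updated
    return merged


existing = {"audit": "v1", "router": "v1"}
imported = {"audit": "v2", "sync": "v2"}
print(import_config(existing, imported))
print(import_config(existing, imported, replace_all=True))
```

In the default case the router object survives the import. With replace_all set, it is removed because it is absent from the import location.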

Usage is as follows:

$ ./cli.sh configimport --user username:password [--replaceAll] [--retries integer] [--retryDelay integer] import-location

For example:

$ ./cli.sh configimport --user openidm-admin:openidm-admin --retries 5 --retryDelay 250 --replaceAll /tmp/conf

On Windows systems, the import-location must be provided in quotation marks, for example:

C:\openidm\cli.bat configimport --user openidm-admin:openidm-admin --replaceAll "C:\temp\openidm"
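The interaction of the --retries and --retryDelay options can be pictured as a simple loop. The following sketch is an illustration under assumptions: the function name and the callback are hypothetical, and whether the CLI counts the first attempt toward the retry limit is not stated in this guide.

```python
import time


def update_with_retries(attempt_update, retries=10, retry_delay_ms=500, sleep=time.sleep):
    """Call attempt_update() until it returns True or the attempts are used up."""
    for attempt in range(1, retries + 1):
        if attempt_update():
            return attempt  # number of attempts that were needed
        if attempt < retries:
            # Wait before the next attempt; the delay is given in milliseconds.
            sleep(retry_delay_ms / 1000.0)
    raise RuntimeError(f"server not ready after {retries} attempts")


# Simulated server that becomes ready on the third attempt.
responses = iter([False, False, True])
print(update_with_retries(lambda: next(responses), retries=5, retry_delay_ms=250,
                          sleep=lambda seconds: None))
```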

Configuration objects are imported as .json files from the specified directory to the conf directory. The configuration objects that are imported are the same as those for the export command, described in the previous section.

The configureconnector subcommand generates a configuration for an OpenICF connector.

Usage is as follows:

$ ./cli.sh configureconnector --user username:password --name connector-name

Select the type of connector that you want to configure. The following example configures a new XML connector:

$ ./cli.sh configureconnector --user openidm-admin:openidm-admin --name myXmlConnector

Starting shell in /path/to/openidm

Using boot properties at /path/to/openidm/conf/boot/boot.properties

0. XML Connector version 1.1.0.3

1. SSH Connector version 1.4.1.0

2. LDAP Connector version 1.4.3.0

3. Kerberos Connector version 1.4.2.0

4. Scripted SQL Connector version 1.4.3.0

5. Scripted REST Connector version 1.4.3.0

6. Scripted CREST Connector version 1.4.3.0

7. Scripted Poolable Groovy Connector version 1.4.3.0

8. Scripted Groovy Connector version 1.4.3.0

9. Database Table Connector version 1.1.0.2

10. CSV File Connector version 1.5.1.4

11. Exit

Select [0..11]: 0

Edit the configuration file and run the command again. The configuration was saved to /openidm/temp/provisioner.openicf-myXmlConnector.json

The basic configuration is saved in a file named /openidm/temp/provisioner.openicf-connector-name.json. Edit the configurationProperties parameter in this file to complete the connector configuration. For an XML connector, you can use the schema definitions in Sample 1 for an example configuration:

"configurationProperties" : {

"xmlFilePath" : "samples/sample1/data/xmlConnectorData.xml",

"createFileIfNotExists" : false,

"xsdFilePath" : "samples/sample1/data/resource-schema-extension.xsd",

"xsdIcfFilePath" : "samples/sample1/data/resource-schema-1.xsd"

},

For more information about the connector configuration properties, see "Configuring Connectors".
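If you prefer to script the edit of the generated file, you can load it as JSON, merge in the properties, and write it back. The following sketch works on a JSON string. The function name is hypothetical, and the generated content shown here is a trimmed-down stand-in rather than real CLI output.

```python
import json


def set_configuration_properties(config_text, properties):
    """Return the connector configuration JSON with configurationProperties merged in."""
    config = json.loads(config_text)
    config.setdefault("configurationProperties", {}).update(properties)
    return json.dumps(config, indent=4)


# Trimmed-down stand-in for a generated provisioner file (hypothetical content).
generated = '{"name": "myXmlConnector", "configurationProperties": {"xsdFilePath": null}}'
print(set_configuration_properties(generated, {
    "createFileIfNotExists": False,
    "xsdFilePath": "samples/sample1/data/resource-schema-extension.xsd",
}))
```

Properties that are not mentioned in the update are kept as they were in the generated file.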

When you have modified the file, run the configureconnector command again so that OpenIDM can pick up the new connector configuration:

$ ./cli.sh configureconnector --user openidm-admin:openidm-admin --name myXmlConnector

Executing ./cli.sh...

Starting shell in /path/to/openidm

Using boot properties at /path/to/openidm/conf/boot/boot.properties

Configuration was found and read from: /path/to/openidm/temp/provisioner.openicf-myXmlConnector.json

You can now copy the new provisioner.openicf-myXmlConnector.json file to the conf/ subdirectory.

You can also configure connectors over the REST interface, or through the Admin UI. For more information, see "Creating Default Connector Configurations" and "Adding New Connectors from the Admin UI".

The encrypt subcommand encrypts an input string, or JSON object, provided at the command line. This subcommand can be used to encrypt passwords, or other sensitive data, to be stored in the OpenIDM repository. The encrypted value is output to standard output and provides details of the cryptography key that is used to encrypt the data.

Usage is as follows:

$ ./cli.sh encrypt [-j] string

If you do not enter the string as part of the command, the command prompts for the string to be encrypted. If you enter the string as part of the command, any special characters, for example quotation marks, must be escaped.

The -j option indicates that the string to be encrypted is a JSON object, and validates the object. If the object is malformed JSON and you use the -j option, the command throws an error. It is easier to input JSON objects in interactive mode. If you input the JSON object on the command-line, the object must be surrounded by quotes and any special characters, including curly braces, must be escaped. The rules for escaping these characters are fairly complex. For more information, see section 4.8.2 of the OSGi draft specification.

For example:

$ ./cli.sh encrypt -j '\{\"password\":\"myPassw0rd\"\}'

The following example encrypts a normal string value:

$ ./cli.sh encrypt mypassword

Executing ./cli.sh...

Starting shell in /path/to/openidm

Using boot properties at /path/to/openidm/conf/boot/boot.properties

-----BEGIN ENCRYPTED VALUE-----

{

"$crypto" : {

"type" : "x-simple-encryption",

"value" : {

"cipher" : "AES/CBC/PKCS5Padding",

"salt" : "0pRncNLTJ6ZySHfV4DEtgA==",

"data" : "pIrCCkLPhBt0rbGXiZBHkw==",

"iv" : "l1Hau6nf2zizQSib8kkW0g==",

"key" : "openidm-sym-default",

"mac" : "SoqfhpvhBVuIkux8mztpeQ=="

}

}

}

------END ENCRYPTED VALUE------

The following example prompts for a JSON object to be encrypted:

$ ./cli.sh encrypt -j

Using boot properties at /path/to/openidm/conf/boot/boot.properties

Enter the Json value

> Press ctrl-D to finish input

Start data input:

{"password":"myPassw0rd"}

^D

-----BEGIN ENCRYPTED VALUE-----

{

"$crypto" : {

"type" : "x-simple-encryption",

"value" : {

"cipher" : "AES/CBC/PKCS5Padding",

"salt" : "vdz6bUztiT6QsExNrZQAEA==",

"data" : "RgMLRbX0guxF80nwrtaZkkoFFGqSQdNWF7Ve0zS+N1I=",

"iv" : "R9w1TcWfbd9FPmOjfvMhZQ==",

"key" : "openidm-sym-default",

"mac" : "9pXtSKAt9+dO3Mu0NlrJsQ=="

}

}

}

------END ENCRYPTED VALUE------

The secureHash subcommand hashes an input string, or JSON object, using the specified hash algorithm. This subcommand can be used to hash password values, or other sensitive data, to be stored in the OpenIDM repository. The hashed value is output to standard output and provides details of the algorithm that was used to hash the data.

Usage is as follows:

$ ./cli.sh secureHash --algorithm [-j] string

The -a or --algorithm option specifies the hash algorithm to use. OpenIDM supports the following hash algorithms: MD5, SHA-1, SHA-256, SHA-384, and SHA-512. If you do not specify a hash algorithm, SHA-256 is used.

If you do not enter the string as part of the command, the command prompts for the string to be hashed. If you enter the string as part of the command, any special characters, for example quotation marks, must be escaped.

The -j option indicates that the string to be hashed is a JSON object, and validates the object. If the object is malformed JSON and you use the -j option, the command throws an error. It is easier to input JSON objects in interactive mode. If you input the JSON object on the command-line, the object must be surrounded by quotes and any special characters, including curly braces, must be escaped. The rules for escaping these characters are fairly complex. For more information, see section 4.8.2 of the OSGi draft specification.

For example:

$ ./cli.sh secureHash --algorithm SHA-1 '\{\"password\":\"myPassw0rd\"\}'
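Writing the escaped form by hand is error-prone, so a small helper can produce it from ordinary JSON text. The following sketch escapes only double quotes and curly braces, which are the characters escaped in the example above. It is an assumption that this is sufficient for simple objects: the function name is hypothetical, and the full escaping rules referenced above are not implemented.

```python
def escape_for_cli(json_text):
    """Backslash-escape double quotes and curly braces in a JSON string."""
    escaped = []
    for char in json_text:
        if char in '"{}':
            escaped.append("\\" + char)
        else:
            escaped.append(char)
    return "".join(escaped)


# Wrap the result in single quotes when you paste it into a UNIX shell.
print(escape_for_cli('{"password":"myPassw0rd"}'))
```

For values that contain backslashes, single quotes, or other shell-sensitive characters, interactive input remains the safer route.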

The following example hashes a password value (mypassword) using the SHA-1 algorithm:

$ ./cli.sh secureHash --algorithm SHA-1 mypassword

Executing ./cli.sh...

Starting shell in /path/to/openidm

Using boot properties at /path/to/openidm/conf/boot/boot.properties

-----BEGIN HASHED VALUE-----

{

"$crypto" : {

"value" : {

"algorithm" : "SHA-1",

"data" : "T9yf3dL7oepWvUPbC8kb4hEmKJ7g5Zd43ndORYQox3GiWAGU"

},

"type" : "salted-hash"

}

}

------END HASHED VALUE------

The following example prompts for a JSON object to be hashed:

$ ./cli.sh secureHash --algorithm SHA-1 -j

Executing ./cli.sh...

Starting shell in /path/to/openidm

Using boot properties at /path/to/openidm/conf/boot/boot.properties

Enter the Json value

> Press ctrl-D to finish input

Start data input:

{"password":"myPassw0rd"}

^D

-----BEGIN HASHED VALUE-----

{

"$crypto" : {

"value" : {

"algorithm" : "SHA-1",

"data" : "PBsmFJZEVNHuYPZJwaF5oX0LtamUA2tikFCiQEfgIsqa/VHK"

},

"type" : "salted-hash"

}

}

------END HASHED VALUE------

The keytool subcommand exports or imports secret key values.

The Java keytool command enables you to export and import public keys and certificates, but not secret or symmetric keys. The OpenIDM keytool subcommand provides this functionality.

Usage is as follows:

$ ./cli.sh keytool [--export, --import] alias

For example, to export the default OpenIDM symmetric key, run the following command:

$ ./cli.sh keytool --export openidm-sym-default

Using boot properties at /openidm/conf/boot/boot.properties

Use KeyStore from: /openidm/security/keystore.jceks

Please enter the password:

[OK] Secret key entry with algorithm AES

AES:606d80ae316be58e94439f91ad8ce1c0

The default keystore password is changeit. For security reasons, you must change this password in a production environment. For information about changing the keystore password, see "Change the Default Keystore Password".

To import a new secret key named my-new-key, run the following command:

$ ./cli.sh keytool --import my-new-key

Using boot properties at /openidm/conf/boot/boot.properties

Use KeyStore from: /openidm/security/keystore.jceks

Please enter the password:

Enter the key:

AES:606d80ae316be58e94439f91ad8ce1c0
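The key value in these examples appears as an algorithm name, a colon, and a hexadecimal string. The following sketch splits such a value and reports the key length. The function name is hypothetical, and the ALGORITHM:hex layout is inferred from the sample output rather than from a format specification.

```python
def parse_exported_key(line):
    """Split 'ALGORITHM:hexkey' into the algorithm name and the raw key bytes."""
    algorithm, separator, hex_key = line.strip().partition(":")
    if not separator or not algorithm or not hex_key:
        raise ValueError("expected ALGORITHM:hexkey")
    # bytes.fromhex raises ValueError if the key is not valid hexadecimal.
    return algorithm, bytes.fromhex(hex_key)


algorithm, key = parse_exported_key("AES:606d80ae316be58e94439f91ad8ce1c0")
print(algorithm, len(key) * 8)  # prints: AES 128
```

A check like this can catch a truncated copy of the key before you attempt an import.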

If a secret key of that name already exists, OpenIDM returns the following error:

"KeyStore contains a key with this alias"

The validate subcommand validates all .json configuration files in your project's conf/ directory.

Usage is as follows:

$ ./cli.sh validate

Executing ./cli.sh

Starting shell in /path/to/openidm

Using boot properties at /path/to/openidm/conf/boot/boot.properties

...................................................................

[Validating] Load JSON configuration files from:

[Validating] /path/to/openidm/conf

[Validating] audit.json .................................. SUCCESS

[Validating] authentication.json ......................... SUCCESS

...

[Validating] sync.json ................................... SUCCESS

[Validating] ui-configuration.json ....................... SUCCESS

[Validating] ui-countries.json ........................... SUCCESS

[Validating] workflow.json ............................... SUCCESS
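If you want a quick syntax check outside the CLI, for example in a build pipeline, a few lines of Python can confirm that each file parses as JSON. This sketch is not equivalent to the validate subcommand, which may apply additional checks. The function name is hypothetical, and the check covers strict JSON syntax only.

```python
import json
from pathlib import Path


def check_json_files(conf_dir):
    """Return {file name: None or error message} for every .json file in conf_dir."""
    results = {}
    for path in sorted(Path(conf_dir).glob("*.json")):
        try:
            json.loads(path.read_text(encoding="utf-8"))
            results[path.name] = None
        except json.JSONDecodeError as error:
            results[path.name] = f"line {error.lineno}, column {error.colno}: {error.msg}"
    return results


if __name__ == "__main__":
    # "conf" is an assumed relative path to the project's configuration directory.
    for name, error in check_json_files("conf").items():
        status = "SUCCESS" if error is None else f"FAILED ({error})"
        print(f"{name}: {status}")
```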

The update subcommand supports updates of OpenIDM for patches and migrations. For an example of this process, see "Updating Servers" in the Installation Guide.

OpenIDM includes a customizable, browser-based user interface. The functionality is subdivided into Administrative and Self-Service User Interfaces.

If you are administering OpenIDM, navigate to the Administrative User

Interface, also known as the Admin UI. If OpenIDM is installed on the

local system, you can get to the Admin UI at the following URL:

https://localhost:8443/admin. In the Admin UI, you

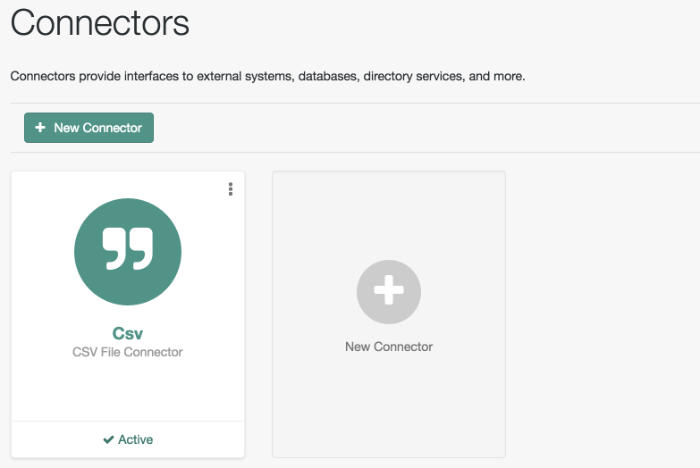

can configure connectors, customize managed objects, set up attribute

mappings, manage accounts, and more.

The Self-Service User Interface, also known as the Self-Service UI, provides role-based access to tasks based on BPMN2 workflows, and allows users to manage certain aspects of their own accounts, including configurable self-service registration. When OpenIDM starts, you can access the Self-Service UI at https://localhost:8443/.

Warning

The default password for the administrative user, openidm-admin, is openidm-admin. To protect your deployment in production, change this password.

All users, including openidm-admin, can change their password through the Self-Service UI. After you have logged in, click Change Password.

You can set up a basic configuration with the Administrative User Interface (Admin UI).

Through the Admin UI, you can connect to resources, configure attribute mapping and scheduled reconciliation, and set up and manage objects, such as users, groups, and devices.

You can configure OpenIDM through Quick Start cards, and from the Configure and Manage drop-down menus. Try them out, and see what happens when you select each option.

In the following sections, you will examine the default Admin UI dashboard, and learn how to set up custom Admin UI dashboards.

Caution

If your browser uses an AdBlock extension, it might inadvertently block some UI functionality, particularly if your configuration includes strings such as ad. For example, a connection to an Active Directory server might be configured at the endpoint system/ad. To avoid problems related to blocked UI functionality, either remove the AdBlock extension, or set up a suitable whitelist to ensure that none of the targeted endpoints are blocked.

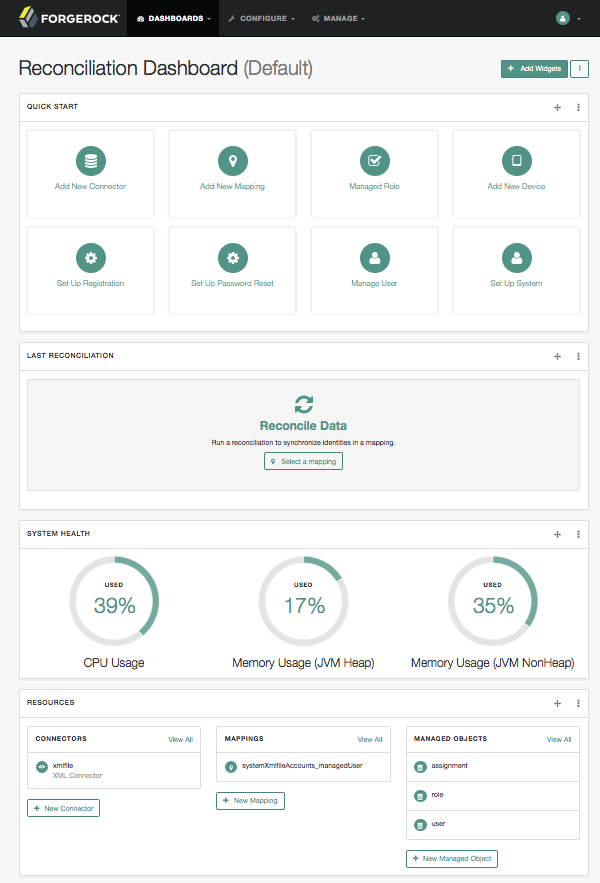

When you log into the Admin UI, the first screen you should see is the "Reconciliation Dashboard".

The Admin UI includes a fixed top menu bar. As you navigate around the Admin UI, you should see the same menu bar throughout. You can click Dashboards > Reconciliation Dashboard to return to that screen.

The default dashboard is split into four sections, based on widgets.

Quick Start cards support one-click access to common administrative tasks, and are described in detail in the following section.

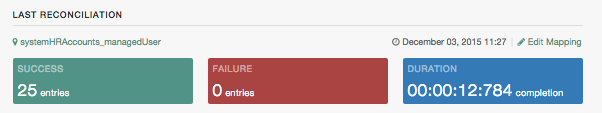

Last Reconciliation includes data from the most recent reconciliation between data stores. After you run a reconciliation, you should see data similar to:

System Health includes data on current CPU and memory usage.

Resources include an abbreviated list of configured connectors, mappings, and managed objects.

The Quick Start cards allow quick access to the labeled

configuration options, described here:

Add ConnectorUse the Admin UI to connect to external resources. For more information, see "Adding New Connectors from the Admin UI".

Create MappingConfigure synchronization mappings to map objects between resources. For more information, see "Mapping Source Objects to Target Objects".

Manage RoleSet up managed provisioning or authorization roles. For more information, see "Working With Managed Roles".

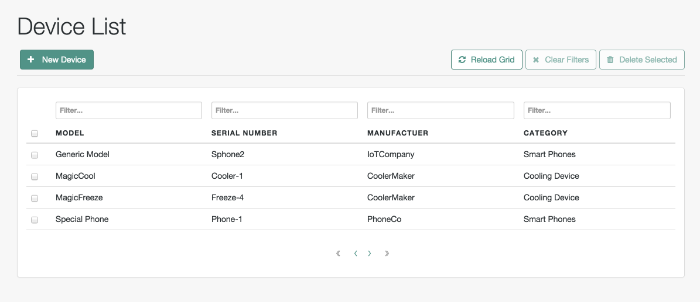

Add DeviceUse the Admin UI to set up managed objects, including users, groups, roles, or even Internet of Things (IoT) devices. For more information, see "Managing Accounts".

Set Up RegistrationConfigure User Self-Registration. You can set up the Self-Service UI login screen, with a link that allows new users to start a verified account registration process. For more information, see "Configuring User Self-Service".

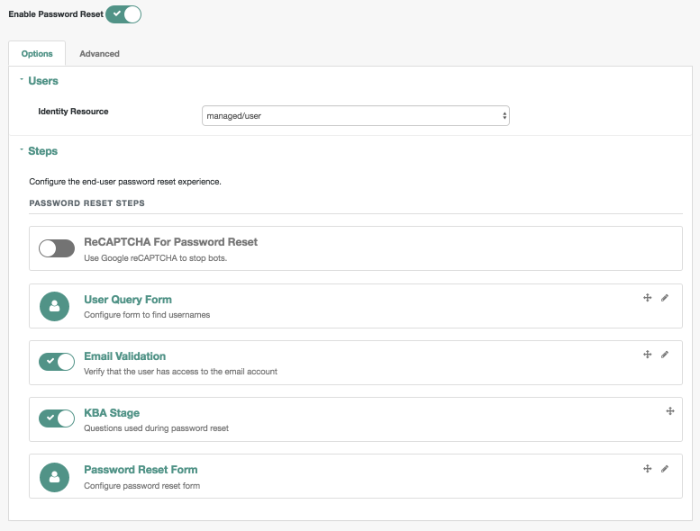

Set Up Password ResetConfigure user self-service Password Reset, allowing end-users to reset forgotten passwords. For more information, see "Configuring User Self-Service".

Manage UserAllows management of users in the repository. You may have to run a reconciliation from an external repository first. For more information, see "Working with Managed Users".

Set Up SystemConfigure the following server elements:

Authentication, as described in "Supported Authentication and Session Modules".

Audit, as described in "Logging Audit Information".

Self-Service UI, as described in "Changing the UI Path".

Email, as described in "Configuring Outbound Email".

Updates, as described in "Updating Servers" in the Installation Guide.

To create a new dashboard, click Dashboards > New Dashboard. You're prompted for a dashboard name, and whether to set it as the default. You can then add widgets.

Alternatively, you can start with an existing dashboard. In the upper-right corner of the UI, next to the Add Widgets button, click the vertical ellipsis. In the menu that appears, you can take the following actions on the current dashboard:

Rename

Duplicate

Set as Default

Delete

To add a widget to a dashboard, click Add Widgets and add the widget of your choice in the window that appears.

To modify the position of a widget in a dashboard, click and drag the move icon for the widget. You can find this four-arrow icon in the upper right corner of the widget window, next to the vertical ellipsis.

If you add a new Quick Start widget, select the vertical ellipsis in the upper right corner of the widget, and click Settings. You can configure an Admin UI sub-widget to embed in the Quick Start widget in the pop-up menu that appears.

Click Add a Link. You can then enter a name, a destination URL, and an icon for the widget.

If you are linking to a specific page in the OpenIDM Admin UI, the destination URL can be the part of the address after the main page for the Admin UI, such as https://localhost:8443/admin.

For example, if you want to create a quick start link to the Audit configuration tab, at https://localhost:8443/admin/#settings/audit/, you could enter #settings/audit in the destination URL text box.
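The relationship between a full Admin UI address and the shortened destination can be expressed in a few lines. The following sketch is illustrative only. The function name is hypothetical, and the default base address is the local Admin UI URL used in this chapter.

```python
def quick_start_href(full_url, admin_base="https://localhost:8443/admin"):
    """Return the part of an Admin UI address that follows the main Admin UI page."""
    if not full_url.startswith(admin_base):
        raise ValueError("URL is not under the Admin UI base address")
    remainder = full_url[len(admin_base):]
    # Drop the slash between the base and the fragment, and any trailing slash.
    return remainder.lstrip("/").rstrip("/")


print(quick_start_href("https://localhost:8443/admin/#settings/audit/"))
```

For the address in the example above, the function returns #settings/audit.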

OpenIDM writes the changes you make to the ui-dashboard.json file for your project.

For example, if you add a Last Reconciliation and Embed Web Page widget to a new dashboard named Test, you'll see the following excerpt in your ui-dashboard.json file:

{

"name" : "Test",

"isDefault" : false,

"widgets" : [

{

"type" : "frame",

"size" : "large",

"frameUrl" : "http://example.com",

"height" : "100px",

"title" : "Example.com"

},

{

"type" : "lastRecon",

"size" : "large",

"barchart" : "true"

},

{

"type" : "quickStart",

"size" : "large",

"cards" : [

{

"name" : "Audit",

"icon" : "fa-align-justify",

"href" : "#settings/audit"

}

]

}

]

}

For more information on each property, see the following table:

| Property | Options | Description |
|---|---|---|
| name | User entry | Dashboard name |
| isDefault | true or false | Default dashboard; can set one default |
| widgets | Different options for type | Code blocks that define a widget |
| type | lifeCycleMemoryHeap, lifeCycleMemoryNonHeap, systemHealthFull, cpuUsage, lastRecon, resourceList, quickStart, frame, userRelationship | Widget name |
| size | x-small, small, medium, or large | Width of widget, based on a 12-column grid system, where x-small=4, small=6, medium=8, and large=12; for more information, see Bootstrap CSS |
| height | Height, in units such as cm, mm, px, and in | Height; applies only to Embed Web Page widget |
| frameUrl | URL | Web page to embed; applies only to Embed Web Page widget |
| title | User entry | Label shown in the UI; applies only to Embed Web Page widget |
| barchart | true or false | Reconciliation bar chart; applies only to Last Reconciliation widget |
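If you edit ui-dashboard.json by hand, a quick check against the values in the table can catch typing mistakes before you reload the UI. The following sketch validates a single dashboard definition. The function name is hypothetical, and the checks cover only the name, isDefault, type, and size properties listed in the table.

```python
import json

WIDGET_TYPES = {
    "lifeCycleMemoryHeap", "lifeCycleMemoryNonHeap", "systemHealthFull",
    "cpuUsage", "lastRecon", "resourceList", "quickStart", "frame",
    "userRelationship",
}
# Width of each size on the 12-column grid described in the table.
SIZE_COLUMNS = {"x-small": 4, "small": 6, "medium": 8, "large": 12}


def check_dashboard(dashboard):
    """Return a list of problems found in one dashboard definition."""
    problems = []
    if not dashboard.get("name"):
        problems.append("missing dashboard name")
    if not isinstance(dashboard.get("isDefault"), bool):
        problems.append("isDefault must be true or false")
    for index, widget in enumerate(dashboard.get("widgets", [])):
        if widget.get("type") not in WIDGET_TYPES:
            problems.append(f"widget {index}: unknown type {widget.get('type')!r}")
        if widget.get("size") not in SIZE_COLUMNS:
            problems.append(f"widget {index}: unknown size {widget.get('size')!r}")
    return problems


dashboard = json.loads("""
{
  "name": "Test",
  "isDefault": false,
  "widgets": [
    {"type": "lastRecon", "size": "large", "barchart": "true"},
    {"type": "frame", "size": "huge", "frameUrl": "http://example.com"}
  ]
}
""")
print(check_dashboard(dashboard))
```

The second widget in the sample uses a deliberately invalid size so that the check reports one problem.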

When complete, you can select the name of the new dashboard under the Dashboards menu.

You can modify the options for each dashboard and widget. Select the vertical ellipsis in the upper right corner of the object, and make desired choices from the pop-up menu that appears.

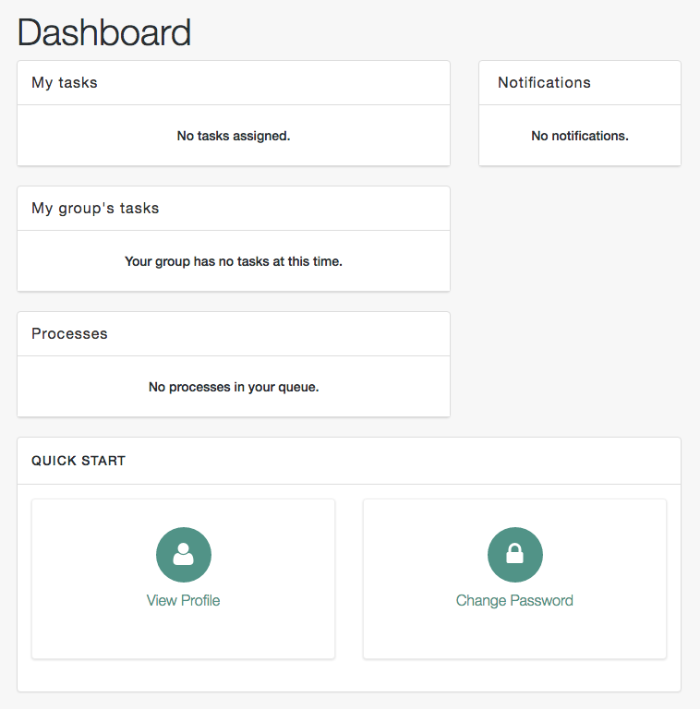

For all users, the Self-Service UI includes Dashboard and Profile links in the top menu bar.

To access the Self-Service UI, start OpenIDM, then navigate to

https://localhost:8443/. If you have not installed a

certificate that is trusted by a certificate authority, you are prompted

with an Untrusted Connection warning the first time you log in to the UI.

The Dashboard includes a list of tasks assigned to the user who has logged in, tasks assigned to the relevant group, processes available to be invoked, and current notifications for that user, along with Quick Start cards for that user's profile and password.

For examples of these tasks, processes, and notifications, see "Workflow Samples" in the Samples Guide.

Every user who logs into the Self-Service UI has a profile, with Basic Info

and Password Tabs. Users other than openidm-admin may

see additional information, including Preferences, Social Identities, and

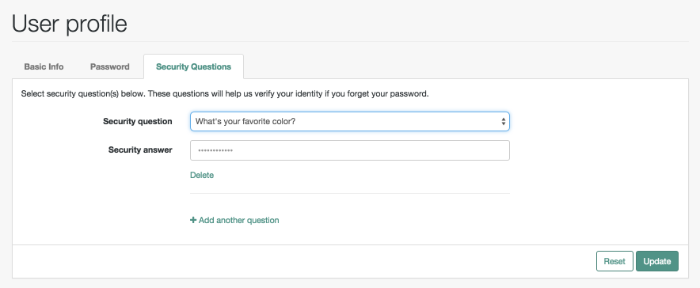

Security Questions tabs.

You'll see the following information under each tab:

- Basic Info

Specifies basic account information, including username, first name, last name, and email address.

- Password

Supports password changes; for more information on password policy criteria, see "Enforcing Password Policy".

- Preferences

Allows selection of preferences, as defined in the managed.json file, and the Managed Object User property Preferences tab. The default preferences relate to updates and special offers.

- Social Identities

Lists social ID providers that have been enabled in the Admin UI. If you have registered with one provider, you can enable logins to this account with additional social ID providers. For more information on configuring and linking each provider, see "Configuring Social ID Providers".

- Security Questions

Shown if KBA is enabled. Includes security questions and answers for this account, created when a new user goes through the registration process. For more information on KBA, see "Configuring Self-Service Questions".

You may want to customize information included in the Self-Service UI. To do so, copy existing default template files in the openidm/ui/selfservice/default subdirectory to associated extension/ subdirectories.

These procedures do not address actual data store requirements. If you add text boxes in the UI, it is your responsibility to set up associated properties in your repositories.

To simplify the process, you can copy some or all of the content from the openidm/ui/selfservice/default/templates directory to the openidm/ui/selfservice/extension/templates directory.

You can use a similar process to modify what is shown in the Admin UI.
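The copy step for the Self-Service UI templates might look like the following sketch. The copy_ui_templates function name and the /path/to/openidm install location are placeholders chosen for illustration, not part of OpenIDM; adjust the path to match your deployment.

```shell
# Copy the default Self-Service UI templates into the extension directory,
# where you can then customize them.
# Usage: copy_ui_templates /path/to/openidm   (hypothetical helper name)
copy_ui_templates() {
    ui_dir="$1/ui/selfservice"

    # Stop early if the default templates are not where we expect them.
    if [ ! -d "$ui_dir/default/templates" ]; then
        echo "No default templates found under $ui_dir" >&2
        return 1
    fi

    # Create the extension directory if needed, then copy the contents of
    # default/templates into it. Note that this overwrites files of the
    # same name that already exist under extension/templates.
    mkdir -p "$ui_dir/extension/templates"
    cp -R "$ui_dir/default/templates/." "$ui_dir/extension/templates/"
}
```

After sourcing the function, calling copy_ui_templates /path/to/openidm copies the whole template tree. If you only plan to change one or two files, copying just those files keeps the extension directory easier to review.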

In the following procedure, you will customize the screen that users see during the User Registration process. You can use a similar process to customize what a user sees during the Password Reset and Forgotten Username processes.

For user Self-Service features, you can customize options in three files. Navigate to the extension/templates/user/process subdirectory, and examine the following files:

- User Registration: registration/userDetails-initial.html

- Password Reset: reset/userQuery-initial.html

- Forgotten Username: username/userQuery-initial.html

The following procedure demonstrates the process for User Registration.

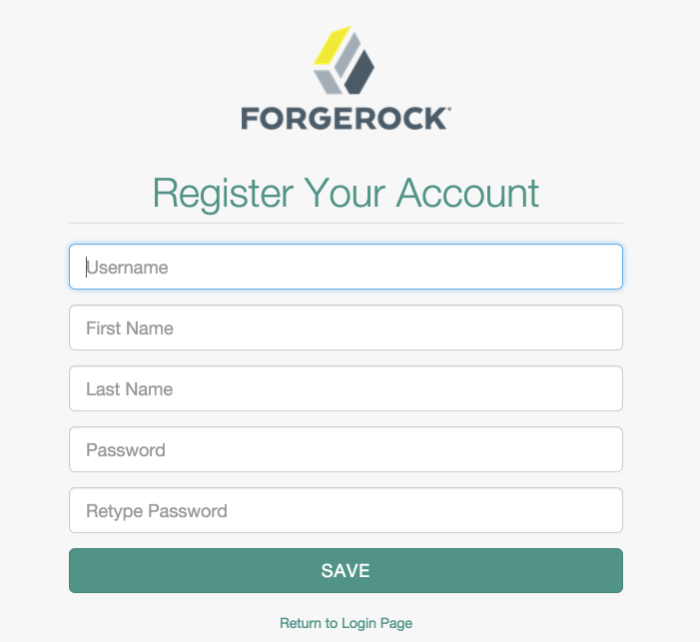

When you configure user self-service, as described in "Configuring User Self-Service", anonymous users who choose to register will see a screen similar to:

The screen you see is from the following file:

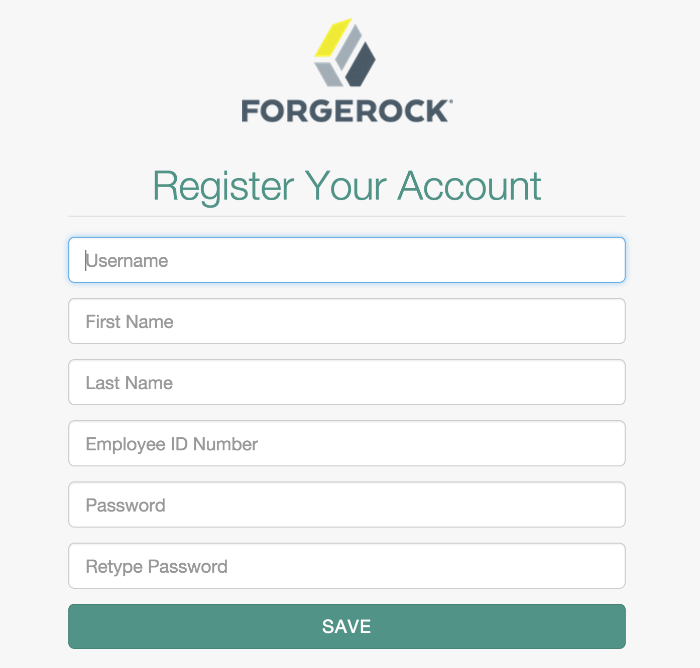

userDetails-initial.html, in theselfservice/extension/templates/user/process/registrationsubdirectory. Open that file in a text editor.Assume that you want new users to enter an employee ID number when they register.

Create a new form-group stanza for that number. For this procedure, the stanza appears after the stanza for Last Name (or surname) sn:

    <div class="form-group">
        <label class="sr-only" for="input-employeeNum">{{t 'common.user.employeeNum'}}</label>
        <input type="text" placeholder="{{t 'common.user.employeeNum'}}" id="input-employeeNum" name="user.employeeNum" class="form-control input-lg" />
    </div>

Edit the relevant translation.json file. As this is the customized file for the Self-Service UI, you will find it in the selfservice/extension/locales/en directory that you set up in "Customizing the UI".

You need to find the right place to enter text associated with the employeeNum property. Look for the other properties in the userDetails-initial.html file.

The following excerpt illustrates the employeeNum property as added to the translation.json file.

    ...
    "givenName" : "First Name",
    "sn" : "Last Name",
    "employeeNum" : "Employee ID Number",
    ...

The next time an anonymous user tries to create an account, that user should see a screen similar to:

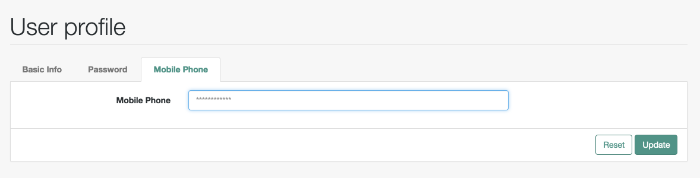

In the following procedure, you will customize what users can modify when they navigate to their User Profile page. Use this procedure if you want to allow users to modify additional data on their profiles.

Log in to the Self-Service UI. Click the Profile tab. You should see at least the following tabs: Basic Info and Password. In this procedure, you will add a Mobile Phone tab.

OpenIDM generates the user profile page from the following file: UserProfileTemplate.html. Assuming you set up custom extension subdirectories, as described in "Customizing a UI Template", you should find a copy of this file in the following directory: selfservice/extension/templates/user.

Examine the first few lines of that file. Note how the tablist includes the tabs in the Self-Service UI user profile: Basic Info and Password, associated with the common.user.basicInfo and common.user.password properties.

The following excerpt includes a third tab, with the mobilePhone property:

    <div class="container">
        <div class="page-header">
            <h1>{{t "common.user.userProfile"}}</h1>
        </div>
        <div class="tab-menu">
            <ul class="nav nav-tabs" role="tablist">
                <li class="active"><a href="#userDetailsTab" role="tab" data-toggle="tab">
                    {{t "common.user.basicInfo"}}</a></li>
                <li><a href="#userPasswordTab" role="tab" data-toggle="tab">
                    {{t "common.user.password"}}</a></li>
                <li><a href="#userMobilePhoneNumberTab" role="tab" data-toggle="tab">
                    {{t "common.user.mobilePhone"}}</a></li>
            </ul>
        </div>
    ...

Next, you should provide information for the tab. Based on the comments in the file, and the entries in the Password tab, the following code sets up a Mobile Phone number entry:

    <div role="tabpanel" class="tab-pane panel panel-default fr-panel-tab" id="userMobilePhoneNumberTab">
        <form class="form-horizontal" id="password">
            <div class="panel-body">
                <div class="form-group">
                    <label class="col-sm-3 control-label" for="input-mobilePhone">
                        {{t "common.user.mobilePhone"}}</label>
                    <div class="col-sm-6">
                        <input class="form-control" type="telephoneNumber" id="input-mobilePhone" name="mobilePhone" value="" />
                    </div>
                </div>
            </div>
            <div class="panel-footer clearfix">
                {{> form/_basicSaveReset}}
            </div>
        </form>
    </div>
    ...

Note

For illustration, this procedure uses the HTML tags found in the UserProfileTemplate.html file. You can use any standard HTML content within tab-pane tags, as long as they include a standard form tag and standard input fields. OpenIDM picks up this information when the tab is saved, and uses it to PATCH user content.

Review the managed.json file. Make sure the telephoneNumber property is viewable and userEditable, as shown in the following excerpt:

    "telephoneNumber" : {
        "type" : "string",
        "title" : "Mobile Phone",
        "viewable" : true,
        "userEditable" : true,
        "pattern" : "^\\+?([0-9\\- \\(\\)])*$"
    },

Open the applicable translation.json file. You should find a copy of this file in the following subdirectory: selfservice/extension/locales/en/.

Search for the line with basicInfo, and add an entry for mobilePhone:

    "basicInfo": "Basic Info",
    "mobilePhone": "Mobile Phone",

Review the result. Log in to the Self-Service UI, and click Profile. Note the entry for the Mobile Phone tab.

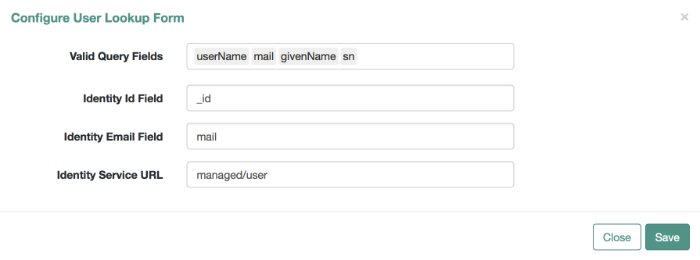

For Password Reset and Forgotten Username functionality, you may choose to modify Valid Query Fields, such as those described in "Configuring User Self-Service".

For example, if you click Configure > Password Reset > User Query Form, you can make changes to Valid Query Fields.

If you add, delete, or modify any Valid Query Fields, you will have to change the corresponding userQuery-initial.html file.

Assuming you set up custom extension subdirectories, as described in "Customizing a UI Template", you can find this file in the following directory: selfservice/extension/templates/user/process.

If you change any Valid Query Fields, you should make corresponding changes:

- For Forgotten Username functionality, you would modify the username/userQuery-initial.html file.

- For Password Reset functionality, you would modify the reset/userQuery-initial.html file.

For a model of how you can change the userQuery-initial.html file, see "Customizing the User Registration Page".

Only administrative users (with the role openidm-admin)

can add, modify, and delete accounts from the Admin UI. Regular users

can modify certain aspects of their own accounts from the Self-Service UI.

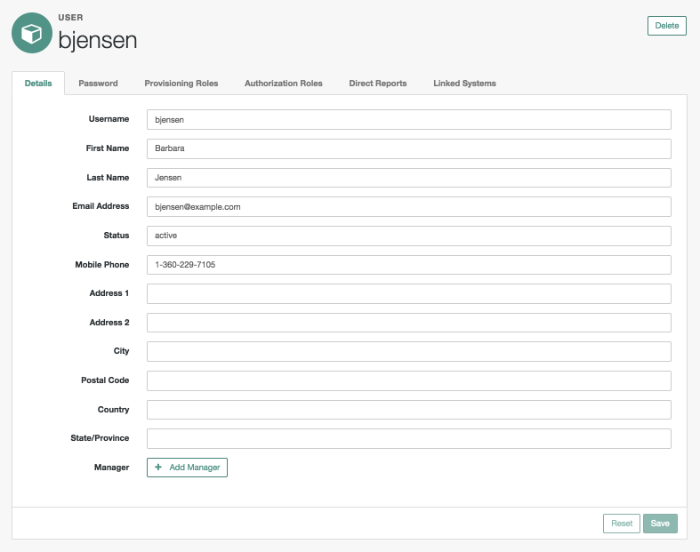

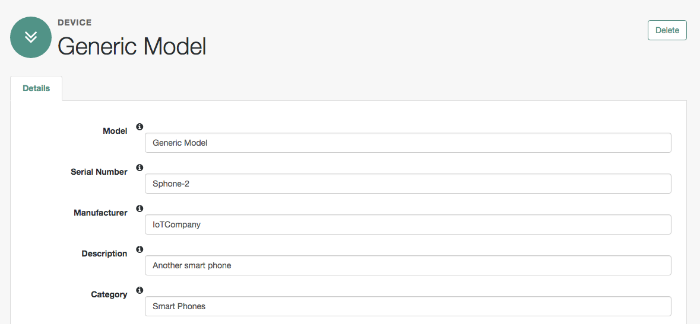

In the Admin UI, you can manage most details associated with an account, as shown in the following screenshot.

You can configure different functionality for an account under each tab:

- Details

The Details tab includes basic identifying data for each user, with two special entries:

- Status

By default, accounts are shown as active. To suspend an account, such as for a user who has taken a leave of absence, set that user's status to inactive.

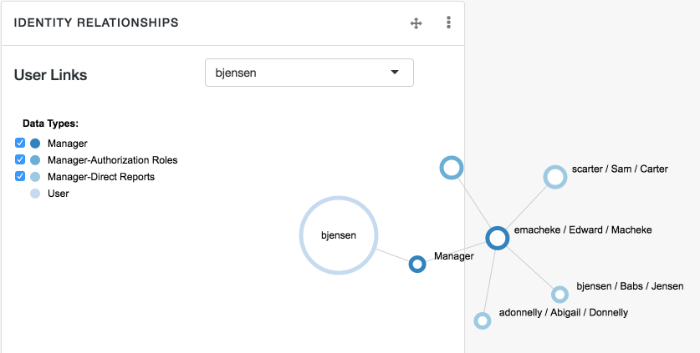

- Manager

You can assign a manager from the existing list of managed users.

- Password

As an administrator, you can create new passwords for users in the managed user repository.

- Provisioning Roles

Used to specify how objects are provisioned to an external system. For more information, see "Working With Managed Roles".

- Authorization Roles

Used to specify the authorization rights of a managed user within OpenIDM. For more information, see "Working With Managed Roles".

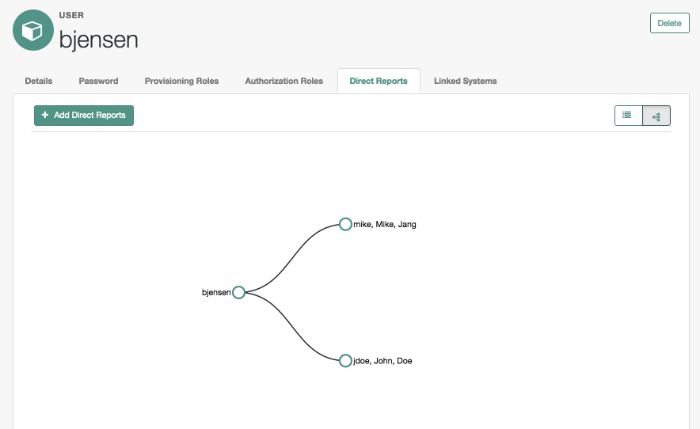

- Direct Reports

Users who are listed as managers of others have entries under the Direct Reports tab, as shown in the following illustration:

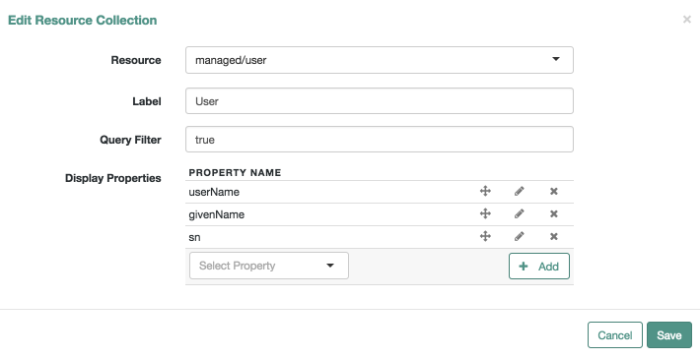

- Linked Systems

Used to display account information reconciled from external systems.

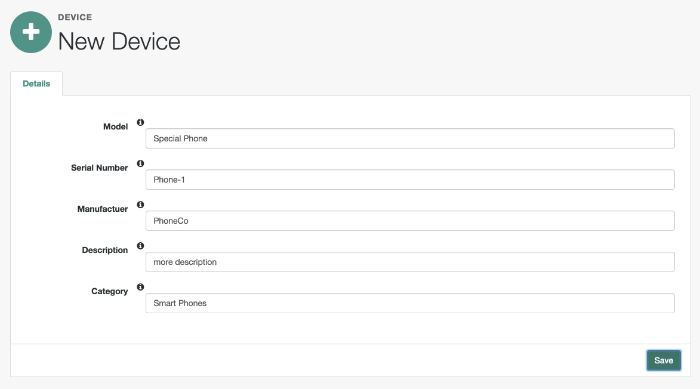
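The Status setting on the Details tab can also be changed outside the UI. As a rough sketch, the following shell functions send a PATCH request to the OpenIDM REST interface to replace a managed user's accountStatus property. The function names, the plain HTTP address http://localhost:8080/openidm, the default openidm-admin credentials, and the user ID you pass in are assumptions for illustration; check them against your own deployment before use.

```shell
# Base URL of the OpenIDM REST interface (assumed default; override as needed).
OPENIDM_URL="${OPENIDM_URL:-http://localhost:8080/openidm}"

# Print a JSON patch document that replaces accountStatus with the given value.
# Usage: status_patch inactive
status_patch() {
    printf '[{"operation":"replace","field":"/accountStatus","value":"%s"}]' "$1"
}

# Send the patch for one managed user. Hypothetical helper; the credentials
# below are the default administrative account and should be changed.
# Usage: set_account_status USER_ID active|inactive
set_account_status() {
    curl \
        --header "X-OpenIDM-Username: openidm-admin" \
        --header "X-OpenIDM-Password: openidm-admin" \
        --header "Content-Type: application/json" \
        --request PATCH \
        --data "$(status_patch "$2")" \
        "$OPENIDM_URL/managed/user/$1"
}
```

Keeping the payload in its own function makes it easy to inspect what would be sent (for example, by running status_patch inactive) before issuing the request against a live server.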

With the following procedures, you can add, update, and deactivate accounts for managed objects such as users.

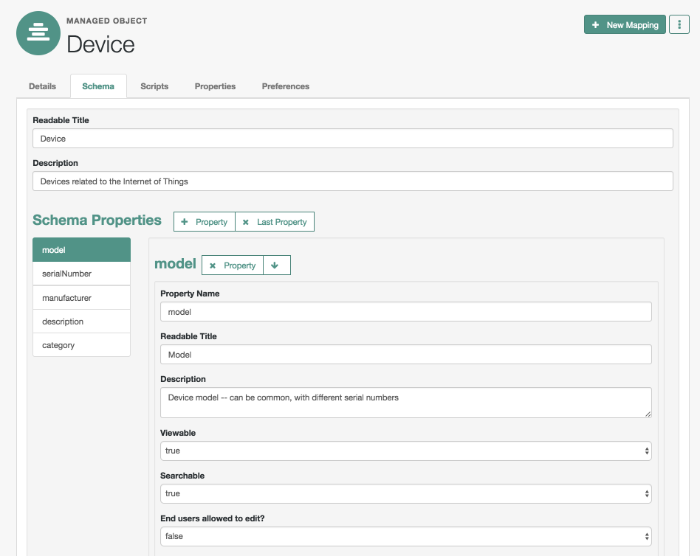

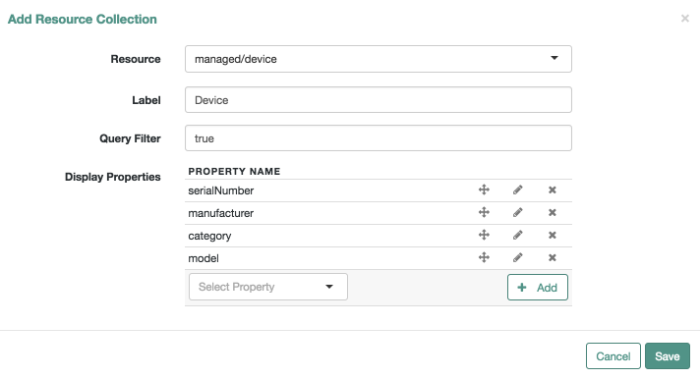

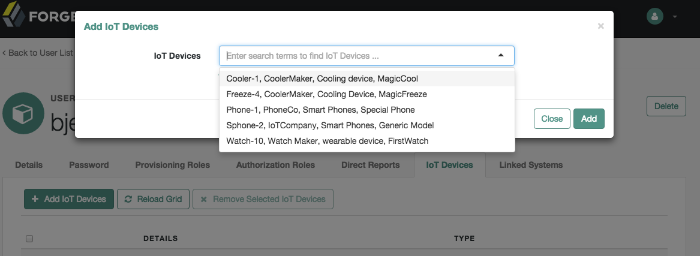

The managed object does not have to be a user. It can be a role, a group, or even a physical item such as an IoT device. The basic process for adding, modifying, deactivating, and deleting other objects is the same as it is with accounts. However, the details may vary; for example, many IoT devices do not have telephone numbers.

Log in to the Admin UI at https://localhost:8443/admin.

Click Manage > User.

Click New User.

Complete the fields on the New User page.

Most of these fields are self-explanatory. Be aware that the user interface is subject to policy validation, as described in "Using Policies to Validate Data". So, for example, the email address must be a valid email address, and the password must comply with the password validation settings that appear if you enter an invalid password.

In a similar way, you can create accounts for other managed objects.

You can review new managed object settings in the managed.json file of your project-dir/conf directory.
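For comparison, an account like the one entered on the New User page could also be created over REST. The sketch below assumes the REST interface is reachable at http://localhost:8080/openidm with the default openidm-admin credentials; the function names and any sample values you pass in are made up for illustration. Policy validation still applies, so expect the request to be rejected if, for example, the password does not comply with policy.

```shell
# Print a minimal JSON document for a new managed user.
# Usage: new_user_json USERNAME GIVEN_NAME SURNAME MAIL PASSWORD
# Values are inserted as-is, so they must not contain quotes or backslashes.
new_user_json() {
    printf '{"userName":"%s","givenName":"%s","sn":"%s","mail":"%s","password":"%s"}' \
        "$1" "$2" "$3" "$4" "$5"
}

# Create the user through the managed/user endpoint (hypothetical helper).
# Takes the same five arguments as new_user_json.
create_user() {
    curl \
        --header "X-OpenIDM-Username: openidm-admin" \
        --header "X-OpenIDM-Password: openidm-admin" \
        --header "Content-Type: application/json" \
        --request POST \
        --data "$(new_user_json "$@")" \
        "${OPENIDM_URL:-http://localhost:8080/openidm}/managed/user?_action=create"
}
```

The JSON is assembled with printf only to keep the sketch dependency-free; for values that may contain special characters, a proper JSON tool is the safer choice.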

In the following procedures, you learn how to manage existing user accounts, including how to update and delete them.

Log in to the Admin UI at https://localhost:8443/admin as an administrative user.

Click Manage > User.

Click the Username of the user that you want to update.

On the profile page for the user, modify the fields you want to change and click Update.

The user account is updated in the OpenIDM repository.

Log in to the Admin UI at https://localhost:8443/admin as an administrative user.

Click Manage > User.

Select the checkbox next to the desired Username.

Click the Delete Selected button.

Click OK to confirm the deletion.

The user is deleted from the internal repository.
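The same deletion can be sketched as a REST call. As before, the function names, the http://localhost:8080/openidm base URL, and the default credentials are assumptions for illustration rather than values taken from this guide, and the user ID is whatever identifier the managed user has in your repository.

```shell
# Print the REST resource URL for one managed user.
# Usage: managed_user_url USER_ID
managed_user_url() {
    printf '%s/managed/user/%s' "${OPENIDM_URL:-http://localhost:8080/openidm}" "$1"
}

# Delete one managed user (hypothetical helper). This cannot be undone
# from the UI, so confirm the ID before running it.
# Usage: delete_user USER_ID
delete_user() {
    curl \
        --header "X-OpenIDM-Username: openidm-admin" \
        --header "X-OpenIDM-Password: openidm-admin" \
        --request DELETE \
        "$(managed_user_url "$1")"
}
```

Running managed_user_url with the intended ID first is a cheap way to confirm which resource the DELETE request will target.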