Run the Analytics Pipeline

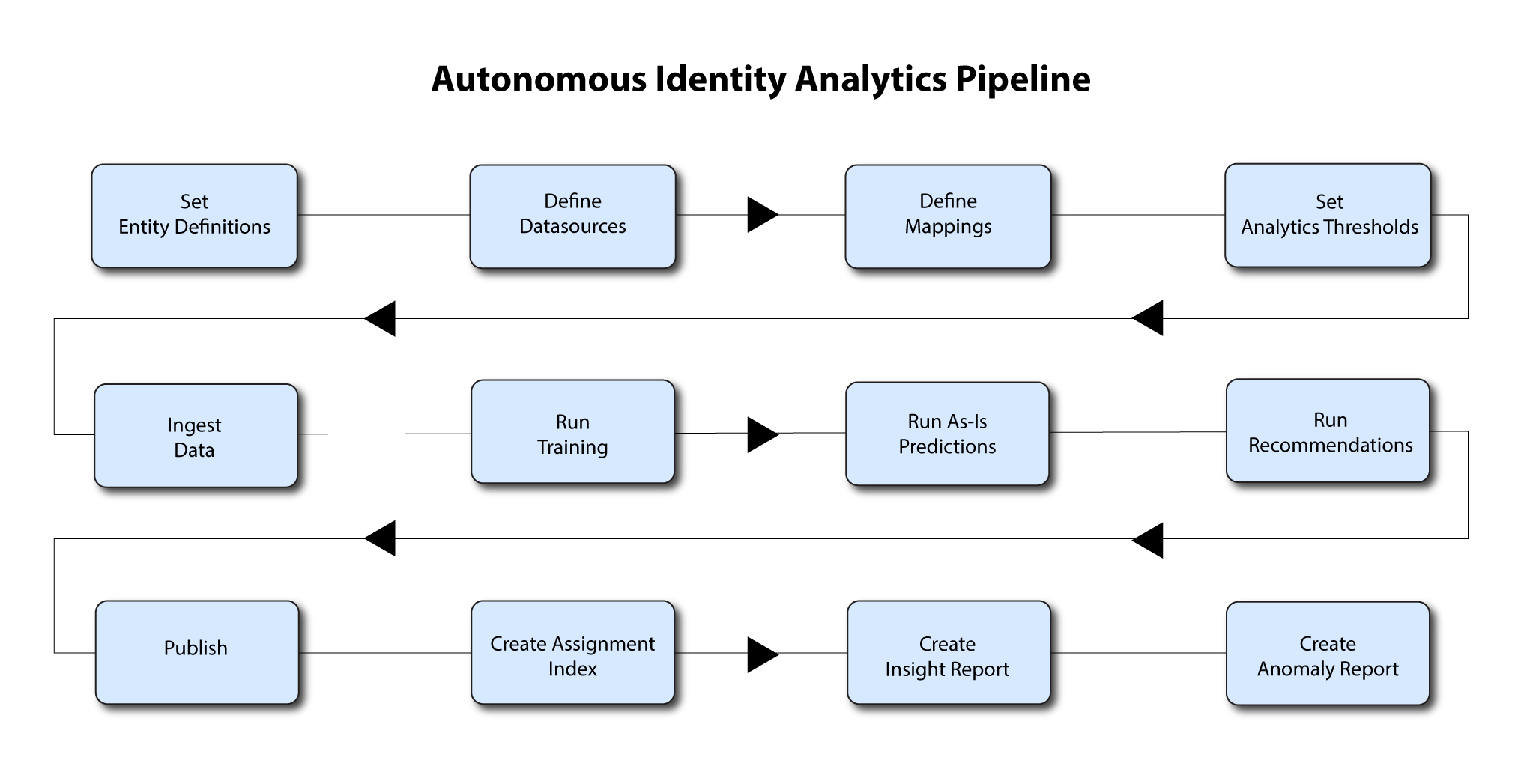

The analytics pipeline is the heart of Autonomous Identity. It analyzes your data and determines the association rules, confidence scores, predictions, and recommendations for assigning entitlements to users.

The analytics pipeline is an intensive processing operation that can take time depending on your dataset and configuration. To ensure an accurate analysis, the data needs to be as complete as possible with little or no null values. Once you have prepared the data, you must run a series of analytics jobs to ensure an accurate rendering of the entitlements and confidence scores.
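As a rough way to gauge completeness before ingestion, the following sketch counts empty values per column in a CSV extract. The column names and sample rows are hypothetical, and treating an empty or whitespace-only field as a null is an assumption. Adjust it to match how your export represents missing data.

```python
import csv
import io


def count_empty_values(csv_text):
    """Return {column: number of rows where the value is missing or blank}."""
    reader = csv.DictReader(io.StringIO(csv_text))
    counts = {name: 0 for name in (reader.fieldnames or [])}
    for row in reader:
        for name in counts:
            value = row.get(name)
            # Short rows yield None; blank fields yield an empty string.
            if value is None or value.strip() == "":
                counts[name] += 1
    return counts


# Hypothetical identities extract; real column names depend on your schema.
sample = "user_id,department,manager\nu1,Sales,m1\nu2,,m1\nu3,Finance,\n"
print(count_empty_values(sample))
```

Columns with a high count are candidates for cleanup before you run the analytics jobs.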

Before running the analytics, you must run the following pre-analytics steps to set up your datasets and schema using the Autonomous Identity UI:

- Add attributes to the schema. For more information, see Set Entity Definitions.

- Define your CSV data sources. You can enter more than one data source, specifying the dataset location on your target machine. For more information, see Set Data Sources.

- Define attribute mappings between your data and the schema. For more information, see Set Attribute Mappings.

- Configure your analytics threshold values. For more information, see Set Analytics Thresholds.

Once you have finished the pre-analytics steps, you can start the analytics. First, ingest the data into the database. After that, run the data through its initial training process to create the association rules for each user-assigned entitlement. This is a somewhat intensive operation as the analytics generates a million or more association rules. Once the association rules have been determined, they are applied to user-assigned entitlements.

After the training run, run predictions to determine the current confidence scores for all assigned entitlements. After predictions, run a recommendations job that looks at all users who do not have a specific entitlement but should, based on their user attribute data.

After this, publish the data to the backend Cassandra or MongoDB database, and then create the Autonomous Identity index.

The final steps are to run an insight report, which summarizes the analytics pipeline run, and an anomaly report, which flags any anomalous entitlement assignments.
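The order of the jobs described above can be sketched as a small driver. This is only an illustration: the subcommand names are the ones listed by `analytics --help` in this guide, the `audit` and `create-assignment-index-report` subcommands are left out because the walkthrough does not describe them as required steps, and the idea that the command reports failure through a non-zero exit code is an assumption, not documented behavior.

```python
import subprocess

# Job order as described in the walkthrough above.
PIPELINE_STEPS = [
    "ingest",
    "train",
    "predict-as-is",
    "predict-recommendation",
    "publish",
    "create-assignment-index",
    "insight",
    "anomaly",
]


def run_pipeline(run_step=None):
    """Run each step in order and return the list of steps that completed.

    Stops at the first step whose exit code is non-zero. Relying on the
    exit code to detect failure is an assumption about the analytics command.
    """
    if run_step is None:
        run_step = lambda step: subprocess.run(["analytics", step]).returncode
    completed = []
    for step in PIPELINE_STEPS:
        if run_step(step) != 0:
            break
        completed.append(step)
    return completed
```

Passing a custom `run_step` callable makes it possible to dry-run the sequence without invoking the real command.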

The general analytics process is outlined as follows:

The analytics pipeline requires that DNS properly resolve the hostname before it starts. Make sure to set it on your DNS server or locally in your
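A quick way to confirm the DNS requirement before starting a run is to attempt a lookup from the target machine. The sketch below uses Python's standard `socket` module. The hostname in the usage comment is a hypothetical placeholder, and the injectable `resolver` parameter exists only so that the check can be exercised without network access.

```python
import socket


def hostname_resolves(hostname, resolver=socket.gethostbyname):
    """Return True if the resolver can map the hostname to an address."""
    try:
        resolver(hostname)
    except OSError:
        # socket.gaierror (lookup failure) is a subclass of OSError.
        return False
    return True


# Example (hypothetical hostname):
# hostname_resolves("autoid.example.com")
```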

Ingest the Data Files

At this point, you should have set your data sources and configured your attribute mappings. You can now run the initial analytics job to import the data into the Cassandra or MongoDB database.

- SSH to the target machine.

- Change to the analytics directory:

      $ cd /opt/autoid/apache-livy/analytics

- View the default Spark memory settings and available analytics commands.

      $ analytics --help
      driverMemory=2g
      driverCores=3
      executorCores=6
      executorMemory=3G
      livy_url=http://<IP-Address>:8998
      Usage: analytics <command>
      Commands:
        audit
        ingest
        train
        predict-as-is
        predict-recommendation
        publish
        create-assignment-index
        create-assignment-index-report
        anomaly
        insight

- If you want to make changes to your Spark memory settings, edit the analytics.cfg file, and then save it.

- Run the data ingestion command.

      $ analytics ingest

  The analytics job displays the JSON output log during the process and refreshes every 15 seconds. If the job completes successfully, you should see the following JSON output at the end of the log:

      ...
      "+ Executing Load for Base Dataframes",
      " - identities: Success",
      " - entitlements: Success",
      " - assignments: Success",
      " - applications: Success",
      "",
      "Time to Complete: 48.7060821056366s",
      " " stderr: "
      ],
      "total": 50
      }
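The per-dataset status lines at the end of the ingest log lend themselves to a simple automated check. The following sketch assumes you have already captured the log entries as a list of strings. It relies only on the `- <name>: <status>` line shape shown in the sample output above, and any status other than `Success` is treated as a failure, which is an assumption because the guide shows only the success case.

```python
import re

# Matches lines such as " - identities: Success".
STATUS_LINE = re.compile(r"^\s*-\s*(\w+):\s*(\w+)\s*$")


def load_statuses(log_lines):
    """Return {dataset: status} for every status line found in the log."""
    statuses = {}
    for line in log_lines:
        match = STATUS_LINE.match(line)
        if match:
            statuses[match.group(1)] = match.group(2)
    return statuses


def ingest_succeeded(log_lines):
    """True only if at least one status line exists and all report Success."""
    statuses = load_statuses(log_lines)
    return bool(statuses) and all(s == "Success" for s in statuses.values())


sample_log = [
    "+ Executing Load for Base Dataframes",
    " - identities: Success",
    " - entitlements: Success",
    " - assignments: Success",
    " - applications: Success",
    "",
    "Time to Complete: 48.7060821056366s",
]
print(load_statuses(sample_log))
```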

Run Training

After you have ingested the data into Autonomous Identity, start the training run.

Training involves two steps:

- Autonomous Identity starts an initial machine learning run where it analyzes the data and produces association rules, which are relationships discovered within your large set of data. In a typical deployment, you can have several million generated rules. The training process can take time depending on the size of your data set.

- Each of these rules is mapped from the user attributes to the entitlements and assigned a confidence score.

The initial training run may take time as it goes through the analysis process. Once it completes, it saves the results directly to the database.
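To make the idea of a rule's confidence score concrete, the sketch below computes the textbook association-rule confidence: of the users whose attributes match the rule, the fraction who hold the entitlement. This guide does not state the formula that Autonomous Identity uses, so treat this as an illustration of the general concept. The attribute names, entitlement names, and data layout are all hypothetical.

```python
def rule_confidence(users, antecedent, entitlement):
    """Fraction of users matching `antecedent` who also hold `entitlement`."""
    matching = [
        u for u in users
        # dict item views are set-like, so <= tests "is a subset of".
        if antecedent.items() <= u["attributes"].items()
    ]
    if not matching:
        return 0.0
    holders = sum(1 for u in matching if entitlement in u["entitlements"])
    return holders / len(matching)


# Hypothetical sample data.
users = [
    {"attributes": {"department": "Sales", "city": "Austin"}, "entitlements": {"crm_read"}},
    {"attributes": {"department": "Sales", "city": "Boston"}, "entitlements": {"crm_read"}},
    {"attributes": {"department": "Sales", "city": "Austin"}, "entitlements": set()},
    {"attributes": {"department": "Finance", "city": "Austin"}, "entitlements": {"ledger"}},
]
print(rule_confidence(users, {"department": "Sales"}, "crm_read"))
```

In this sample, two of the three Sales users hold `crm_read`, so the rule "department is Sales, therefore crm_read" has a confidence of two thirds.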

- Run the training command.

      $ analytics train

  The analytics job displays the JSON output log during the process and refreshes every 15 seconds. If the job completes successfully, you should see the following JSON output at the end of the log:

      ...
      "+ Training Chunk Range: {'min': 3, 'max': 160, 'min_group': 3}",
      " - Train Batch: 1-1 (15000 entitlements per batch)...",
      "+ Training Chunk Range: {'min': 161, 'max': 300, 'min_group': 4}",
      " - Train Batch: 2-1 (15000 entitlements per batch)...",
      "+ Training Chunk Range: {'min': 301, 'max': 500, 'min_group': 5}",
      " - Train Batch: 3-1 (15000 entitlements per batch)...",
      "",
      "Time to Complete: 157.52734184265137s",
      " stderr: "
      ],
      "total": 52
      }
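Each job log in this guide ends with a `Time to Complete` entry. If you track run durations across jobs, a small helper can extract that value. This sketch assumes the log entries are available as a list of strings and that the line keeps the `Time to Complete: <seconds>s` shape shown above.

```python
import re

TIME_LINE = re.compile(r"Time to Complete:\s*(\d+(?:\.\d+)?)s")


def time_to_complete(log_lines):
    """Return the reported duration in seconds, or None if no such line exists."""
    # Search from the end because the entry appears near the tail of the log.
    for line in reversed(log_lines):
        match = TIME_LINE.search(line)
        if match:
            return float(match.group(1))
    return None


print(time_to_complete(["", "Time to Complete: 157.52734184265137s"]))
```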

Run As-Is Predictions

After your initial training run, the association rules are saved to disk. The next phase is to use these rules as a basis for the predictions module.

The predictions module consists of two processes:

- as-is. During the as-is prediction process, confidence scores are assigned to the entitlements that users currently have. The as-is process maps the highest confidence score to the highest freqUnion rule for each user-entitlement access. These rules are then displayed in the UI and saved directly to the database.

- Recommendations. See Run Recommendations.

- Run the as-is predictions command.

      $ analytics predict-as-is

  The analytics job displays the JSON output log during the process and refreshes every 15 seconds. If the job completes successfully, you should see the following JSON output at the end of the log:

      ...
      "- As-Is Batch: 1 (15000 entitlements per batch)...",
      "",
      "Time to Complete: 86.35014176368713s",
      " stderr: "
      ],
      "total": 47
      }
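One possible reading of the as-is selection described in this section is: among the rules that apply to a user-entitlement access, keep the rule with the highest confidence score, and break ties in favor of the higher `freqUnion` value. The sketch below illustrates only that reading. The rule layout is hypothetical, `freqUnion` is taken to be a numeric count, and the actual selection logic inside Autonomous Identity may differ.

```python
def best_rule(rules):
    """Pick the rule with the highest confidence; ties go to the higher freqUnion."""
    if not rules:
        return None
    return max(rules, key=lambda r: (r["confidence"], r["freqUnion"]))


# Hypothetical rules that all apply to one user-entitlement access.
candidate_rules = [
    {"rule": "department=Sales", "confidence": 0.92, "freqUnion": 120},
    {"rule": "department=Sales,city=Austin", "confidence": 0.92, "freqUnion": 45},
    {"rule": "city=Austin", "confidence": 0.60, "freqUnion": 300},
]
print(best_rule(candidate_rules)["rule"])
```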

Run Recommendations

During the second phase of the predictions process, the recommendations process analyzes each employee who may not have a particular entitlement and predicts the access rights that they should have according to their high confidence score justifications. These rules will then be displayed in the UI and saved directly to the database.
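The recommendation idea can be illustrated with a short sketch: for a given user, look at rules whose attributes match the user, skip entitlements the user already holds, and keep those whose confidence meets a threshold. The rule layout, attribute names, and the 0.8 threshold are hypothetical. The real threshold comes from the analytics threshold values you configure, and the product's internal logic is not documented here.

```python
def recommend(user_attributes, user_entitlements, rules, threshold=0.8):
    """Return {entitlement: confidence} for entitlements the user lacks."""
    recommendations = {}
    for rule in rules:
        entitlement = rule["entitlement"]
        if entitlement in user_entitlements:
            continue  # Already assigned, so there is nothing to recommend.
        if rule["confidence"] < threshold:
            continue  # Justification is not strong enough.
        # The rule applies only if all of its attributes match the user.
        if rule["attributes"].items() <= user_attributes.items():
            best = recommendations.get(entitlement, 0.0)
            recommendations[entitlement] = max(best, rule["confidence"])
    return recommendations


# Hypothetical rules.
sample_rules = [
    {"attributes": {"department": "Sales"}, "entitlement": "crm_read", "confidence": 0.92},
    {"attributes": {"department": "Sales"}, "entitlement": "crm_admin", "confidence": 0.40},
    {"attributes": {"department": "Finance"}, "entitlement": "ledger", "confidence": 0.95},
]
print(recommend({"department": "Sales", "city": "Austin"}, set(), sample_rules))
```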

- Run the recommendations command.

      $ analytics predict-recommendation

  The analytics job displays the JSON output log during the process and refreshes every 15 seconds. If the job completes successfully, you should see the following JSON output at the end of the log:

      ...
      "- Recommend Batch: 1 (1000 features per batch)...",
      "- Recommend Batch: 2 (1000 features per batch)...",
      "- Recommend Batch: 3 (1000 features per batch)...",
      "- Recommend Batch: 4 (1000 features per batch)...",
      "- Recommend Batch: 5 (1000 features per batch)...",
      "- Recommend Batch: 6 (1000 features per batch)...",
      "",
      "Time to Complete: 677.2908701896667s",
      " stderr: "
      ],
      "total": 52
      }

Publish the Analytics Data

Populate the output of the training, predictions, and recommendation runs to a large table with all assignments and justifications for each assignment. The table data is then pushed to the Cassandra or MongoDB backend.

- Run the publish command.

      $ analytics publish

  The analytics job displays the JSON output log during the process and refreshes every 15 seconds. If the job completes successfully, you should see the following JSON output at the end of the log:

      ...
      "+ Creating backups of existing table data",
      " - backup: api.entitlement_average_conf_score",
      " - backup: api.entitlement_assignment_conf_summary",
      " - backup: api.entitlement_driving_factor",
      " - backup: api.search_user",
      "",
      "+ Backup Results",
      " Status",
      "Tables ",
      "entitlement_average_conf_score Success",
      "entitlement_assignment_conf_summary Success",
      "entitlement_driving_factor Success",
      "search_user Success",
      "+ Building dataframes for table load",
      " - Building dataframe: api.entitlement_average_conf_score",
      " - write: api.entitlement_average_conf_score",
      " - Building dataframe: api.entitlement_assignment_conf_summary",
      " - write: api.entitlement_assignment_conf_summary",
      " - Building dataframe: api.entitlement_driving_factor",
      " - write: api.entitlement_driving_factor",
      " - Building dataframe: api.search_user",
      " - write: api.search_user",
      "",
      "+ Load Results",
      " Status",
      "Tables ",
      "entitlement_average_conf_score Success",
      "entitlement_assignment_conf_summary Success",
      "entitlement_driving_factor Success",
      "search_user Success",
      "",
      "Time to Complete: 71.09975361824036s",
      " stderr: "
      ],
      "total": 75
      }

Create Assignment Index

Next, generate the Elasticsearch index using the analytics create-assignment-index command.

- Run the create-assignment-index command.

      $ analytics create-assignment-index

  The analytics job displays the JSON output log during the process and refreshes every 15 seconds. If the job completes successfully, you should see the following JSON output near the end of the log:

      ...
      "21/03/10 06:48:18 INFO EntitlementAssignmentIndex: -----------JOB COMPLETED-----------",
      ...

- Optional. Run the create-assignment-index-report command. The command writes the entire index data to a file at /data/report/assignment-index. Note that the file can get quite large but is useful to back up your CSV data.

      $ analytics create-assignment-index-report
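Because the completion marker for the index job appears near the end of the log rather than as the final line, a scripted check needs to scan for it. The sketch below looks for the `JOB COMPLETED` text shown above and parses the leading timestamp. Reading the timestamp as `yy/MM/dd HH:mm:ss` (the default Spark log4j layout) is an assumption about this deployment's logging configuration.

```python
from datetime import datetime

MARKER = "JOB COMPLETED"


def job_completed_at(log_lines):
    """Return the timestamp of the completion line, or None if it is absent."""
    for line in log_lines:
        if MARKER in line:
            # The first 17 characters hold "yy/MM/dd HH:mm:ss" (assumed layout).
            return datetime.strptime(line.strip()[:17], "%y/%m/%d %H:%M:%S")
    return None


sample_line = (
    "21/03/10 06:48:18 INFO EntitlementAssignmentIndex: "
    "-----------JOB COMPLETED-----------"
)
print(job_completed_at([sample_line]))
```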

Run Insight Report

Next, run an insight report on the rules and predictions generated during the training and predictions runs. The analytics command generates insight_report.txt and insight_report.xlsx and writes them to the /data/input/spark_runs/reports directory.

The report provides the following insights:

- Number of assignments received, scored, and unscored.

- Number of entitlements received, scored, and unscored.

- Number of assignments scored >80% and <5%.

- Distribution of assignment confidence scores.

- List of the high volume, high average confidence entitlements.

- List of the high volume, low average confidence entitlements.

- Top 25 users with more than 10 entitlements.

- Top 25 users with more than 10 entitlements and confidence scores greater than 80%.

- Top 25 users with more than 10 entitlements and confidence scores less than 5%.

- Breakdown of all applications and confidence scores of their assignments.

- Supervisors with most employees and confidence scores of their assignments.

- Top 50 role owners by number of assignments.

- List of the "Golden Rules", high confidence justifications that apply to a large volume of people.

- Run the insight command.

      $ analytics insight

  The analytics job displays the JSON output log during the process and refreshes every 15 seconds. If the job completes successfully, you should see the following JSON output at the end of the log:

      ...
      "Time to Complete: 47.645989656448364s",
      " stderr: "
      ],
      "total": 53
      }

- Access the insight report. The report is available at /data/output/reports in .xlsx format.
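The headline counts in the insight report (assignments received, scored, unscored, and those scored above 80% or below 5%) can be illustrated with a few lines of code. This sketch is not how the report is produced. It assumes confidence scores are expressed as fractions between 0 and 1 and that an unscored assignment is represented by `None`, both of which are hypothetical conventions.

```python
def score_summary(scores, high=0.80, low=0.05):
    """Summarize a list of confidence scores; None marks an unscored assignment."""
    scored = [s for s in scores if s is not None]
    return {
        "received": len(scores),
        "scored": len(scored),
        "unscored": len(scores) - len(scored),
        "above_high": sum(1 for s in scored if s > high),
        "below_low": sum(1 for s in scored if s < low),
    }


print(score_summary([0.95, 0.81, 0.80, 0.03, None, 0.5]))
```

Note that a score exactly equal to a threshold is counted in neither bucket, matching the strict ">80%" and "<5%" wording of the report.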

Run Anomaly Report

Autonomous Identity provides a report on any anomalous entitlement assignments that have a low confidence score but are for entitlements that have a high average confidence score. The report’s purpose is to identify true anomalies rather than poorly managed entitlements.

The report covers the following points:

- Identifies potential anomalous assignments.

- Identifies the number of users who fall below a low confidence score threshold. For example, if 100 people all have low confidence score assignments to the same entitlement, then it is likely not an anomaly. The entitlement is either missing data or the assignment is poorly managed.

- Run the anomaly command to generate the report.

      $ analytics anomaly

  The analytics job displays the JSON output log during the process and refreshes every 15 seconds. If the job completes successfully, you should see the following JSON output at the end of the log:

      "",
      "Time to Complete: 97.65438652038574s",
      " stderr: "
      ],
      "total": 48
      }

- Access the anomaly report. The report is available at /data/output/reports in .csv format.
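The reasoning behind the anomaly report can be sketched as a filter: flag a low-confidence assignment only when its entitlement has a high average confidence and only a few users fall below the low threshold. The thresholds (`low`, `high_average`, `max_low_users`) and the tuple layout are hypothetical values chosen for illustration. The guide does not document the cutoffs that the product applies.

```python
from collections import defaultdict


def find_anomalies(assignments, low=0.05, high_average=0.80, max_low_users=10):
    """assignments: iterable of (user, entitlement, confidence) tuples."""
    by_entitlement = defaultdict(list)
    for user, entitlement, confidence in assignments:
        by_entitlement[entitlement].append((user, confidence))

    anomalies = []
    for entitlement, rows in by_entitlement.items():
        average = sum(c for _, c in rows) / len(rows)
        low_rows = [(u, c) for u, c in rows if c < low]
        # Many low scores suggest missing data or poor management, not anomalies.
        if average >= high_average and 0 < len(low_rows) <= max_low_users:
            anomalies.extend((u, entitlement, c) for u, c in low_rows)
    return anomalies


# Hypothetical data: one outlier on a well-understood entitlement,
# plus an entitlement where every assignment scores low.
sample_assignments = [
    ("u1", "vpn", 0.95), ("u2", "vpn", 0.97), ("u3", "vpn", 0.96),
    ("u4", "vpn", 0.98), ("u5", "vpn", 0.99), ("u6", "vpn", 0.02),
    ("u7", "legacy", 0.01), ("u8", "legacy", 0.02),
]
print(find_anomalies(sample_assignments))
```

In the sample, only the `vpn` assignment for `u6` is flagged. The `legacy` entitlement is skipped because its average confidence is low, which points to a poorly managed entitlement rather than a true anomaly.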