Tuning Performance

Tune deployments in the following steps:

- Consider the issues that impact the performance of a deployment. See Defining Performance Requirements and Constraints.
- Tune and test the downstream servers and applications:
  - Tune the downstream web container and JVM to achieve performance targets.
  - Test downstream servers and applications in a pre-production environment, under the expected load, and with common use cases.
  - Make sure that the configuration of the downstream web container can form the basis for IG and its container.
- Tune IG and its web container:
  - Optimize IG performance, throughput, and response times. See Tuning IG.
  - Configure IG connections to downstream services and protected applications. See Tuning the ClientHandler/ReverseProxyHandler.
  - Configure connections in the IG web container. See Tuning IG’s Tomcat Container.
  - Configure the IG JVM to support the required throughput. See Tuning IG’s JVM.
- Increase hardware resources as required, and then re-tune the deployment.

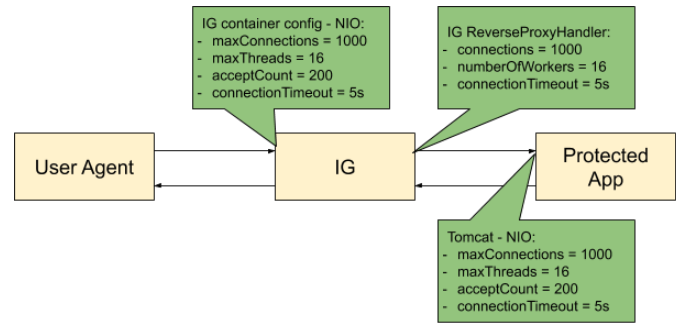

The following figure shows an example configuration for IG, its container, and the container for the protected app:

Defining Performance Requirements and Constraints

When you consider performance requirements, bear in mind the following points:

- The effect of the capabilities and limitations of downstream services or applications on your performance goals.
- The increase in response time due to the extra network hop and processing, when IG is inserted as a proxy in front of a service or application.
- The constraint that downstream limitations and response times place on IG and its container.

Service Level Objectives

A service level objective (SLO) is a target that you can measure quantitatively. Where possible, define SLOs to set out what performance your users expect. Even if your first version of an SLO consists of guesses, it is a first step towards creating a clear set of measurable goals for your performance tuning.

When you define SLOs, bear in mind that IG can depend on external resources that can impact performance, such as AM’s response time for token validation, policy evaluation, and so on. Consider measuring remote interactions to take dependencies into account.

Consider defining SLOs for the following metrics of a route:

- Average response time for a route.

  The response time is the time to process and forward a request, and then receive, process, and forward the response from the protected application.

  The average response time can range from less than a millisecond, for a low latency connection on the same network, to however long it takes your network to deliver the response.

- Distribution of response times for a route.

  Because applications set timeouts based on worst case scenarios, the distribution of response times can be more important than the average response time.

- Peak throughput.

  The maximum rate at which requests can be processed at peak times. Because applications are limited by their peak throughput, this SLO is arguably more important than an SLO for average throughput.

- Average throughput.

  The average rate at which requests are processed.

Metrics are returned at the monitoring endpoints. For information about monitoring endpoints, see Monitoring. For examples of how to set up monitoring in IG, see Monitoring Services.
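As a rough illustration of the four metrics listed above, the following Python sketch computes them from a list of request samples. The sample data, the function names, and the one-second throughput window are assumptions made for this example; they are not part of IG, and in a real deployment the values would come from the monitoring endpoints.

```python
import math
from collections import Counter

def percentile(values, pct):
    """Nearest-rank percentile: the smallest value with at least pct% of samples at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]

def summarize(samples):
    """Summarize samples given as (completion_time_seconds, response_time_ms) tuples."""
    response_times = [rt for _, rt in samples]
    # Count completed requests in each one-second window.
    per_second = Counter(int(t) for t, _ in samples)
    duration = max(per_second) - min(per_second) + 1
    return {
        "average_ms": sum(response_times) / len(response_times),
        "p95_ms": percentile(response_times, 95),
        "average_rps": len(samples) / duration,
        "peak_rps": max(per_second.values()),
    }

# Hypothetical samples: eight requests over three seconds, one of them slow.
samples = [
    (0.1, 12), (0.4, 15), (0.9, 11),
    (1.2, 14), (1.3, 250), (1.5, 13), (1.8, 16),
    (2.6, 12),
]
summary = summarize(samples)
print(summary)
```

With this data, the average response time is 42.875 ms, although seven of the eight requests complete in 16 ms or less. The 95th percentile (250 ms) exposes the slow request that a timeout would have to accommodate, which is why an SLO on the distribution can matter more than one on the average.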

Available Resources

With your SLOs defined, inventory the servers, networks, storage, people, and other resources. Estimate whether it is possible to meet the requirements with the resources at hand.

Benchmarks

Before you can improve the performance of your deployment, establish an accurate benchmark of its current performance. Consider creating a deployment scenario that you can control, measure, and reproduce.

For information about running benchmark tests on IG as part of ForgeOps, refer to ForgeOps' CDM Benchmarks.

Tuning IG

Consider the following recommendations for improving performance, throughput, and response times. Adjust the tuning to your system workload and available resources, and then test suggestions before rolling them out into production.

Logs

Log messages in IG and third-party dependencies are recorded using the Logback implementation of the Simple Logging Facade for Java (SLF4J) API. By default, the logging level is INFO.

To reduce the number of log messages, consider setting the logging level to error. For information, see Managing Logs.

Buffering Message Content

IG creates a TemporaryStorage object to buffer content during processing. For information about this object and its default values, see TemporaryStorage.

Messages bigger than the buffer size are written to disk, consuming I/O resources and reducing throughput.

The default size of the buffer is 64 KB. If the messages that your application processes concurrently are generally bigger than the default, consider allocating more heap memory or changing the initial or maximum size of the buffer.

To change the values, add a TemporaryStorage object named TemporaryStorage, and use non-default values.
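To reason about a buffer size before changing it, a back-of-the-envelope calculation can help. The following Python sketch is a simplification under stated assumptions: it treats each in-flight message as using one in-memory buffer up to the buffer size, and it uses hypothetical message sizes. It does not call any IG API; only the 64 KB default comes from the text above.

```python
DEFAULT_BUFFER_BYTES = 64 * 1024  # default buffer size stated above (64 KB)

def spilled_fraction(message_sizes, buffer_bytes=DEFAULT_BUFFER_BYTES):
    """Fraction of messages bigger than the buffer, which would be written to disk."""
    spilled = sum(1 for size in message_sizes if size > buffer_bytes)
    return spilled / len(message_sizes)

def worst_case_buffer_heap(concurrent_messages, buffer_bytes=DEFAULT_BUFFER_BYTES):
    """Upper bound on heap used if every concurrent message fills its buffer (assumption)."""
    return concurrent_messages * buffer_bytes

# Hypothetical message sizes, in bytes, taken from a traffic sample.
sizes = [4_000, 20_000, 70_000, 150_000]

print(spilled_fraction(sizes))                 # with the 64 KB default
print(spilled_fraction(sizes, 256 * 1024))     # with a hypothetical 256 KB buffer
print(worst_case_buffer_heap(1000))            # bytes for 1000 concurrent messages
```

In this example, half of the messages exceed the default buffer, and none exceed a 256 KB buffer. The cost of the bigger buffer is a worst-case heap estimate that grows from about 65 MB to about 262 MB for 1000 concurrent messages, which is the trade-off between disk I/O and heap memory described above.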

Cache

When caches are enabled, IG can reuse cached information without making additional or repeated queries for the information. This gives the advantage of higher system performance, but the disadvantage of lower trust in results.

During service downtime, the cache is not updated, and important notifications can be missed, such as for the revocation of tokens or the update of policies, and IG can continue to use outdated tokens or policies.

When caches are disabled, IG must query a data store each time it needs data. This gives the disadvantage of lower system performance, and the advantage of higher trust in results.

When you configure caches in IG, make choices to balance your required performance with your security needs.

IG provides the following caches:

- Session cache

  After a user authenticates with AM, this cache stores information about the session. IG can reuse the information without asking AM to verify the session token (SSO token or CDSSO token) for each request.

  If WebSocket notifications are enabled, the cache evicts entries based on session notifications from AM, making the cache content more accurate (trustable).

  By default, the session information is not cached. To increase performance, consider enabling and configuring the cache. For more information, see sessionCache in AmService.

- Policy cache

  After an AM policy decision, this cache stores the decision. IG can reuse the policy decision without repeatedly asking AM for a new policy decision.

  If WebSocket notifications are enabled, the cache evicts entries based on policy notifications from AM, making the cache content more accurate (trustable).

  By default, policy decisions are not cached. To increase performance, consider enabling and configuring the cache. For more information, see PolicyEnforcementFilter.

- User profile cache

  When the UserProfileFilter retrieves user information, it caches it. IG can reuse the cached data without repeatedly querying AM to retrieve it.

  By default, profile attributes are not cached. To increase performance, consider enabling and configuring the cache. For more information, see UserProfileFilter.

- Access token cache

  After a user presents an access_token to the OAuth2ResourceServerFilter, this cache stores the token. IG can reuse the token information without repeatedly asking the authorization server to verify the access_token for each request.

  By default, access_tokens are not cached. To increase performance by caching access_tokens, consider configuring a cache in one of the following ways:

  - Configure a CacheAccessTokenResolver for a cache based on Caffeine. For more information, see CacheAccessTokenResolver.
  - Configure the cache property of OAuth2ResourceServerFilter. For more information, see OAuth2ResourceServerFilter.

- OpenID Connect user information cache

  When a downstream filter or handler needs user information from an OpenID Connect provider, IG fetches it lazily. By default, IG caches the information for 10 minutes to prevent repeated calls over a short time.

  For more information, see cacheExpiration in OAuth2ClientFilter.

All caches provide similar configuration properties for timeout, which define how long entries are cached. When the timeout is lower, cache entries are evicted more frequently; consequently, performance is lower but trust in results is higher. Consider your requirements for performance and security when you configure the timeout properties for each cache.
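The effect of the timeout on the number of backend queries can be sketched with a generic time-based cache. The following Python example is not IG's cache implementation; the class, the function names, and the request pattern are hypothetical and serve only to show how a shorter timeout trades performance for fresher results.

```python
import time

class TtlCache:
    """Minimal time-based cache: an entry is reused until it is older than the timeout."""

    def __init__(self, timeout_seconds, loader, clock=time.monotonic):
        self.timeout_seconds = timeout_seconds
        self.loader = loader      # function that queries the backing service
        self.clock = clock        # injectable clock, so the example can simulate time
        self.entries = {}         # key -> (value, time_stored)
        self.loads = 0            # number of queries sent to the backing service

    def get(self, key):
        now = self.clock()
        entry = self.entries.get(key)
        if entry is not None and now - entry[1] < self.timeout_seconds:
            return entry[0]       # still fresh: no backend query
        value = self.loader(key)  # missing or expired: query the backend again
        self.loads += 1
        self.entries[key] = (value, now)
        return value

def count_backend_calls(timeout_seconds, request_times):
    """Replay requests at the given simulated times and count backend queries."""
    current = [0.0]
    cache = TtlCache(timeout_seconds, loader=lambda key: "decision",
                     clock=lambda: current[0])
    for t in request_times:
        current[0] = t
        cache.get("token")
    return cache.loads

# Hypothetical load: one request every 5 seconds for one minute (12 requests).
request_times = list(range(0, 60, 5))
for timeout in (0, 10, 30):
    print(timeout, count_backend_calls(timeout, request_times))
```

For this request pattern, a timeout of 30 seconds results in 2 backend queries, a timeout of 10 seconds in 6, and a timeout of 0 (effectively no caching) in 12. The longer the timeout, the longer a revoked token or changed policy could remain in use.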

WebSocket Notifications

By default, IG receives WebSocket notifications from AM for the following events:

- When a user logs out of AM, or when the AM session is modified, closed, or times out. IG can use WebSocket notifications to evict entries from the session cache.

  For an example of setting up session cache eviction, see Use WebSocket Notifications to Evict the Session Info Cache.

- When AM creates, deletes, or changes a policy decision. IG can use WebSocket notifications to evict entries from the policy cache.

  For an example of setting up cache eviction, see Using WebSocket Notifications to Evict the Policy Cache.

If the WebSocket connection is lost, during the time the WebSocket is not connected, IG behaves as follows:

- Responds to session service calls with an empty SessionInfo result.

  When the SingleSignOn filter receives an empty SessionInfo result, it concludes that the user is not logged in, and triggers a login redirect.

- Responds to policy evaluations with a deny policy result.

By default, IG waits for five seconds before trying to re-establish the connection. If it can’t re-establish the connection, it keeps trying every five seconds.

To disable WebSocket notifications, or change any of the parameters, configure the notifications property in AmService. For information, see AmService.

Tuning the ClientHandler/ReverseProxyHandler

The ClientHandler/ReverseProxyHandler communicates as a client to a downstream third-party service or protected application. The performance of the communication is determined by the following parameters:

- The number of available connections to the downstream service or application.
- The number of IG worker threads allocated to service inbound requests, and manage propagation to the downstream service or application.
- The connection timeout, or maximum time to connect to a server-side socket, before timing out and abandoning the connection attempt.
- The socket timeout, or the maximum time a request is expected to take before a response is received, after which the request is deemed to have failed.
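The difference between the connection timeout and the socket timeout can be seen with plain TCP sockets. The following Python sketch is an illustration only and does not reflect how IG implements its handlers: the helper names are hypothetical, and the "downstream application" is a local socket that accepts connections at the operating system level but never sends a response.

```python
import socket

def start_silent_server():
    """Listen on a free local port but never reply, to simulate a stalled application."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))   # port 0 lets the OS choose a free port
    server.listen(1)                # the OS completes TCP handshakes for queued clients
    return server, server.getsockname()[1]

def call_downstream(port, connect_timeout, socket_timeout):
    """Return which stage of the exchange failed, if any."""
    try:
        # Connection timeout: maximum time to establish the TCP connection.
        sock = socket.create_connection(("127.0.0.1", port), timeout=connect_timeout)
    except OSError:
        return "connection failed"
    with sock:
        # Socket timeout: maximum time to wait for response data once connected.
        sock.settimeout(socket_timeout)
        sock.sendall(b"GET / HTTP/1.1\r\nHost: localhost\r\n\r\n")
        try:
            sock.recv(1024)
        except socket.timeout:
            return "socket timeout"
    return "response received"

server, port = start_silent_server()
outcome = call_downstream(port, connect_timeout=1.0, socket_timeout=0.2)
server.close()
print(outcome)
```

Here the connection is established well within the connection timeout, but no response arrives, so the request fails on the socket timeout. A socket timeout that is shorter than the normal latency of the downstream application would cause healthy requests to fail in the same way.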

ClientHandler/ReverseProxyHandler Tuning in Web Container Mode

Configure IG in conjunction with the Tomcat container, as follows:

- For BIO Connector (Tomcat 3.x to 8.x), configure maxThreads in Tomcat to be close to the number of configured Tomcat connections.

  Because IG uses an asynchronous threading model, the numberOfWorkers in ClientHandler/ReverseProxyHandler can be much lower. The asynchronous threads are freed up immediately after the request is propagated, and can service another blocking Tomcat request thread.

  To take advantage of IG’s asynchronous thread model, configure Tomcat to use a non-blocking, NIO or NIO2 connector, instead of a BIO connector.

- For NIO connectors, align numberOfWorkers in IG with maxThreads in Tomcat.

  Because NIO connectors use an asynchronous threading model, the maxThreads in Tomcat can be much lower than for a BIO connector.

To identify the throughput plateau, test in a pre-production performance environment, with realistic use cases. Increment numberOfWorkers from its default value of one thread per JVM core, up to a large maximum value based on the number of concurrent connections.
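One way to read the results of such a test series is to look for the point where adding workers no longer improves throughput by a meaningful margin. The following Python sketch assumes hypothetical load-test results and a hypothetical 5% threshold for what counts as a meaningful gain; neither value comes from IG.

```python
def find_plateau(measurements, min_gain=0.05):
    """Return the last (workers, throughput) pair before throughput stops improving.

    measurements: (number_of_workers, requests_per_second) pairs, in ascending
    order of workers. min_gain: relative improvement below which an increase is
    treated as noise (an assumption; choose a value that suits your tests).
    """
    best_workers, best_rps = measurements[0]
    for workers, rps in measurements[1:]:
        if rps < best_rps * (1 + min_gain):
            break  # less than min_gain improvement: the plateau is reached
        best_workers, best_rps = workers, rps
    return best_workers, best_rps

# Hypothetical results from repeating the same load test with more workers.
results = [(4, 1800), (8, 3500), (16, 6400), (32, 6550), (64, 6500)]
print(find_plateau(results))
```

With these figures, throughput roughly doubles up to 16 workers and then levels off, so the sketch reports 16 workers at 6400 requests per second. Values beyond the plateau consume threads without improving throughput.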

ClientHandler/ReverseProxyHandler Tuning in Standalone Mode

Configure IG in conjunction with IG’s first-class Vert.x configuration, and the vertx property of admin.json. For more information, see AdminHttpApplication (admin.json).

| Object | Vert.x Option | Description |
|---|---|---|
| IG (first-class) | | The number of Vert.x Verticle instances to deploy. Each instance operates on the same port on its own event-loop thread. This setting effectively determines the number of cores that IG operates across, and therefore, the number of available threads. Default: The number of cores. |
| root.vertx | | The number of available event-loop threads to be supplied to instances. Specify a value greater than the number of Verticle instances. Default: 20 |
| root.connectors.<connector>.vertx | | The maximum number of connections to queue before refusing requests. |
| | | TCP connection send buffer size. Set this property according to the available RAM and required number of concurrent connections. |
| | | TCP receive buffer size. Set this property according to the available RAM and required number of concurrent connections. |

| Object | Vert.x Option | Description |
|---|---|---|
| root.vertx | | Interval at which Vert.x checks for blocked threads and logs a warning. Default: One second. |
| | | Maximum time executing before Vert.x logs a warning. Default: Two seconds. |
| | | Threshold at which warning logs are accompanied by a stack trace to identify causes. Default: Five seconds. |
| | | Log network activity. |

Tuning IG’s Tomcat Container

Configure the Tomcat container in conjunction with IG, as described in Tuning the ClientHandler/ReverseProxyHandler.

To take advantage of IG’s asynchronous thread model, configure Tomcat to use a non-blocking, NIO or NIO2 connector. Consider configuring the following connector attributes:

- maxConnections
- connectionTimeout
- soTimeout
- acceptCount
- executor
- maxThreads
- minSpareThreads

For more information, see Apache Tomcat 9 Configuration Reference and Apache Tomcat 8 Configuration Reference.

Set the Maximum Number of File Descriptors and Processes Per User

Each IG instance in your environment should have access to at least 65,536 file descriptors to handle multiple client connections.

Ensure that every IG instance is allocated enough file descriptors.

For example, use the ulimit -n command to check the limits for a particular user:

$ su - iguser

$ ulimit -n

It may also be necessary to increase the number of processes available to the user running the IG processes.

For example, use the ulimit -u command to check the process limits for a user:

$ su - iguser

$ ulimit -u

Before increasing the file descriptors for the IG instance, ensure that the total number of file descriptors configured for the operating system is higher than 65,536. If the IG instance uses all of the file descriptors, the operating system will run out of file descriptors. This may prevent other services from working, including those required for logging in to the system.

Refer to your operating system’s documentation for instructions on how to display and increase the file descriptors or process limits for the operating system and for a given user.
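The same limits can also be checked from a script, for example as part of a pre-deployment check. The following Python sketch uses the standard resource module, which is available on Unix-like systems only. It reports the limits of the user running the script, so it would need to run as the IG user (iguser in the example above). The function name and the way the threshold is applied are assumptions for this example.

```python
import resource

REQUIRED_FILE_DESCRIPTORS = 65536  # minimum recommended above

def limit_is_sufficient(soft_limit, required):
    """Return True if a soft limit is unlimited or at least the required value."""
    if soft_limit == resource.RLIM_INFINITY:
        return True
    return soft_limit >= required

# Each call returns the (soft, hard) limits for the current process and user.
soft_nofile, hard_nofile = resource.getrlimit(resource.RLIMIT_NOFILE)
soft_nproc, hard_nproc = resource.getrlimit(resource.RLIMIT_NPROC)

print("file descriptors (soft, hard):", soft_nofile, hard_nofile)
print("processes (soft, hard):", soft_nproc, hard_nproc)
print("enough file descriptors:",
      limit_is_sufficient(soft_nofile, REQUIRED_FILE_DESCRIPTORS))
```

The soft limit is the value that ulimit -n reports by default and the one that applies to the running process. The hard limit is the ceiling up to which an unprivileged user can raise the soft limit.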

Tuning IG’s JVM

Start tuning the JVM with default values, and monitor the execution, paying particular attention to memory consumption, and to garbage collection (GC) time and frequency. Incrementally adjust the configuration, and retest to find the best settings for memory and garbage collection.

Make sure that there is enough memory to accommodate the peak number of required connections, and make sure that timeouts in IG and its container support latency in downstream servers and applications.

IG makes low memory demands, and consumes mostly YoungGen memory. However, using caches, or proxying large resources, increases the consumption of OldGen memory. For information about how to optimize JVM memory, see the Oracle documentation.

Consider these points when choosing a JVM:

- Find out which version of the JVM is available. More recent JVMs usually contain performance improvements, especially for garbage collection.
- Choose a 64-bit JVM if you need to maximize available memory.

Consider these points when choosing a GC:

- Test GCs in realistic scenarios and under load in a pre-production environment.
- Choose a GC that is adapted to your requirements and limitations. Consider comparing the Garbage-First Collector (G1) and Parallel GC in typical business use cases.

  The G1 is targeted for multi-processor environments with large memories. It provides good overall performance without the need for additional options. The G1 is designed to reduce garbage collection pauses, giving low GC latency. It is largely self-tuning, with an adaptive optimization algorithm.

  The Parallel GC aims to improve garbage collection by following a high-throughput strategy, but it requires more full garbage collections.

For more information, see Best practice for JVM Tuning with G1 GC.