Run the Analytics Pipeline

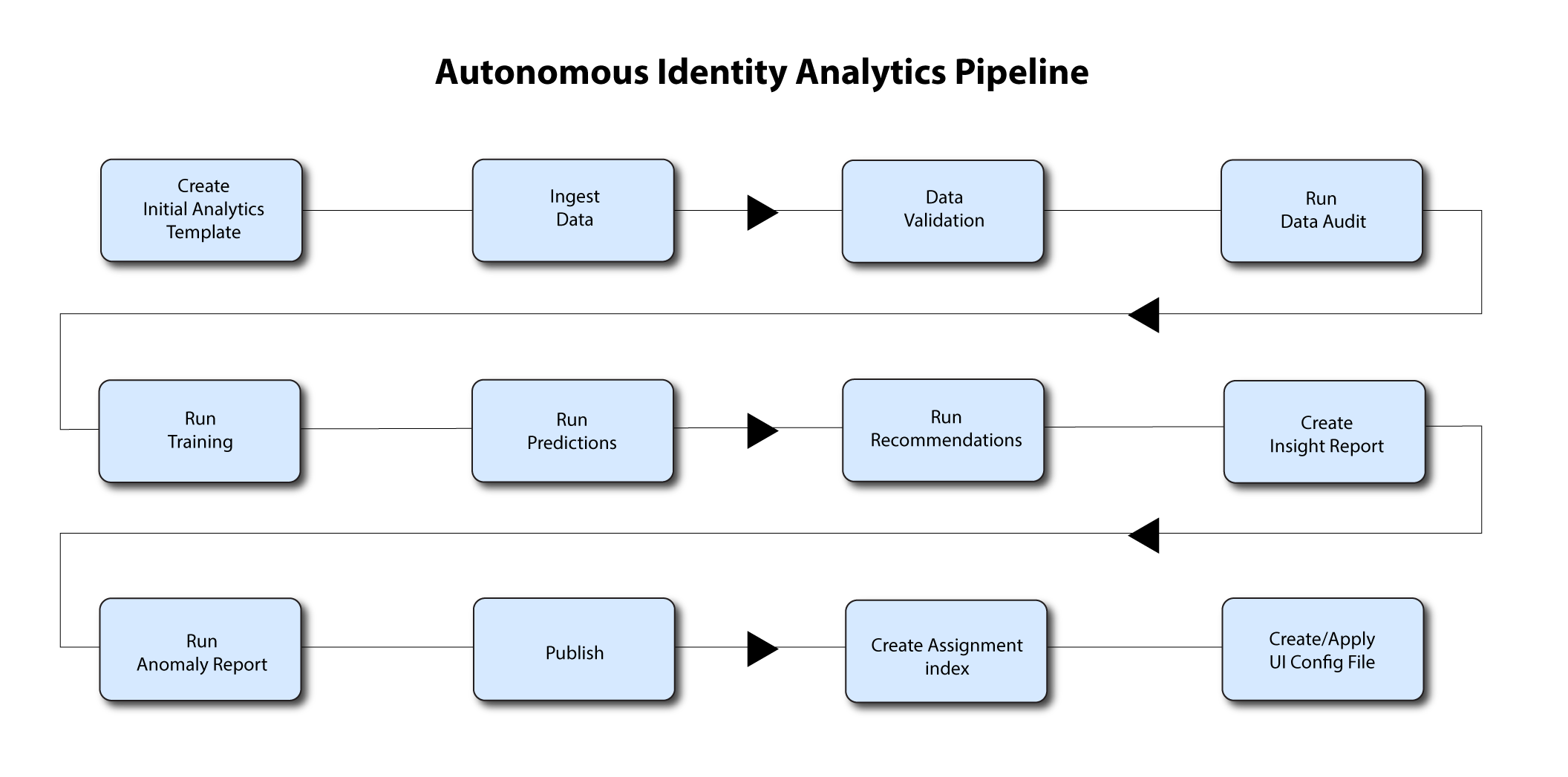

The Analytics pipeline is the heart of Autonomous Identity. It analyzes, calculates, and determines the association rules, confidence scores, predictions, and recommendations for assigning entitlements to the users.

The analytics pipeline is an intensive processing operation that can take some time depending on your dataset and configuration. To ensure an accurate analysis, the data needs to be as complete as possible, with few or no null values. Once you have prepared the data, you must run a series of analytics jobs to ensure an accurate rendering of the entitlements and confidence scores.

The initial pipeline step is to create, edit, and apply the analytics_init_config.yml configuration file, which configures the key properties for the analytics pipeline. In general, you will not need to change much in this file apart from the Spark configuration options. For more information, see Prepare Spark Environment.

Next, run a job to validate the data and, when the results are acceptable, ingest the data into the database. After that, run a final audit of the data to ensure accuracy. If everything passes, run the data through its initial training process to create the association rules for each user-assigned entitlement. This is a fairly intensive operation, as the analytics service can generate a million or more association rules. Once the association rules have been determined, they are applied to user-assigned entitlements.

After the training run, run predictions to determine the current confidence scores for all assigned entitlements. Then run a recommendations job that looks at all users who do not have a specific entitlement but should, based on their user attribute data. Once the predictions and recommendations are complete, run an insight report to get a summary of the analytics pipeline run, and an anomaly report that lists any anomalous entitlement assignments.

The final steps are to push the data to the backend Cassandra database, and then configure and apply any UI configuration changes to the system.

The general analytics process is outlined as follows:

Note

The analytics pipeline requires that DNS properly resolves the hostname before the pipeline starts. Make sure to set the hostname on your DNS server or locally in your /etc/hosts file.
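
To confirm the note above before a run, you can ask the system resolver whether the hostname resolves. This is a minimal sketch, assuming a Linux host with the getent utility (shipped with glibc on most distributions), which consults the same sources as other programs, DNS and /etc/hosts, in the order set in /etc/nsswitch.conf. The host_resolves name is hypothetical.

```bash
#!/usr/bin/env bash
# Pre-flight sketch: confirm a hostname resolves before starting the pipeline.
# Assumption: getent is installed (it is part of glibc-based Linux systems).

# Succeeds if HOST resolves through the system resolver (DNS or /etc/hosts).
host_resolves() {
  getent hosts "$1" >/dev/null
}

# Example (not run automatically):
#   host_resolves "$(hostname)" || echo "$(hostname) does not resolve" >&2
```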

Analytic Actions

The Deployer-based installation of the analytics services provides an "analytics" alias (alias analytics='docker exec -it analytics bash analytics') on the server, with which you can perform a number of actions for configuration or to run the pipeline on the target machine.

| Command | Description |
|---|---|
| analytics create-template | Run this command to create the analytics_init_config.yml file. |
| analytics apply-template | Apply the changes made to the analytics_init_config.yml file. |
| analytics ingest | Ingest data into Autonomous Identity. |
| analytics validate | Run data validation. |
| analytics audit | Run a data audit to ensure the data meets the specifications. |
| analytics train | Start an analytics training run. |
| analytics predict-as-is | Run as-is predictions. |
| analytics predict-recommendation | Run recommendations. |
| analytics insight | Create the Insights report. |
| analytics anomaly | Create the Anomaly report. |
| analytics publish | Push the data to the Apache Cassandra backend. |
| analytics create-ui-config | Create the ui_config.json file. |
| analytics apply-ui-config | Apply the ui_config.json file. |
| analytics run-pipeline | Run all of the pipeline commands at once in the following order: validate, ingest, audit, train, predict-as-is, predict-recommendation, publish, create-ui-config, apply-ui-config. |
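
The analytics alias works in an interactive shell, but bash does not expand aliases in non-interactive scripts by default. If you want to script a subset of the actions in the table, a shell function is a more dependable wrapper. This is a minimal sketch, assuming the container is named analytics (as in the alias) and that each action exits with a non-zero status when it fails; the run_steps helper is hypothetical. It drops the -it flags because a script may run without a terminal.

```bash
#!/usr/bin/env bash
# Sketch: run selected analytics actions in order, stopping at the first failure.
# Aliases are not expanded in non-interactive scripts, so the alias is
# re-created as a function. Assumption: -it is not needed when scripted.
analytics() {
  docker exec analytics bash analytics "$@"
}

# Run each action given as an argument; return 1 at the first failing action.
run_steps() {
  local step
  for step in "$@"; do
    echo ">>> analytics $step"
    if ! analytics "$step"; then
      echo "analytics $step failed; stopping" >&2
      return 1
    fi
  done
}

# Example (not run automatically):
#   run_steps validate ingest audit train
```

Running the steps one at a time this way shows exactly which action stopped a partial run.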

Create Initial Analytics Template

The main configuration file for the Analytics service is analytics_init_config.yml. You generate this file by running the analytics create-template command.

On the target node, create the initial configuration template. The command generates the analytics_init_config.yml file in the /data/conf/ directory.

$ analytics create-template

Edit the analytics_init_config.yml file for the Spark machine. Make sure to edit the user_column_descriptions and the spark.total.cores fields if you are submitting your own dataset. For more information, see Appendix A: The analytics_init_config.yml File.

Copy the .csv files to the /data/input folder. Note that if you are using the sample dataset, it is located in the /data/conf/demo-data directory.

$ cp *.csv /data/input/

Apply the template to the analytics service. The command generates the analytics_config.yml file in the /data/conf/ directory. Autonomous Identity uses this configuration for other analytic jobs.

Note

Do not edit the analytics_config.yml file directly. If you want to make any additional configuration changes, edit the analytics_init_config.yml file again, and then re-apply the changes using the analytics apply-template command.

$ analytics apply-template
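
Before applying the template and running the jobs, it can help to confirm that the input files are where the pipeline expects them. A minimal sketch, assuming the /data/input location described above; missing_inputs and count_csv are hypothetical helper names, and the expected file names are passed in rather than hard-coded.

```bash
#!/usr/bin/env bash
# Sketch: check the input directory before "analytics apply-template" and the
# jobs that follow. Directory and file names are arguments, not hard-coded.

# Print each expected file that is missing from DIR; return 1 if any is missing.
missing_inputs() {
  local dir=$1 f rc=0
  shift
  for f in "$@"; do
    if [ ! -f "$dir/$f" ]; then
      echo "missing: $dir/$f"
      rc=1
    fi
  done
  return "$rc"
}

# Print the number of .csv files directly inside DIR.
count_csv() {
  find "$1" -maxdepth 1 -type f -name '*.csv' | wc -l
}

# Example (not run automatically; six of the seven files are named in
# "Run Data Validation"):
#   missing_inputs /data/input features.csv labels.csv HRName.csv \
#     RoleOwner.csv AppToEnt.csv JobAndDeptDesc.csv
#   [ "$(count_csv /data/input)" -eq 7 ] || echo "expected seven .csv files" >&2
```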

Ingest the Data Files

By this point, you should have prepared and validated the data files for ingestion into Autonomous Identity. This process imports the seven .csv files into the Cassandra database.

Ingest the data into the Cassandra database:

Make sure Cassandra is up and running.

Make sure you have determined your Spark configuration in terms of the number of executors and memory.

Run the data ingestion command.

$ analytics ingest

You should see the following output if the job completed successfully:

Script: /usr/local/lib/python3.6/site-packages/zoran_pipeline_scripts-0.32.0-py3.6.egg/EGG-INFO/scripts/zinput_data_validation_script.py is successful
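
The first prerequisite above asks you to make sure Cassandra is running. A quick, partial check is whether anything accepts connections on Cassandra's CQL port. This sketch assumes bash (for its /dev/tcp redirection), the coreutils timeout command, and Cassandra's default CQL port, 9042; the host name and the port_open helper are placeholders. An open port does not prove the cluster is healthy; running nodetool status on the Cassandra node gives a fuller picture.

```bash
#!/usr/bin/env bash
# Sketch: confirm something is listening on Cassandra's CQL port before
# "analytics ingest". Assumptions: default CQL port 9042; HOST is your
# Cassandra node's address.

# Succeeds if a TCP connection to HOST:PORT opens within 3 seconds.
port_open() {
  local host=$1 port=${2:-9042}
  # bash -c is used explicitly because /dev/tcp is a bash feature.
  timeout 3 bash -c ': < "/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null
}

# Example (not run automatically; replace the hostname with your own):
#   port_open cassandra-host.example.com 9042 || echo "Cassandra is not reachable" >&2
```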

Run Data Validation

Next, run the analytics validate command to check that the input data is accurate before you run it through the Analytics service.

Validate the data.

$ analytics validate

You should see the following output if the job completed successfully:

Script: /usr/local/lib/python3.6/site-packages/zoran_pipeline_scripts-0.32.0-py3.6.egg/EGG-INFO/scripts/zinput_data_validation_script.py is successful
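
Each job on this page prints a line ending in "is successful" when it completes. If you capture a job's output to a file, you can check for that line in a script. A sketch, assuming the output format shown above stays the same across jobs; the log file name and the job_succeeded helper are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: decide whether a job succeeded from its captured console output.
# Assumption: output was saved to a file, for example:
#   analytics validate 2>&1 | tee validate.log

# Succeeds if LOGFILE contains a "Script: ... is successful" line.
# Both "Script:" and "Script :" appear in the sample output, so the space is optional.
job_succeeded() {
  grep -Eq '^Script ?: .+ is successful' "$1"
}

# Example (not run automatically):
#   job_succeeded validate.log || echo "validation did not report success" >&2
```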

The validation script outputs a .csv file with 32 pass/fail tests across the seven input files. You can use the validation report to fix any issues with your data files. After the fixes, you can re-run the validation script to check that your files are complete.

The command checks the following items:

All files contain the correct columns and column names.

No duplicate rows in the files.

No null values in the files.

Check features.csv:

No missing USR_KEY, USR_MANAGER_KEY, or USR_EMP_TYPE values.

No duplicate USR_KEY values. There should only be one row of entitlements per user. For example, if the user has six entitlements, there should be six rows, one for each entitlement assignment.

All USR_MANAGER_KEY values should also exist as USR_KEY values. This ensures that we have the user attribute information for all managers.

Check labels.csv:

All USR_KEY values in labels.csv should also exist in the features.csv file.

Check HRName.csv:

No duplicate USR_KEY values.

All USR_KEY values in HRName.csv should also exist in the features.csv file.

Check RoleOwner.csv:

No duplicate ENT values.

All USR_KEY values in RoleOwner.csv should also exist in the features.csv file.

All ENT values in RoleOwner.csv should also exist in the labels.csv file.

Check AppToEnt.csv:

No duplicate ENT values.

All ENT values in AppToEnt.csv should also exist in the labels.csv file.

Check JobAndDeptDesc.csv:

No duplicate USR_KEY values.

All USR_KEY values in JobAndDeptDesc.csv should also exist in the features.csv file.
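
A few of the checks listed above can be approximated with awk if you want to find problems in a file before running analytics validate. This is a sketch, not the validation script itself, and it assumes each file is plain comma-separated text with a header row and no quoted fields containing commas. The helper names are hypothetical; the column and file names come from the list above.

```bash
#!/usr/bin/env bash
# Sketch of a few validation checks (not the product's validation script).
# Assumptions: plain comma-separated files, a header row, no quoted commas.

# Print each value of column COL that occurs more than once in FILE.
# Usage: dup_values FILE COL
dup_values() {
  awk -F, -v col="$2" '
    { sub(/\r$/, "") }                                  # tolerate CRLF line endings
    NR == 1 { for (i = 1; i <= NF; i++) if ($i == col) c = i; next }
    c && $c != "" && seen[$c]++ == 1 { print $c }      # report each duplicate once
  ' "$1"
}

# Print the line number of each row in FILE where column COL is empty.
# Usage: missing_values FILE COL
missing_values() {
  awk -F, -v col="$2" '
    { sub(/\r$/, "") }
    NR == 1 { for (i = 1; i <= NF; i++) if ($i == col) c = i; next }
    c && $c == "" { print NR }
  ' "$1"
}

# Print each value of CHILD_COL in CHILD_FILE that never appears in
# PARENT_COL of PARENT_FILE.
# Usage: orphan_values CHILD_FILE CHILD_COL PARENT_FILE PARENT_COL
orphan_values() {
  awk -F, -v ccol="$2" -v pcol="$4" '
    { sub(/\r$/, "") }
    FNR == 1 {                                          # header of either file: locate the column
      want = (NR == FNR) ? pcol : ccol
      c = 0
      for (i = 1; i <= NF; i++) if ($i == want) c = i
      next
    }
    NR == FNR { if (c) parent[$c] = 1; next }           # PARENT_FILE is read first
    c && $c != "" && !($c in parent) && !reported[$c]++ { print $c }
  ' "$3" "$1"
}

# Examples (not run automatically):
#   dup_values /data/input/features.csv USR_KEY
#   missing_values /data/input/features.csv USR_MANAGER_KEY
#   orphan_values /data/input/features.csv USR_MANAGER_KEY /data/input/features.csv USR_KEY
#   orphan_values /data/input/RoleOwner.csv ENT /data/input/labels.csv ENT
```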

Run Data Audit

Before starting the training run, you need to do one final audit of the data. The audit runs through the seven .csv files as loaded into the database and generates initial metrics for your company.

Run the Data Audit as follows:

Verify that the .csv files are in the /data/input/ directory.

Run the audit command.

$ analytics audit

You should see the following output if the job completed successfully:

Script : /usr/local/lib/python3.6/site-packages/zoran_pipeline_scripts-0.32.0-py3.6.egg/EGG-INFO/scripts/zinput_data_audit_script.py is successful

The script provides the following metrics:

| File | Description |
|---|---|
| features.csv | |
| labels.csv | |
| RoleOwner.csv | |
| AppToEnt.csv | |
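
To sanity-check the audit figures, you can compute a few simple counts from labels.csv yourself. The counts below (rows, distinct users, distinct entitlements) are illustrative and are not necessarily the metrics the audit script reports. The sketch assumes labels.csv is plain comma-separated text with a header row containing USR_KEY and ENT columns; label_counts is a hypothetical name.

```bash
#!/usr/bin/env bash
# Sketch: simple counts from labels.csv for cross-checking the audit.
# Illustrative only; not the audit script's own metrics.
# Assumptions: comma-separated, header row with USR_KEY and ENT columns.

# Print "assignments=N users=N entitlements=N" for the given labels file.
label_counts() {
  awk -F, '
    { sub(/\r$/, "") }
    NR == 1 { for (i = 1; i <= NF; i++) { if ($i == "USR_KEY") u = i; if ($i == "ENT") e = i }; next }
    {
      rows++
      if (!($u in users)) { users[$u] = 1; nu++ }      # first time this user is seen
      if (!($e in ents))  { ents[$e] = 1; ne++ }       # first time this entitlement is seen
    }
    END {
      if (!u || !e) { print "USR_KEY or ENT column not found" > "/dev/stderr"; exit 1 }
      printf "assignments=%d users=%d entitlements=%d\n", rows, nu, ne
    }
  ' "$1"
}

# Example (not run automatically):
#   label_counts /data/input/labels.csv
```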

Run Training

Now that you have ingested the data into Autonomous Identity, start the training run.

Training involves two steps: the first step is an initial machine learning run where Autonomous Identity analyzes the data and produces the association rules. In a typical deployment, several million rules can be generated. Each of these rules is mapped from the user attributes to the entitlements and assigned a confidence score.
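
For reference, the standard definitions of support and confidence from association-rule mining are shown below, for a rule A ⇒ e that maps a set of user attribute values A to an entitlement e over N users. Whether Autonomous Identity computes its confidence score exactly this way is an assumption; this page does not give the formula.

```latex
\begin{aligned}
U_A &= \{\, u : A \subseteq \mathrm{attrs}(u) \,\}, \qquad
U_{A,e} = \{\, u \in U_A : e \in \mathrm{ents}(u) \,\} \\
\mathrm{supp}(A \Rightarrow e) &= \frac{\lvert U_{A,e} \rvert}{N}, \qquad
\mathrm{conf}(A \Rightarrow e) = \frac{\lvert U_{A,e} \rvert}{\lvert U_A \rvert}
\end{aligned}
```

For example, if 50 users match every attribute value in a hypothetical A = {department = Finance, title = Analyst} and 45 of them hold e, the rule's confidence is 45/50 = 0.9; with N = 1000 users, its support is 45/1000 = 0.045.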

The initial training run may take time as it goes through the analysis process. Once it completes, it saves the results directly to the Cassandra database.

Start the training process:

Run the training command.

$ analytics train

You should see the following output if the job completed successfully:

Script : /usr/local/lib/python3.6/site-packages/zoran_analytics-0.32.0-py3.6.egg/EGG-INFO/scripts/ztrain.py is successful

Run Predictions and Recommendations

After your initial training run, the association rules are saved to disk. The next phase is to use these rules as a basis for the predictions module.

The predictions module consists of two different processes:

as-is. During the As-Is Prediction process, confidence scores are assigned to the entitlements that users already have. During a pre-processing phase, the labels.csv and features.csv files are combined in a way that appends them to only the access rights that each user has. The as-is process maps the highest confidence score to the highest freqUnion rule for each user-entitlement access. These rules are then displayed in the UI and saved directly to the Cassandra database.

recommendation. During the Recommendations process, confidence scores are assigned to all entitlements. This allows Autonomous Identity to recommend entitlements to users who do not have them. The lowest confidence entitlement is bound by the confidence threshold used in the initial training step. During a pre-processing phase, the labels.csv and features.csv files are combined in a way that appends them to all access rights. The script analyzes each employee who may not have a particular entitlement and predicts the access rights that they should have according to their high confidence score justifications. These rules are then displayed in the UI and saved directly to the Cassandra database.
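
The as-is description above can be read as a two-pass selection per user-entitlement pair: find the best confidence, treat rules within a confidence window of it as equivalent (the window parameter mentioned under "Run as-is Predictions"), and among those keep the rule with the highest freqUnion. The sketch below illustrates that reading only; the input layout, the pick_rules name, and the tie-breaking details are assumptions, not the product's implementation.

```bash
#!/usr/bin/env bash
# Sketch of one reading of the as-is selection step (not the product's code).
# Hypothetical input: headerless CSV lines of
#   user,entitlement,rule,confidence,freq_union
# Output: one chosen line per user-entitlement pair (order not guaranteed).

# Usage: pick_rules FILE [WINDOW]
pick_rules() {
  local file=$1 window=${2:-0}
  awk -F, -v w="$window" '
    NR == FNR {                                  # pass 1: best confidence per pair
      k = $1 FS $2; c = $4 + 0
      if (!(k in best) || c > best[k]) best[k] = c
      next
    }
    {                                            # pass 2: highest freq_union within the window
      k = $1 FS $2; c = $4 + 0; f = $5 + 0
      if (c >= best[k] - w && (!(k in pick) || f > freq[k])) { pick[k] = $0; freq[k] = f }
    }
    END { for (k in pick) print pick[k] }
  ' "$file" "$file"
}
```

With a window of 0 this reduces to picking the single most confident rule; a wider window lets a more frequent, nearly as confident rule win.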

Run as-is Predictions:

In most cases, there is no need to make any changes to the configuration file. However, if you want to modify the analytics, make changes to your analytics_init_config.yml file, and then re-apply it using the analytics apply-template command. In particular, check that you have set the correct parameters for the association rule analysis (for example, minimum confidence score) and for deciding the rules for each employee (for example, the confidence window range over which to consider rules equivalent).

Run the as-is predictions command.

$ analytics predict-as-is

You should see the following output if the job completed successfully:

Script : /usr/local/lib/python3.6/site-packages/zoran_analytics-0.32.0-py3.6.egg/EGG-INFO/scripts/zas_is.py is successful

Run Recommendations

Make any changes to the configuration file, analytics_init_config.yml, to ensure that you have set the correct parameters (for example, minimum confidence score), and then re-apply it using the analytics apply-template command.

Run the recommendations command.

$ analytics predict-recommendation

You should see the following output if the job completed successfully:

Script : /usr/local/lib/python3.6/site-packages/zoran_analytics-0.32.0-py3.6.egg/EGG-INFO/scripts/zrecommend.py is successful
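
The recommendations process described above combines two filters: only entitlements a user does not already have, and only scores at or above the minimum confidence. A sketch of that filtering step, using hypothetical headerless CSV inputs and a hypothetical recommend helper; the real job works on its own internal data.

```bash
#!/usr/bin/env bash
# Sketch of the recommendation filter (not the product's code).
# Hypothetical inputs (headerless CSV):
#   HELD_FILE        user,entitlement              (current assignments)
#   CANDIDATES_FILE  user,entitlement,confidence   (scored candidates)

# Usage: recommend HELD_FILE CANDIDATES_FILE MIN_CONFIDENCE
recommend() {
  local held=$1 candidates=$2 min_conf=$3
  awk -F, -v min="$min_conf" '
    FILENAME == ARGV[1] { has[$1 FS $2] = 1; next }   # remember existing assignments
    { k = $1 FS $2 }
    !(k in has) && $3 + 0 >= min + 0                  # new to the user and confident enough
  ' "$held" "$candidates" | LC_ALL=C sort -t, -k3,3nr  # most confident first
}
```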

Run the Insight Report

Next, run a report on the rules and predictions generated during the training and predictions runs.

Run the Analytics Insight Report:

$ analytics insight

You should see the following output if the job completed successfully:

Script : /usr/local/lib/python3.6/site-packages/zoran_analytics_reporting-0.32.0-py3.6.egg/EGG-INFO/scripts/zinsight.py is successful

The report provides the following insights:

Number of entitlements and assignments received.

Number of entitlements and assignments scored and unscored.

Number of assignments scored >80% and <5%.

Distribution of assignment confidence scores.

List of the high volume, high confidence entitlements.

List of the high volume, low confidence entitlements.

List of users with the most entitlement accesses with an average confidence score of >80% and <5%.

Breakdown of all applications and confidence scores of their assignments.

Supervisors with most employees and confidence scores of their assignments.

Entitlement owners with most entitlements and confidence scores of their assignments.

List of the "Golden Rules", high confidence justifications that apply to a large volume of people.
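
Two of the figures listed above, the counts of assignments scored above 80% and below 5% and the distribution of scores, are easy to recompute if you have assignment scores in a flat file. A sketch assuming a hypothetical headerless CSV of user,entitlement,confidence with confidence on a 0 to 1 scale; score_summary is a hypothetical name, and the ten equal-width buckets are an arbitrary choice.

```bash
#!/usr/bin/env bash
# Sketch: recompute the >80% / <5% counts and a score histogram.
# Hypothetical input: headerless CSV lines of user,entitlement,confidence (0 to 1).

# Usage: score_summary FILE
score_summary() {
  awk -F, '
    { s = $3 + 0; n++ }
    s > 0.80 { high++ }
    s < 0.05 { low++ }
    { b = int(s * 10); if (b > 9) b = 9; bins[b]++ }   # 0.1-wide buckets; 1.0 goes in the top one
    END {
      printf "total=%d high=%d low=%d\n", n, high, low
      for (b = 0; b <= 9; b++) printf "%.1f-%.1f %d\n", b / 10, (b + 1) / 10, bins[b]
    }
  ' "$1"
}
```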

Run Anomaly Report

Autonomous Identity provides a report on any anomalous entitlement assignments that have a low confidence score but are for entitlements that have a high average confidence score. The report's purpose is to identify true anomalies rather than poorly managed entitlements. The script writes the anomaly report to a Cassandra database.

Run the Anomaly report.

$ analytics anomaly

You should see the following output if the job completed successfully:

Script : /usr/local/lib/python3.6/site-packages/zoran_analytics_reporting-0.32.0-py3.6.egg/EGG-INFO/scripts/zanomaly.py is successful

The report provides the following information:

Identifies potential anomalous assignments.

Identifies the number of users who fall below a low confidence score threshold. For example, if 100 people all have low confidence score assignments to the same entitlement, then it is unlikely to be an anomaly. The entitlement is either missing data or the assignment is poorly managed.
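
The rule described in this section, that a low score counts as anomalous only when the entitlement's average score is high, can be sketched in two passes over a list of scored assignments. The input layout, the flag_anomalies name, and the default thresholds (0.2 and 0.7) are hypothetical; the report's actual thresholds are not stated on this page.

```bash
#!/usr/bin/env bash
# Sketch of the anomaly rule (not the product's code): flag a low-confidence
# assignment only if its entitlement has a high average confidence, so an
# entitlement whose assignments are all low is not flagged.
# Hypothetical input: headerless CSV lines of user,entitlement,confidence (0 to 1).

# Usage: flag_anomalies FILE [LOW] [HIGH]
flag_anomalies() {
  local file=$1 low=${2:-0.2} high=${3:-0.7}
  awk -F, -v low="$low" -v high="$high" '
    NR == FNR { sum[$2] += $3; cnt[$2]++; next }        # pass 1: per-entitlement totals
    $3 + 0 < low + 0 && sum[$2] / cnt[$2] >= high + 0   # pass 2: low score, high-average entitlement
  ' "$file" "$file"
}
```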

Publish the Analytics Data

The final step in the analytics process is to populate a large table with the output of the training, predictions, and recommendation runs, including all assignments and the justifications for each assignment. The table data is then pushed to the Cassandra backend.

Publish the data to the backend.

$ analytics publish

You should see the following output if the job completed successfully:

Script : /usr/local/lib/python3.6/site-packages/zoran_etl-0.32.0-py3.6.egg/EGG-INFO/scripts/zload.py is successful

Create the Analytics UI Config File

Once the analytics pipeline has completed, you can configure the UI using the analytics create-ui-config command if desired.

On the target node, run the analytics create-ui-config command to generate the ui_config.json file in the /data/conf/ directory. The file sets what is displayed in the Autonomous Identity UI.

$ analytics create-ui-config

In most cases, you can use the file as-is. If you want to make changes, edit the ui_config.json file and save it to the /data/conf/ directory.

Apply the file.

$ analytics apply-ui-config
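
If you edit ui_config.json by hand, a syntax error would otherwise only surface when you apply it. A quick pre-check, assuming python3 is available where you edit the file: Python's standard json.tool module parses the file and exits non-zero on invalid JSON. It checks syntax only, not whether the settings are ones Autonomous Identity accepts. json_ok is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: check that an edited ui_config.json is well-formed JSON before
# applying it. Syntax only; it does not validate the UI settings themselves.
# Assumption: python3 is installed.

# Succeeds if FILE parses as JSON; the parser error goes to stderr otherwise.
json_ok() {
  python3 -m json.tool "$1" >/dev/null
}

# Example (not run automatically):
#   json_ok /data/conf/ui_config.json && analytics apply-ui-config
```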

Run Full Pipeline

You can run the full analytics pipeline with a single command using the run-pipeline command. Make sure your data is in the correct directory, /data, and that any UI configuration changes are set in the ui_config.json file in the /data/conf/ directory.

Run the full pipeline.

$ analytics run-pipeline

You should see the following output if the job completed successfully:

Script : init.py is successful ****************************************************************************************************************** Pipe Line Ends

The run-pipeline command runs the following jobs in order:

analytics ingest

analytics validate

analytics audit

analytics train

analytics predict-as-is

analytics predict-recommendation

analytics insight

analytics anomaly

analytics publish

analytics create-ui-config

analytics apply-ui-config