Install a Single Node Air-Gap Target

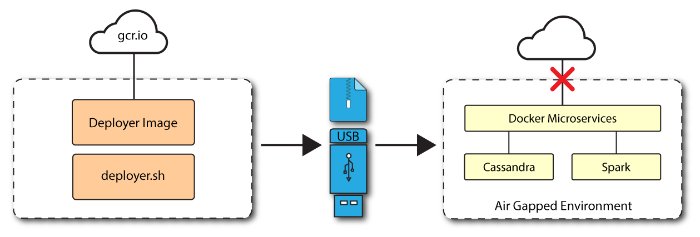

This chapter presents instructions on deploying Autonomous Identity on a single-node target machine that has no Internet connectivity. This type of configuration, called an air-gap or offline deployment, provides enhanced security by isolating the target machine from outside Internet or network access.

The air-gap installation is similar to that of the single-node target deployment with Internet connectivity, except that the image and deployer script must be saved on portable media, such as a USB drive or portable hard drive, and copied to the air-gapped target machine.

Figure 9: A single-node air-gapped target deployment.

Let's deploy Autonomous Identity on a single-node air-gapped target on CentOS 7. The following are prerequisites:

Operating System. The target machine requires CentOS 7. The deployer machine can use any operating system as long as Docker is installed. For this guide, we use CentOS 7 as its base operating system.

Disk Space Requirements. Make sure you have enough free disk space on the deployer machine before running the deployer.sh commands. We recommend at least a 40GB partition with 14GB used and 27GB free after running the commands.

Default Shell. The default shell for the autoid user must be bash.

Deployment Requirements. Autonomous Identity provides a Docker image that creates a deployer.sh script. The script downloads additional images necessary for the installation. To download the deployment images, you must first obtain a registry key to log into the ForgeRock Google Cloud Registry (gcr.io). The registry key is only available to ForgeRock Autonomous Identity customers. For specific instructions on obtaining the registry key, see How To Configure Service Credentials (Push Auth, Docker) in Backstage.
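The disk space and default shell prerequisites above can be checked before you start. The following is a minimal sketch, not part of the product: the function names has_min_free_gb and login_shell_is_bash are hypothetical, and the thresholds you pass in are your own choice.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Autonomous Identity): succeeds when the
# filesystem that holds DIR has at least MIN_GB gigabytes available.
has_min_free_gb() {
  local dir="$1" min_gb="$2" avail_kb
  # df -P prints POSIX-format output; line 2, column 4 is available 1K blocks.
  avail_kb=$(df -Pk "$dir" | awk 'NR == 2 { print $4 }')
  [ "$avail_kb" -ge $(( min_gb * 1024 * 1024 )) ]
}

# Hypothetical helper: succeeds when the login shell recorded for USER is bash.
login_shell_is_bash() {
  local shell
  shell=$(getent passwd "$1" | cut -d: -f7)
  [ "${shell##*/}" = "bash" ]
}

# Example usage:
#   has_min_free_gb "$HOME" 27 || echo "not enough free space" >&2
#   login_shell_is_bash autoid || echo "default shell is not bash" >&2
```

Both functions only read system state, so they are safe to run repeatedly on the deployer or the target.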

Set Up the Deployer Machine

Set up the deployer on an Internet-connected machine.

The install assumes that you have CentOS 7 as your operating system. Check your CentOS 7 version.

$ sudo cat /etc/centos-release

Set the user for the target machine to a username of your choice. For example, autoid.

$ sudo adduser autoid

Set the password for the user you created in the previous step.

$ sudo passwd autoid

Configure the user for passwordless sudo.

$ echo "autoid ALL=(ALL) NOPASSWD:ALL" | sudo tee /etc/sudoers.d/autoid

Add administrator privileges to the user.

$ sudo usermod -aG wheel autoid

Change to the user account.

$ su - autoid

Install the yum-utils package on the deployer machine. yum-utils is a collection of utilities for managing Yum repositories and packages, including the yum-config-manager command.

$ sudo yum install -y yum-utils

Create the installation directory. You can use any install directory for your system as long as you run the deployer.sh script from there. The disk volume that holds the install directory must have at least 8GB of free space for the installation.

$ mkdir ~/autoid-config
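A malformed file under /etc/sudoers.d can break sudo for every user, so it can be worth syntax-checking the passwordless sudo rule before installing it. The sketch below assumes visudo is available, as it normally is wherever sudo is installed. The function names sudoers_entry and install_sudoers_entry are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the passwordless sudo rule for USER.
sudoers_entry() {
  printf '%s ALL=(ALL) NOPASSWD:ALL\n' "$1"
}

# Hypothetical helper: write the rule to a scratch file, check its syntax with
# visudo, and install it under /etc/sudoers.d only if the check passes.
install_sudoers_entry() {
  local user="$1" tmp status=0
  tmp=$(mktemp) || return 1
  sudoers_entry "$user" > "$tmp"
  sudo visudo -cf "$tmp" \
    && sudo install -m 0440 -o root -g root "$tmp" "/etc/sudoers.d/$user" \
    || status=1
  rm -f "$tmp"
  return "$status"
}

# Example usage:
#   install_sudoers_entry autoid
```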

Install Docker on the Deployer Machine

On the deployer machine, set up the Docker-CE repository.

$ sudo yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

Install the latest version of Docker CE, the command-line interface, and containerd.io, the container runtime.

$ sudo yum install -y docker-ce docker-ce-cli containerd.io
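After the packages are installed, a quick check that the expected commands are on the PATH can catch a failed install early. This is a minimal sketch, and the function name missing_commands is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print each named command that is not on PATH and
# succeed only if none are missing.
missing_commands() {
  local cmd missing=0
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "$cmd"
      missing=1
    fi
  done
  [ "$missing" -eq 0 ]
}

# Example usage on the deployer machine:
#   missing_commands docker containerd yum-config-manager
```

Finding the docker binary does not by itself confirm that the Docker daemon is running. On a systemd host such as CentOS 7, that is typically checked with systemctl is-active docker.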

Set Up SSH on the Deployer

SSH is not needed to connect the deployer to the target node, because the machines are isolated from one another. You still need SSH on the deployer so that it can communicate with itself.

On the deployer machine, run ssh-keygen to generate an RSA keypair. When prompted, press Enter to accept the default filename, and then enter a password to protect your private key.

$ ssh-keygen -t rsa -C "autoid"

The public and private RSA key pair is stored in home-directory/.ssh/id_rsa and home-directory/.ssh/id_rsa.pub.

Copy the SSH key to the ~/autoid-config directory.

$ cp ~/.ssh/id_rsa ~/autoid-config

Change the permissions on the file.

$ chmod 400 ~/autoid-config/id_rsa
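The copy and permission steps above can be combined and verified in one place. The following sketch assumes GNU coreutils (stat -c), which CentOS 7 provides. The function name stage_private_key is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy a private key into DEST_DIR, make it readable by
# the owner only, and confirm the resulting mode is 400.
stage_private_key() {
  local src="$1" dest_dir="$2" dest
  dest="$dest_dir/$(basename "$src")"
  mkdir -p "$dest_dir" || return 1
  cp "$src" "$dest" || return 1
  chmod 400 "$dest" || return 1
  # stat -c is the GNU coreutils form available on CentOS 7.
  [ "$(stat -c '%a' "$dest")" = "400" ]
}

# Example usage:
#   stage_private_key ~/.ssh/id_rsa ~/autoid-config
```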

Prepare the Tar File

Run the following steps on an Internet-connected host machine:

On the deployer machine, change to the installation directory.

$ cd ~/autoid-config/

Log in to the ForgeRock Google Cloud Registry (gcr.io) using the registry key. The registry key is only available to ForgeRock Autonomous Identity customers. For specific instructions on obtaining the registry key, see How To Configure Service Credentials (Push Auth, Docker) in Backstage.

$ docker login -u _json_key -p "$(cat autoid_registry_key.json)" https://gcr.io/forgerock-autoid

Run the create-template command to generate the deployer.sh script wrapper. The command sets the configuration directory on the target node to /config. The --user parameter eliminates the need to use sudo while editing the hosts file and other configuration files.

$ docker run --user=`id -u` -v ~/autoid-config:/config -it gcr.io/forgerock-autoid/deployer:2020.6.4 create-template

Make the script executable.

$ chmod +x deployer.sh

Download the Docker images. This step downloads software dependencies needed for the deployment and places them in the autoid-packages directory.

$ sudo ./deployer.sh download-images

Create a tar file containing all of the Autonomous Identity binaries. The archive name must come first after the czf options, followed by the files to include.

$ tar czf autoid-packages.tgz deployer.sh autoid-packages/*

Copy the autoid-packages.tgz file to a USB drive or portable hard drive.
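Because the archive travels on removable media, recording a checksum next to it lets you confirm on the air-gapped side that the copy is intact. The sketch below is one way to do that, assuming sha256sum from GNU coreutils is available on both machines. The function names make_airgap_bundle and verify_airgap_bundle and the .sha256 file name are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: archive deployer.sh and autoid-packages/ from DIR into
# DIR/autoid-packages.tgz and write a SHA-256 checksum file next to it.
make_airgap_bundle() {
  local dir="$1"
  (
    cd "$dir" || exit 1
    # The argument right after "f" is the archive to create; the remaining
    # arguments are the inputs. The whole directory is archived here.
    tar czf autoid-packages.tgz deployer.sh autoid-packages || exit 1
    sha256sum autoid-packages.tgz > autoid-packages.tgz.sha256
  )
}

# Hypothetical helper: verify the checksum after both files are copied to DIR.
verify_airgap_bundle() {
  ( cd "$1" && sha256sum -c autoid-packages.tgz.sha256 >/dev/null )
}

# Example usage:
#   make_airgap_bundle ~/autoid-config      # on the deployer machine
#   verify_airgap_bundle /path/to/copy      # on the air-gapped target
```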

Install from the Air-Gap Target

Before you begin, make sure you have CentOS 7 installed on your air-gapped target machine.

Create the ~/autoid-config directory if you haven't already.

$ mkdir ~/autoid-config

Copy the autoid-packages.tgz tar file from the portable storage device.

Unpack the tar file.

$ tar xf autoid-packages.tgz -C ~/autoid-config

On the air-gap host node, copy the SSH key to the ~/autoid-config directory.

$ cp ~/.ssh/id_rsa ~/autoid-config

Change the permissions on the file.

$ chmod 400 ~/autoid-config/id_rsa

Change to the configuration directory.

$ cd ~/autoid-config

Install Docker.

$ sudo ./deployer.sh install-docker

Log out and back in.

Change to the configuration directory.

$ cd ~/autoid-config

Import the deployer image.

$ ./deployer.sh import-deployer

Create the configuration template using the create-template command. This command creates a configuration file, ansible.cfg.

$ ./deployer.sh create-template

Open a text editor and edit the ansible.cfg file to set up the target machine user and SSH private key file location on the target node. Make sure that the remote_user exists on the target node and that the deployer machine can ssh to the target node as the user specified in the id_rsa file.

    [defaults]
    host_key_checking = False
    remote_user = autoid
    private_key_file = id_rsa

Open a text editor and enter the target host's private IP addresses in the ~/autoid-config/hosts file. The following is an example of the ~/autoid-config/hosts file:

    [docker-managers]
    10.128.0.34

    [docker-workers]
    10.128.0.34

    [docker:children]
    docker-managers
    docker-workers

    [cassandra-seeds]
    10.128.0.34

    [cassandra-workers]
    10.128.0.34

    [spark-master]
    10.128.0.34

    [spark-workers]
    10.128.0.34

    [analytics]
    10.128.0.34

An air-gap deployment has no external IP addresses, but you may still need to define a mapping if your internal IP address differs from an external IP, say in a virtual air-gapped configuration.

If the IP addresses are the same, you can skip this step.

On the target machine, add the private_ip_address_mapping property in the ~/autoid-config/vars.yml file. Make sure the values are within double quotes. The key should not be in double quotes and should have two spaces preceding the IP address.

    private_ip_address_mapping:
      34.70.190.144: "10.128.0.34"

Edit other properties in the ~/autoid-config/vars.yml file, specific to your deployment, such as the following:

Domain name. For information, see Customize the Domain and Namespace.

UI Theme. Autonomous Identity provides a dark theme mode for its UI. Set the enable_dark_theme property to true to enable it.

Session Duration. The default session duration is set to 30 minutes. You can alter this value by setting the jwt_expiry property to a time value in minutes of your choice.

SSO. Autonomous Identity provides a single sign-on (SSO) feature that you can configure with an OIDC identity provider (IdP). For information, see Set Up Single Sign-On.

The default Autonomous Identity URL will be: https://autoid-ui.forgerock.com. To customize your domain name and target environment, see Customize the Domain and Namespace.

Set the Autonomous Identity passwords, located at ~/autoid-config/vault.yml.

Note: Do not include the special characters & or $ in vault.yml passwords, as they will cause the deployer process to fail.

    configuration_service_vault:
      basic_auth_password: Welcome123

    openldap_vault:
      openldap_password: Welcome123

    cassandra_vault:
      cassandra_password: Welcome123
      cassandra_admin_password: Welcome123

Encrypt the vault file that stores the Autonomous Identity passwords, located at ~/autoid-config/vault.yml. The encrypted passwords will be saved to /config/.autoid_vault_password. The /config/ mount is internal to the deployer container.

$ ./deployer.sh encrypt-vault

Run the deployment.

$ ./deployer.sh run
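Two of the requirements in this section are easy to check mechanically before the run: that the configuration files named above exist, and that no password in the plaintext vault.yml contains & or $. The sketch below illustrates both checks. The function names config_files_present and vault_passwords_ok are hypothetical, and the password check is only meaningful before you run encrypt-vault, while vault.yml is still plain text.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if every file the steps above create or
# edit is present in CONFIG_DIR; report any that are missing on stderr.
config_files_present() {
  local dir="$1" f missing=0
  for f in id_rsa ansible.cfg hosts vars.yml vault.yml; do
    if [ ! -f "$dir/$f" ]; then
      echo "missing: $dir/$f" >&2
      missing=1
    fi
  done
  [ "$missing" -eq 0 ]
}

# Hypothetical helper: succeed only if no password line in the plaintext
# vault file contains & or $. Run it before encrypt-vault.
vault_passwords_ok() {
  ! grep 'password' "$1" | grep -q '[&$]'
}

# Example usage:
#   config_files_present ~/autoid-config && vault_passwords_ok ~/autoid-config/vault.yml
```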

Access the Dashboard

You can now access the Autonomous Identity console UI.

Open a browser, and point it to https://autoid-ui.forgerock.com/ (or your customized URL: https://myid-ui.abc.com).

Log in as a test user: bob.rodgers@forgerock.com. Enter the password: Welcome123.

Check Apache Cassandra

On the target node, check the status of Apache Cassandra.

$ /opt/autoid/apache-cassandra-3.11.2/bin/nodetool status

An example output is as follows:

    Datacenter: datacenter1
    =======================
    Status=Up/Down
    |/ State=Normal/Leaving/Joining/Moving
    --  Address        Load      Tokens  Owns (effective)  Host ID                             Rack
    UN  34.70.190.144  1.33 MiB  256     100.0%            a10a91a4-96e83dd-85a2-4f90d19224d9  rack1
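In this output, the first column of each node row combines status (U or D) and state (N, L, J, or M), so a healthy node reads UN. The following sketch turns that reading into a pass or fail check. The function name all_nodes_up_normal is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `nodetool status` output on stdin and succeed only
# if at least one node row is present and every node row starts with UN.
all_nodes_up_normal() {
  awk '
    # Node rows begin with a status letter (U/D) and a state letter (N/L/J/M).
    /^[UD][NLJM][[:space:]]/ { total++; if ($1 != "UN") bad++ }
    END { exit (total > 0 && bad == 0) ? 0 : 1 }
  '
}

# Example usage on the target node:
#   /opt/autoid/apache-cassandra-3.11.2/bin/nodetool status | all_nodes_up_normal
```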

Check Apache Spark

SSH to the target node and open the Spark dashboard using the bundled text-mode web browser.

$ elinks http://localhost:8080

You should see the Spark Master status as ALIVE and the worker(s) with State ALIVE.
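If you prefer a scripted check over the text-mode browser, the master status can be read from a machine-readable summary. The sketch below rests on two assumptions that this guide does not state: that the Spark standalone master serves a JSON summary at /json on the dashboard port, and that curl is installed on the target node. The function name spark_master_status is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read the master's JSON summary on stdin and print the
# value of its "status" field (for example ALIVE).
spark_master_status() {
  sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([A-Z]*\)".*/\1/p' | head -n 1
}

# Example usage on the target node (assumes curl and a /json endpoint):
#   curl -s http://localhost:8080/json | spark_master_status
```

If the endpoint is not available in your deployment, the elinks check above remains the documented way to confirm the status.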

Access Self-Service

The self-service feature lets Autonomous Identity users change their own passwords.

Open a browser and point it to: https://autoid-selfservice.forgerock.com/.

Run the Analytics

If the previous steps all check out successfully, you can start an analytics pipeline run, where association rules, confidence scores, predictions, and recommendations are determined. Autonomous Identity provides a small demo data set that you can run the analytics pipeline on. For production runs, prepare your company's dataset as outlined in Data Preparation.

SSH to the target node.

Check that the analytics service is running.

$ docker ps | grep analytics

If the previous step returns blank, start the analytics service. For more information, see Data Preparation.

$ docker start analytics

Once you have verified that the analytics service has started, you can run the analytics commands. For more information, see Run the Analytics Pipeline.
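The check-then-start steps above can be combined so that the container is started only when it is not already running. This is a minimal sketch that assumes the container is named exactly analytics, as the docker start command above implies. The function name is_listed is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if NAME appears as a whole line on stdin.
# Matching whole lines avoids false positives from similarly named containers.
is_listed() {
  grep -Fxq -- "$1"
}

# Example usage on the target node:
#   docker ps --format '{{.Names}}' | is_listed analytics || docker start analytics
```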

If your analytics pipeline run completes successfully, you have finished your Autonomous Identity installation.