Data storage

DS directory servers store data in backends. A backend is a private server repository implemented in memory, as an LDIF file, or as an embedded database.

Embedded database backends have these characteristics:

- Suitable for large numbers of entries

When creating a database backend, you choose the backend type. DS directory servers use JE backends for local data.

The JE backend type is implemented using B-tree data structures. It stores data as key-value pairs, which is different from the model used by relational databases.

JE backends are designed to hold hundreds of millions, or even billions of LDAP entries.

- Fully managed by DS servers

Let the server manage its backends and their database files.

By default, backend database files are located under the opendj/db directory.

Do not compress, tamper with, or otherwise alter backend database files directly, unless specifically instructed to do so by a qualified technical support engineer. External changes to backend database files can render them unusable by the server.

If you use snapshot technology for backup, read Back up using snapshots and Restore from a snapshot.

DS servers provide the dsbackup command for backing up and restoring database backends. For details, refer to Backup and restore.

- Self-cleaning

A JE backend stores data on disk using append-only log files with names like number.jdb. The JE backend writes updates to the highest-numbered log file. The log files grow until they reach a specified size (default: 1 GB). When the current log file reaches the specified size, the JE backend creates a new log file.

To avoid an endless increase in database size on disk, JE backends clean their log files in the background. A cleaner thread copies active records to new log files. Log files that no longer contain active records are deleted.

Due to the cleanup processes, JE backends can actively write to disk, even when there are no pending client or replication operations.

- Configurable I/O behavior

By default, JE backends let the operating system potentially cache data for a period of time before flushing the data to disk. This setting trades full durability with higher disk I/O for good performance with lower disk I/O.

With this setting, it is possible to lose the most recent updates that were not yet written to disk, in the event of an underlying OS or hardware failure.

If necessary, you can modify this behavior by changing the advanced setting, db-durability, using the dsconfig set-backend-prop command.

- Automatic recovery

When a JE backend is opened, it recovers by recreating its B-tree structure from its log files.

This is a normal process. It lets the backend recover after an orderly shutdown or after a crash.

- Automatic verification

JE backends run checksum verification periodically on the database log files.

If the verification process detects backend database corruption, then the server logs an error message and takes the backend offline. If this happens, restore the corrupted backend from backup so that it can be used again.

By default, the verification runs every night at midnight local time. If necessary, you can change this behavior by adjusting the advanced settings, db-run-log-verifier and db-log-verifier-schedule, using the dsconfig set-backend-prop command.

- Encryption support

JE backends support encryption for data confidentiality.

For details, refer to Data encryption.
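The append-only log files described under Self-cleaning can be observed without touching them. The following is a minimal sketch, not a DS tool: the helper name jdb_usage is hypothetical, and the directory you pass is assumed to be wherever your deployment keeps a backend's .jdb files. It only reads file sizes:

```shell
# Hypothetical helper: count *.jdb files in a directory and total their sizes.
# Read-only; it never modifies the files.
#   $1 = directory assumed to hold the backend's log files
jdb_usage() {
  dir=$1
  count=0
  total=0
  for f in "$dir"/*.jdb; do
    [ -f "$f" ] || continue          # skip when the glob matches nothing
    size=$(wc -c < "$f")             # size in bytes
    count=$((count + 1))
    total=$((total + size))
  done
  echo "$count $total"               # prints: <file count> <total bytes>
}
```

Running it at intervals gives a rough picture of log growth and cleaning, since the count and total change as the backend adds new log files and the cleaner deletes old ones.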

Depending on the setup profiles used at installation time, DS servers have backends with the following default settings:

| Backend | Type | Optional?(1) | Replicated? | Part of Backup? | Details |
|---|---|---|---|---|---|
| adminRoot | LDIF | No | If enabled | If enabled | Symmetric keys for (deprecated) reversible password storage schemes. Base DN: Base directory: |
| amCts | JE | Yes | Yes | Yes | AM core token store (CTS) data. More information: Install DS for AM CTS. Base DN: Base directory: |
| amIdentityStore | JE | Yes | Yes | Yes | AM identities. More information: Install DS for platform identities. Base DN: Base directory: |
| cfgStore | JE | Yes | Yes | Yes | AM configuration, policy, and other data. More information: Install DS for AM configuration. Base DN: Base directory: |
| changelogDb | Changelog | Yes(2) | N/A | No | Change data for replication and change notifications. More information: Changelog for notifications. Base DN: Base directory: |
| config | Config | No | N/A | No | File-based representation of this server’s configuration. Do not edit Base DN: Base directory: |
| dsEvaluation | JE | Yes | Yes | Yes | Example.com sample data. More information: Install DS for evaluation. Base DN: Base directory: |
| idmRepo | JE | Yes | Yes | Yes | IDM repository data. More information: Install DS as an IDM repository. Base DN: Base directory: |
| monitor | Monitor | No | N/A | N/A | Single entry; volatile monitoring metrics maintained in memory since server startup. More information: LDAP-based monitoring. Base DN: Base directory: N/A |
| monitorUser | LDIF | Yes | Yes | Yes | Single entry; default monitor user account. Base DN: Base directory: |
| rootUser | LDIF | No(3) | No | Yes | Single entry; default directory superuser account. Base DN: Base directory: |
| root DSE | Root DSE | No | N/A | N/A | Single entry describing server capabilities. Use Base DN: Base directory: N/A |
| schema | Schema | No | Yes | Yes | Single entry listing LDAP schema definitions. More information: LDAP schema. Base DN: Base directory: |
| tasks | Task | No | N/A | Yes | Scheduled tasks for this server. Use the Base DN: Base directory: |
| userData | JE | Yes | Yes | Yes | User entries imported from the LDIF you provide. More information: Install DS for user data. Base directory: |

(1) Optional backends depend on the setup choices.

(2) The changelog backend is mandatory for servers with a replication server role.

(3) You must create a superuser account at setup time. You may choose to replace it later. For details, refer to Use a non-default superuser account.

Create a backend

Separate backends let you use different configuration settings for data with different access patterns. This flexibility comes at the price of more administrative and maintenance tasks, so don’t create more backends than you need. When you create a backend:

Before creating a backend whose base DN is a child of an existing backend, also read Subordinate backends carefully. Subordinate backends include important requirements and limitations.

When you create a new backend on a replicated directory server, let the server replicate the new data:

- Configure the new backend.

The following example creates a database backend for Example.org data. The backend relies on a JE database for data storage and indexing:

$ dsconfig \
 create-backend \
 --hostname localhost \
 --port 4444 \
 --bindDn uid=admin \
 --bindPassword password \
 --backend-name exampleOrgBackend \
 --type je \
 --set enabled:true \
 --set base-dn:dc=example,dc=org \
 --usePkcs12TrustStore /path/to/opendj/config/keystore \
 --trustStorePassword:file /path/to/opendj/config/keystore.pin \
 --no-prompt

When you create a new backend using the dsconfig command, DS directory servers create the following indexes automatically:

| Index | Approx. | Equality | Ordering | Presence | Substring | Entry Limit |
|---|---|---|---|---|---|---|
| aci | - | - | - | Yes | - | 4000 |
| dn2id | Non-configurable internal index | | | | | |
| ds-sync-conflict | - | Yes | - | - | - | 4000 |
| ds-sync-hist | - | - | Yes | - | - | 4000 |
| entryUUID | - | Yes | - | - | - | 4000 |
| id2children | Non-configurable internal index | | | | | |
| id2subtree | Non-configurable internal index | | | | | |
| objectClass | - | Yes | - | - | - | 4000 |

- Verify that replication is enabled:

$ dsconfig \
 get-synchronization-provider-prop \
 --provider-name "Multimaster Synchronization" \
 --property enabled \
 --hostname localhost \
 --port 4444 \
 --bindDN uid=admin \
 --bindPassword password \
 --usePkcs12TrustStore /path/to/opendj/config/keystore \
 --trustStorePassword:file /path/to/opendj/config/keystore.pin \
 --no-prompt
Property : Value(s)
---------:---------
enabled  : true

If replication should be enabled but is not, use dsconfig set-synchronization-provider-prop --set enabled:true to enable it.

- Let the server replicate the base DN of the new backend:

$ dsconfig \
 create-replication-domain \
 --hostname localhost \
 --port 4444 \
 --bindDN uid=admin \
 --bindPassword password \
 --provider-name "Multimaster Synchronization" \
 --domain-name dc=example,dc=org \
 --type generic \
 --set enabled:true \
 --set base-dn:dc=example,dc=org \
 --usePkcs12TrustStore /path/to/opendj/config/keystore \
 --trustStorePassword:file /path/to/opendj/config/keystore.pin \
 --no-prompt

- If you have existing data for the backend, follow the appropriate procedure to initialize replication.

For details, refer to Manual initialization.

If you must temporarily disable replication for the backend, take care to avoid losing changes. For details, refer to Disable replication.

Import and export

The following procedures demonstrate how to import and export LDIF data.

For details on creating custom sample LDIF to import, refer to Generate test data.

Import LDIF

If you are initializing replicas by importing LDIF, refer to Initialize from LDIF for context.

Perform either of the following steps to import dc=example,dc=com data into the dsEvaluation backend:

- To import offline, shut down the server before you run the import-ldif command:

$ stop-ds
$ import-ldif \
 --offline \
 --backendId dsEvaluation \
 --includeBranch dc=example,dc=com \
 --ldifFile example.ldif

- To import online, schedule a task:

$ start-ds
$ import-ldif \
 --hostname localhost \
 --port 4444 \
 --bindDn uid=admin \
 --bindPassword password \
 --backendId dsEvaluation \
 --includeBranch dc=example,dc=com \
 --ldifFile example.ldif \
 --usePkcs12TrustStore /path/to/opendj/config/keystore \
 --trustStorePassword:file /path/to/opendj/config/keystore.pin

You can schedule the import task to start at a particular time using the --start option.

Export LDIF

Perform either of the following steps to export dc=example,dc=com data from the dsEvaluation backend:

- To export offline, shut down the server before you run the export-ldif command:

$ stop-ds
$ export-ldif \
 --offline \
 --backendId dsEvaluation \
 --includeBranch dc=example,dc=com \
 --ldifFile backup.ldif

- To export online, schedule a task:

$ export-ldif \
 --hostname localhost \
 --port 4444 \
 --bindDn uid=admin \
 --bindPassword password \
 --backendId dsEvaluation \
 --includeBranch dc=example,dc=com \
 --ldifFile backup.ldif \
 --start 0 \
 --usePkcs12TrustStore /path/to/opendj/config/keystore \
 --trustStorePassword:file /path/to/opendj/config/keystore.pin

The --start 0 option tells the directory server to start the export task immediately.

You can specify a time for the task to start using the format yyyymmddHHMMSS. For example, 20250101043000 specifies a start time of 4:30 AM on January 1, 2025.

If the server is not running at the time specified, it attempts to perform the task after it restarts.
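As a small illustration of the yyyymmddHHMMSS format, the following sketch assembles a start time from its parts. The function name task_start_time is hypothetical and not part of DS; it only formats digits and does not validate that the date exists:

```shell
# Hypothetical helper: build a yyyymmddHHMMSS string for the --start option.
# Pass plain integers without leading zeros (for example 1, not 01).
#   $1 = year, $2 = month, $3 = day, $4 = hour (0-23), $5 = minute, $6 = second
task_start_time() {
  printf '%04d%02d%02d%02d%02d%02d\n' "$1" "$2" "$3" "$4" "$5" "$6"
}

task_start_time 2025 1 1 4 30 0   # prints 20250101043000
```

The result could then be passed to a scheduled task, for example --start "$(task_start_time 2025 1 1 4 30 0)".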

Subordinate backends

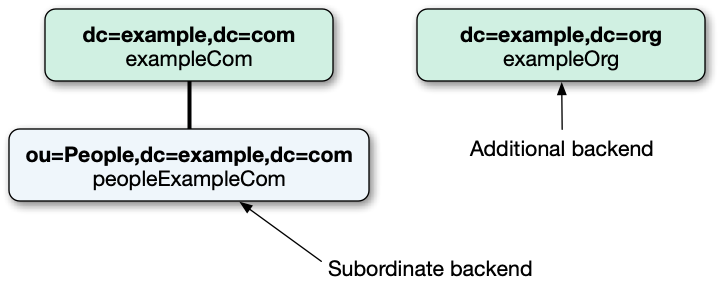

A subordinate backend has a base DN making it a child of an existing backend.

For example, a DS server has an exampleCom backend for dc=example,dc=com,

a subordinate peopleExampleCom backend for ou=People,dc=example,dc=com, and an exampleOrg backend:

(Technically, all DS backends are subordinate to the root DSE backend whose base DN is the empty string. You don’t manage the root DSE, however.)
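One way to picture this layout is that each entry belongs to the backend whose base DN is its closest ancestor. The sketch below models that idea only; it is not how DS is implemented. The function name backend_for_dn is hypothetical, the dc=example,dc=org base DN for exampleOrg is an assumption, and DNs are compared as raw strings without the normalization a real server applies:

```shell
# Illustrative only: pick the backend with the longest base DN that is
# equal to, or an ancestor of, the DN given as $1.
backend_for_dn() {
  dn=$1
  best=""
  best_len=0
  # Each line below: backend name, a space, then its base DN.
  while read -r name base; do
    case "$dn" in
      "$base"|*,"$base")
        if [ "${#base}" -gt "$best_len" ]; then
          best=$name
          best_len=${#base}
        fi
        ;;
    esac
  done <<'EOF'
exampleCom dc=example,dc=com
peopleExampleCom ou=People,dc=example,dc=com
exampleOrg dc=example,dc=org
EOF
  echo "$best"
}

backend_for_dn "uid=bjensen,ou=People,dc=example,dc=com"   # prints peopleExampleCom
backend_for_dn "ou=Groups,dc=example,dc=com"               # prints exampleCom
```

A subtree search based at dc=example,dc=com therefore spans two backends in this model, which is the situation the limitations that follow describe.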

Subordinate backends include important requirements and limitations:

- DS supports paging, sorting, and VLV search results for searches in a single backend. DS doesn’t support paging, sorting, and VLV searches traversing backends.

Client applications know base DNs, however, not backends. Use of subordinate backends can lead to unexpected, logically wrong search results when the scope extends from the parent to the child backend.

- Like other backends, subordinate backends need backup tasks and restore procedures, and must be included in disaster recovery plans.

Unlike other backends, you must perform all tasks and procedures for subordinate backends at the same time you perform them for parent backends. This ensures the replicated data remains aligned.

- Subordinate backends don’t reduce network traffic between replication servers, and the replication changelog doesn’t use less disk space. Instead, the servers use slightly more system resources in file descriptors and threads for each added backend.

If you migrate to DS from another directory service, don’t use subordinate backends simply because you used them in the other directory service. Try concatenating the data and using a single backend instead:

If you accept the requirements and limitations and the deployment appears to require a subordinate backend, contact ForgeRock to validate your plans before deploying in production.

To set up a subordinate backend, stop the servers and follow this example for each server:

- Create an exampleCom backend with a dc=example,dc=com replication domain.

This backend holds all Example.com data except ou=People,dc=example,dc=com. The backend base-dn must match the replication domain base-dn:

# Create a backend for the data not under the subordinate base DN:
$ dsconfig \
 create-backend \
 --backend-name exampleCom \
 --type je \
 --set enabled:true \
 --set base-dn:dc=example,dc=com \
 --offline \
 --no-prompt

# Let the server replicate the base DN of the new backend:
$ dsconfig \
 create-replication-domain \
 --provider-name "Multimaster Synchronization" \
 --domain-name dc=example,dc=com \
 --type generic \
 --set enabled:true \
 --set base-dn:dc=example,dc=com \
 --offline \
 --no-prompt

# Import data not under the subordinate base DN:
$ import-ldif \
 --backendId exampleCom \
 --excludeBranch ou=People,dc=example,dc=com \
 --ldifFile example.ldif \
 --offline

- Create a peopleExampleCom backend with an ou=People,dc=example,dc=com replication domain.

This backend holds only Example.com data for ou=People. The backend base-dn must match the replication domain base-dn:

# Create a backend for the data under the subordinate base DN:
$ dsconfig \
 create-backend \
 --backend-name peopleExampleCom \
 --type je \
 --set enabled:true \
 --set base-dn:ou=People,dc=example,dc=com \
 --offline \
 --no-prompt

# Let the server replicate the base DN of the new backend:
$ dsconfig \
 create-replication-domain \
 --provider-name "Multimaster Synchronization" \
 --domain-name ou=People,dc=example,dc=com \
 --type generic \
 --set enabled:true \
 --set base-dn:ou=People,dc=example,dc=com \
 --offline \
 --no-prompt

# Import data under the subordinate base DN:
$ import-ldif \
 --backendId peopleExampleCom \
 --includeBranch ou=People,dc=example,dc=com \
 --ldifFile example.ldif \
 --offline

- Start the server.

Disk space thresholds

Directory data growth depends on applications that use the directory. When directory applications add more data than they delete, the database backend grows until it fills the available disk space. The system can end up in an unrecoverable state if no disk space is available.

Database backends therefore have advanced properties, disk-low-threshold and disk-full-threshold.

When available disk space falls below disk-low-threshold, the directory server only allows updates from users and applications that have the bypass-lockdown privilege.

When available space falls below disk-full-threshold, the directory server stops allowing updates, instead returning an UNWILLING_TO_PERFORM error to each update request.

If growth across the directory service tends to happen quickly, set the thresholds higher than the defaults to allow more time to react when growth threatens to fill the disk.
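The two thresholds define three states. The following sketch models only the decision described above; the function name update_policy and its return strings are hypothetical, and "falls below" is taken to mean strictly less than the threshold:

```shell
# Illustrative only: classify update handling from free space and thresholds.
# All three arguments are whole numbers in the same unit (for example, GB).
#   $1 = available disk space, $2 = disk-low-threshold, $3 = disk-full-threshold
update_policy() {
  free=$1 low=$2 full=$3
  if [ "$free" -lt "$full" ]; then
    echo "reject-all-updates"       # server returns UNWILLING_TO_PERFORM
  elif [ "$free" -lt "$low" ]; then
    echo "bypass-lockdown-only"     # only privileged users can update
  else
    echo "allow-updates"
  fi
}

update_policy 7 10 5   # prints bypass-lockdown-only
```

The gap between the two thresholds is the window in which privileged administrators can still make changes, so it is worth keeping disk-low-threshold comfortably above disk-full-threshold.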

The following example sets disk-low-threshold to 10 GB and disk-full-threshold to 5 GB for the dsEvaluation backend:

$ dsconfig \
 set-backend-prop \
 --hostname localhost \
 --port 4444 \
 --bindDn uid=admin \
 --bindPassword password \
 --backend-name dsEvaluation \
 --set "disk-low-threshold:10 GB" \
 --set "disk-full-threshold:5 GB" \
 --usePkcs12TrustStore /path/to/opendj/config/keystore \
 --trustStorePassword:file /path/to/opendj/config/keystore.pin \
 --no-prompt

The properties disk-low-threshold and disk-full-threshold are listed as advanced properties.

To examine their values with the dsconfig command, use the --advanced option:

$ dsconfig \
 get-backend-prop \
 --advanced \
 --hostname localhost \
 --port 4444 \
 --bindDn uid=admin \
 --bindPassword password \
 --backend-name dsEvaluation \
 --property disk-low-threshold \
 --property disk-full-threshold \
 --usePkcs12TrustStore /path/to/opendj/config/keystore \
 --trustStorePassword:file /path/to/opendj/config/keystore.pin \
 --no-prompt
Property            : Value(s)
--------------------:---------
disk-full-threshold : 5 gb
disk-low-threshold  : 10 gb

Entry expiration

If the directory service creates many entries that expire and should be deleted, you could find the entries with a time-based search and then delete them individually. That approach uses replication to delete the entries in all replicas. It has the disadvantage of generating potentially large amounts of replication traffic.

Entry expiration lets you configure the backend database to delete the entries as they expire. This approach deletes expired entries at the backend database level, without generating replication traffic. AM uses this approach when relying on DS to delete expired token entries, as demonstrated in Install DS for AM CTS.

Backend indexes for generalized time (timestamp) attributes have these properties to configure automated, optimized entry expiration and removal: ttl-enabled and ttl-age.

Configure this capability by performing the following steps:

- Prepare an ordering index for a generalized time (timestamp) attribute on entries that expire.

For details, refer to Configure indexes and Active accounts.

- Using the dsconfig set-backend-index-prop command, set ttl-enabled on the index to true, and set ttl-age on the index to the desired entry lifetime duration.

- Optionally enable the access log to record messages when the server deletes an expired entry.

Using the dsconfig set-log-publisher-prop command, set suppress-internal-operations:false for the access log publisher. Note that this causes the server to log messages for all internal operations.

When the server deletes an expired entry, it logs a message with "additionalItems":{"ttl": true} in the response.

Once you configure and build the index, the backend can delete expired entries. At intervals of 10 seconds, the backend automatically deletes entries whose timestamps on the attribute are older than the specified lifetime. Entries that expire in the interval between deletions are removed on the next round. Client applications should therefore check that entries have not expired, as it is possible for expired entries to remain available until the next round of deletions.
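Because an expired entry can remain readable until the next round of deletions, a client can apply the same age test itself. This is a minimal sketch under stated assumptions: the function name is_expired is hypothetical, times are whole seconds since the epoch, and the client is assumed to have already converted the entry's generalized time value and the ttl-age duration to seconds:

```shell
# Illustrative only: succeed (exit 0) when the timestamp is older than the TTL.
#   $1 = current time, $2 = entry timestamp (both seconds since the epoch)
#   $3 = ttl-age in seconds
is_expired() {
  [ $(( $1 - $2 )) -gt "$3" ]
}

# An entry stamped 100 seconds ago with a 60-second lifetime:
is_expired 1000 900 60 && echo "treat as expired"   # prints treat as expired
```

A client that performs this check does not depend on where the entry falls within the backend's deletion interval.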

When using this capability, keep the following points in mind:

- Entry expiration is per index. The time to live depends on the value of the indexed attribute and the ttl-age setting, and all matching entries are subject to TTL.

- If multiple indexes' ttl-enabled and ttl-age properties are configured, as soon as one of the entry’s matching attributes exceeds the TTL, the entry is eligible for deletion.

- The backend deletes the entries directly as an internal operation. The server only records the deletion when suppress-internal-operations: false for the access log publisher. Persistent searches do not return the deletion.

Furthermore, this means that deletion is not replicated. To ensure expired entries are deleted on all replicas, use the same indexes with the same settings on all replicas.

- When a backend deletes an expired entry, the effect is a subtree delete. In other words, if a parent entry expires, the parent entry and all the parent’s child entries are deleted.

If you do not want parent entries to expire, index a generalized time attribute that is only present on its child entries.

- The backend deletes expired entries atomically.

If you update the TTL attribute to prevent deletion and the update succeeds, then TTL has effectively been reset.

- Expiration fails when the index-entry-limit is exceeded. (For background information, refer to Index entry limits.)

This only happens if the timestamp for the indexed attribute matches to the nearest millisecond on more than 4000 entries (for default settings). This corresponds to four million timestamp updates per second, which would be very difficult to reproduce in a real directory service.

It is possible, however, to construct and import an LDIF file where more than 4000 entries have the same timestamp. Make sure not to reuse the same timestamp for thousands of entries when artificially creating entries that you intend to expire.
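Before importing generated LDIF, you could check it for timestamps shared by too many entries. The following sketch is not a DS tool: the function name over_limit_values and the attribute name expirationTime in the usage note are hypothetical. It handles only simple "attr: value" lines, not base64-encoded ("attr:: ...") or folded lines, and it matches the attribute name case-sensitively:

```shell
# Illustrative only: report values of one LDIF attribute that occur more
# than "limit" times, printing each value followed by its count.
#   $1 = LDIF file, $2 = attribute name, $3 = limit (default 4000)
over_limit_values() {
  awk -v attr="$2" -v limit="${3:-4000}" '
    # Lines that start with "attr: " contribute their value to the tally.
    index($0, attr ": ") == 1 { count[substr($0, length(attr) + 3)]++ }
    END { for (v in count) if (count[v] > limit) print v, count[v] }
  ' "$1"
}
```

For example, over_limit_values example.ldif expirationTime prints nothing when no timestamp value is shared by more than 4000 entries.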

Add a base DN to a backend

The following example adds the base DN o=example to the dsEvaluation backend, and creates a replication domain configuration to replicate the data under the new base DN:

$ dsconfig \
 set-backend-prop \
 --hostname localhost \
 --port 4444 \
 --bindDn uid=admin \
 --bindPassword password \
 --backend-name dsEvaluation \
 --add base-dn:o=example \
 --usePkcs12TrustStore /path/to/opendj/config/keystore \
 --trustStorePassword:file /path/to/opendj/config/keystore.pin \
 --no-prompt

$ dsconfig \
 get-backend-prop \
 --hostname localhost \
 --port 4444 \
 --bindDn uid=admin \
 --bindPassword password \
 --backend-name dsEvaluation \
 --property base-dn \
 --usePkcs12TrustStore /path/to/opendj/config/keystore \
 --trustStorePassword:file /path/to/opendj/config/keystore.pin \
 --no-prompt
Property : Value(s)
---------:-------------------------------
base-dn  : "dc=example,dc=com", o=example

$ dsconfig \
 create-replication-domain \
 --provider-name "Multimaster Synchronization" \
 --domain-name o=example \
 --type generic \
 --set enabled:true \
 --set base-dn:o=example \
 --hostname localhost \
 --port 4444 \
 --bindDN uid=admin \
 --bindPassword password \
 --usePkcs12TrustStore /path/to/opendj/config/keystore \
 --trustStorePassword:file /path/to/opendj/config/keystore.pin \
 --no-prompt

When you add a base DN to a backend, the base DN must not be subordinate to any other base DNs in the backend.

As in the commands shown here, you can add the base DN o=example to a backend that already has a base DN dc=example,dc=com.

You cannot, however, add o=example,dc=example,dc=com as a base DN, because that is a child of dc=example,dc=com.
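The rule can be checked ahead of time with a simple suffix test. This sketch is only an approximation: the function name is_subordinate is hypothetical, and it compares raw strings, whereas real DN matching also normalizes case and spacing:

```shell
# Illustrative only: succeed (exit 0) when DN $1 is a child of base DN $2.
is_subordinate() {
  case "$1" in
    *,"$2") return 0 ;;
    *) return 1 ;;
  esac
}

is_subordinate "o=example,dc=example,dc=com" "dc=example,dc=com" \
  && echo "rejected: child of an existing base DN"
is_subordinate "o=example" "dc=example,dc=com" \
  || echo "allowed: not subordinate"
```

Running the check against each base DN already configured for the backend covers the cases described above.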

Delete a backend

When you delete a database backend with the dsconfig delete-backend command, the directory server does not actually remove the database files for these reasons:

- A mistake could potentially cause lots of data to be lost.

- Deleting a large database backend could cause severe service degradation due to a sudden increase in I/O load.

After you run the dsconfig delete-backend command, manually remove the database backend files, and remove the replication domain configurations for any base DNs you deleted by removing the backend.

If you run the dsconfig delete-backend command by mistake, but have not yet deleted the actual files, recover the data by creating the backend again, and reconfiguring and rebuilding the indexes.